Understanding DeepSpeed: Microsoft’s Game-Changing Optimization Library for Large-Scale AI Models

As artificial intelligence (AI) models grow increasingly large and complex, finding efficient ways to train them and run inference on them has become a critical challenge. One tool that stands out in addressing this issue is DeepSpeed, an open-source optimization library developed by Microsoft. Designed to improve the training and inference of large-scale AI models, it plays a crucial role in powering the latest advancements in AI, particularly those relying on Transformer architectures and language models with billions of parameters.

In this article, we’ll explore what makes it a valuable tool in the world of AI, including its core features, innovations, and key advantages compared to other optimization libraries.

What is DeepSpeed?

DeepSpeed is a deep learning optimization library that offers cutting-edge solutions for training large-scale models efficiently. Developed by Microsoft, it integrates seamlessly with the PyTorch framework and has gained significant traction in the AI community due to its ability to scale models up to 1 trillion parameters while optimizing memory usage and improving training speeds.

From language models like BERT and Turing-NLG to AI research projects, it has been instrumental in unlocking new possibilities by pushing the limits of model size, training time, and resource efficiency.

Core Features of DeepSpeed

- Zero Redundancy Optimizer (ZeRO):

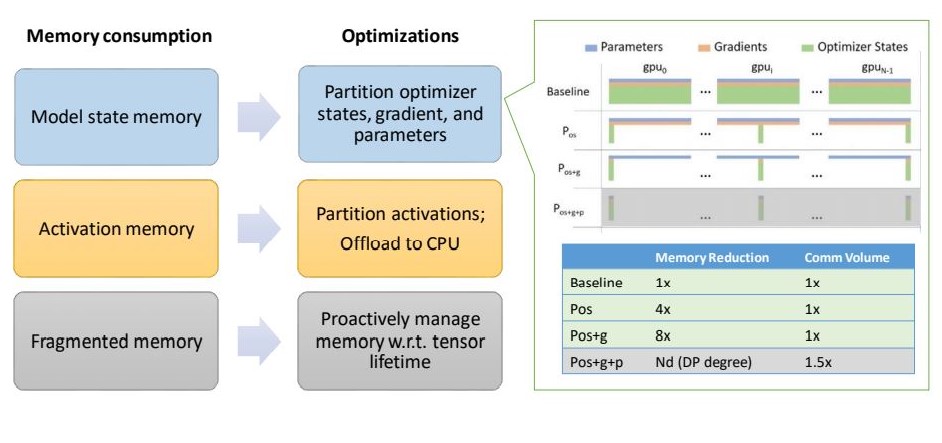

At the heart of DeepSpeed is the ZeRO (Zero Redundancy Optimizer), a highly efficient optimization technique that enables models with up to 1 trillion parameters to be trained by optimizing memory usage across GPUs. ZeRO reduces memory consumption by partitioning the optimizer states, gradients, and parameters across multiple GPUs, which allows for better resource utilization. This makes DeepSpeed a leading tool for memory-efficient distributed training.

- High Performance:

It shines when it comes to high-throughput training. For example, it has been shown to train BERT-large in just 44 minutes using 1024 V100 GPUs. This performance improvement is a major draw for organizations and researchers working with time-sensitive projects.

- Flexible Model Training:

Whether you are working with single-GPU, multi-GPU, or multi-node setups, it offers flexibility that suits various training configurations. It is designed to require minimal changes to existing PyTorch code, making it easy for developers and researchers to adopt.

- Advanced Inference Capabilities:

It doesn’t just excel in training—its inference optimizations are equally impressive. By leveraging tensor and pipeline parallelism, it ensures low-latency and high-throughput execution during inference, making it well-suited for deployment in real-time applications.
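
To make the ZeRO memory savings described earlier in this section concrete, here is a rough back-of-the-envelope sketch. It assumes the accounting used in the ZeRO paper for mixed-precision Adam training (2 bytes per parameter for fp16 weights, 2 for fp16 gradients, 12 for fp32 optimizer states) and ignores activations and temporary buffers. The function name `zero_memory_gb` is hypothetical and is not part of the DeepSpeed API.

```python
def zero_memory_gb(num_params, num_gpus, stage):
    """Approximate per-GPU model-state memory in GB for mixed-precision Adam.

    Assumed accounting (from the ZeRO paper): 2 bytes/param for fp16 weights,
    2 bytes/param for fp16 gradients, 12 bytes/param for fp32 optimizer
    states. Activations and temporary buffers are not counted.
    """
    params = 2 * num_params
    grads = 2 * num_params
    optim = 12 * num_params
    if stage >= 1:      # stage 1 partitions the optimizer states
        optim /= num_gpus
    if stage >= 2:      # stage 2 also partitions the gradients
        grads /= num_gpus
    if stage >= 3:      # stage 3 also partitions the parameters
        params /= num_gpus
    return (params + grads + optim) / 1e9


if __name__ == "__main__":
    # Illustrative setting: a 7.5B-parameter model on 64 GPUs.
    for stage in range(4):
        gb = zero_memory_gb(7.5e9, 64, stage)
        print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

Under these assumptions, stage 0 corresponds to plain data parallelism, where every GPU holds a full copy of all model states, and each later stage divides one more component by the number of GPUs.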

DeepSpeed Innovations

Microsoft’s innovation is evident across various areas in DeepSpeed:

- DeepSpeed-Training:

This includes features like 3D parallelism and ZeRO-Infinity, which together enable the efficient scaling of AI models. 3D parallelism combines data, tensor, and pipeline parallelism to further enhance performance at scale.

- DeepSpeed-Inference:

It focuses on optimizing inference with high-performance kernels and communication strategies, which enable efficient execution for large-scale models.

- DeepSpeed-Compression:

Compression techniques help reduce model size with little loss in accuracy, making it easier to deploy models in environments with constrained resources, such as edge devices.
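
The idea behind 3D parallelism mentioned above can be sketched as a grid: the total number of GPUs is the product of the data, pipeline, and tensor parallel degrees, and each GPU communicates with a different set of peers along each axis. The rank ordering below is an assumption chosen for illustration and may not match how DeepSpeed actually lays out its process groups. The helper names `build_3d_grid` and `peers` are hypothetical.

```python
from itertools import product


def build_3d_grid(data_parallel, pipeline_parallel, tensor_parallel):
    """Map each global rank to (data, pipeline, tensor) coordinates.

    Assumed layout for illustration: the tensor-parallel index varies
    fastest, so tensor-parallel peers have adjacent rank numbers.
    """
    coords = product(range(data_parallel),
                     range(pipeline_parallel),
                     range(tensor_parallel))
    return {rank: c for rank, c in enumerate(coords)}


def peers(grid, rank, axis):
    """Ranks that match `rank` on every coordinate except `axis`.

    axis 0 = data parallel, 1 = pipeline parallel, 2 = tensor parallel.
    """
    me = grid[rank]
    return [r for r, c in grid.items()
            if all(c[i] == me[i] for i in range(3) if i != axis)]


if __name__ == "__main__":
    grid = build_3d_grid(data_parallel=2, pipeline_parallel=2, tensor_parallel=2)
    print("world size:", len(grid))
    print("rank 5 coordinates:", grid[5])
    print("tensor-parallel peers of rank 5:", peers(grid, 5, axis=2))
    print("pipeline peers of rank 5:", peers(grid, 5, axis=1))
    print("data-parallel peers of rank 5:", peers(grid, 5, axis=0))
```

In this toy layout, GPUs in the same tensor-parallel group split individual layers, GPUs in the same pipeline group hold consecutive stages of the model, and GPUs in the same data-parallel group hold the same shard and process different batches.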

Comparing DeepSpeed with Other Optimization Libraries

DeepSpeed has distinguished itself from other deep learning libraries through its unique combination of features and performance improvements. Let’s take a closer look at how it compares:

- Memory Optimization:

It uses the ZeRO technique to reduce memory usage by partitioning model states (optimizer states, gradients, and parameters) across multiple devices. This allows it to handle models with hundreds of billions of parameters efficiently, up to the trillion-parameter scale noted earlier. By comparison, PyTorch Distributed Data Parallel replicates the full model states on every device, leading to higher memory consumption.

- Ease of Integration:

It is highly compatible with PyTorch and requires minimal changes to the original model code, making it an attractive choice for researchers who want to implement optimization techniques quickly. In contrast, other libraries may require extensive modifications to integrate effectively.

- Training Speed:

It has been demonstrated to train large models up to 10x faster than traditional methods, making it a top performer for projects that rely on high-speed training. Other libraries may not achieve the same level of performance, especially for models at the extreme high end of the scale.

- Support for Sparse Models:

It supports both dense and sparse models, making it versatile across different AI tasks. In comparison, many libraries focus primarily on dense models and may require additional configurations for sparse model training.
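
As a rough illustration of the "minimal changes" point above, most of the setup lives in a JSON-style configuration rather than in the model code. The sketch below builds such a configuration in plain Python. The keys follow the DeepSpeed configuration format as I understand it, the numeric values are illustrative, and the helper `make_ds_config` is hypothetical. The commented-out lines show how the config would typically be handed to `deepspeed.initialize`, assuming DeepSpeed is installed and that `model` is a `torch.nn.Module` whose forward pass returns a loss.

```python
import json


def make_ds_config(micro_batch_per_gpu, grad_accum_steps, world_size, zero_stage=2):
    """Build a DeepSpeed-style config dict (hypothetical helper).

    DeepSpeed expects train_batch_size to equal
    micro_batch_per_gpu * grad_accum_steps * world_size.
    """
    if zero_stage not in (0, 1, 2, 3):
        raise ValueError("ZeRO stage must be 0, 1, 2, or 3")
    return {
        "train_batch_size": micro_batch_per_gpu * grad_accum_steps * world_size,
        "train_micro_batch_size_per_gpu": micro_batch_per_gpu,
        "gradient_accumulation_steps": grad_accum_steps,
        "optimizer": {"type": "AdamW", "params": {"lr": 1e-4}},
        "fp16": {"enabled": True},
        "zero_optimization": {"stage": zero_stage},
    }


ds_config = make_ds_config(micro_batch_per_gpu=4, grad_accum_steps=8, world_size=16)
print(json.dumps(ds_config, indent=2))

# Not executed here; requires DeepSpeed, GPUs, and a user-defined `model`:
#
#   import deepspeed
#   model_engine, optimizer, _, _ = deepspeed.initialize(
#       model=model, model_parameters=model.parameters(), config=ds_config)
#   loss = model_engine(batch)     # assumes the model's forward returns a loss
#   model_engine.backward(loss)    # used in place of loss.backward()
#   model_engine.step()            # used in place of optimizer.step()
```

Switching between plain data parallelism and the different ZeRO stages then comes down to changing the `stage` value in the config, while the training loop stays the same.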

DeepSpeed’s Inference Optimization

In addition to training, it offers several innovations that greatly enhance inference performance for large-scale models. These are particularly important for real-world AI applications, where inference speed and cost-efficiency are critical.

- Inference-Adapted Parallelism:

This feature dynamically selects the best parallelism strategies for multi-GPU setups, optimizing for both latency and cost. It achieves up to 6.9x better performance than traditional methods, making it ideal for deploying large-scale AI models.

- Optimized CUDA Kernels:

It employs specialized CUDA kernels that maximize GPU utilization using techniques like deep fusion and advanced kernel scheduling. This results in speedups ranging from 1.9x to 4.4x depending on the task.

- Tensor-Slicing Parallelism:

It automatically partitions model parameters across multiple GPUs, reducing latency and enabling parallel execution, a critical feature when working with billion-parameter models.

- Quantization Support:

DeepSpeed also provides support for quantized inference, allowing models to run at reduced precision with little loss in accuracy. This translates into lower memory usage and faster inference times.
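
To give a feel for why reduced precision saves memory at a small cost in accuracy, here is a minimal sketch of symmetric int8 quantization in plain Python. This is a generic textbook scheme, not DeepSpeed's actual quantization kernels. The function names and the sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.52, -1.30, 0.07, 0.91, -0.44]   # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("scale:", scale)
print("max round-trip error:", max_err)
```

Each value is stored in 1 byte instead of 4 bytes for fp32 (or 2 for fp16), and the round-trip error per value is bounded by about half of `scale`, which is why accuracy often degrades only slightly.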

Adoption in the AI Community

DeepSpeed has been rapidly adopted by the deep learning community and is used to train some of the largest language models available today. Its ability to scale massive models while maintaining high performance and low resource overhead makes it a go-to choice for researchers and organizations looking to push the limits of deep learning.

Moreover, the open-source nature of DeepSpeed encourages collaboration and contributions from a broad user base, ensuring continuous improvement and innovation. With backing from Microsoft Research and integration into cutting-edge AI projects, it is becoming indispensable for advanced AI development.

Conclusion

DeepSpeed represents a significant leap forward in deep learning optimization, offering powerful tools that enable the efficient training and inference of large-scale models. Its Zero Redundancy Optimizer (ZeRO), innovations in parallelism, and support for both dense and sparse models make it an essential tool for modern AI researchers.

With its ease of integration, advanced features, and high performance in both training and inference, it stands out as a leading solution for organizations and developers looking to scale their AI models efficiently.

Whether you’re working on the next billion-parameter language model or optimizing AI solutions for real-time applications, it delivers the necessary performance and flexibility to make these projects possible.