Transformers, Diffusion Models, and RNNs: A Comprehensive Comparison

Introduction

Over the past few years, machine learning has evolved at a breakneck pace, producing results that once seemed like science fiction. Three model architectures stand out in this shift: Transformers, Diffusion Models, and Recurrent Neural Networks (RNNs). Transformers have redefined natural language processing, making it possible for machines to interpret and generate human language with remarkable fluency. Diffusion Models are pushing the boundaries of image generation, creating visuals that can be hard to tell apart from real photos. RNNs have long been a workhorse for sequential data, from time series forecasting to speech recognition.

In this article, we’ll take a journey through these three models. We’ll unpack their architectures, look at how they’re being applied in various fields, and discuss the pros and cons of each. Whether you’re new to machine learning or looking to deepen your understanding, this overview aims to shed light on the tools that are shaping our digital world.

Overview of Each Model

Transformers

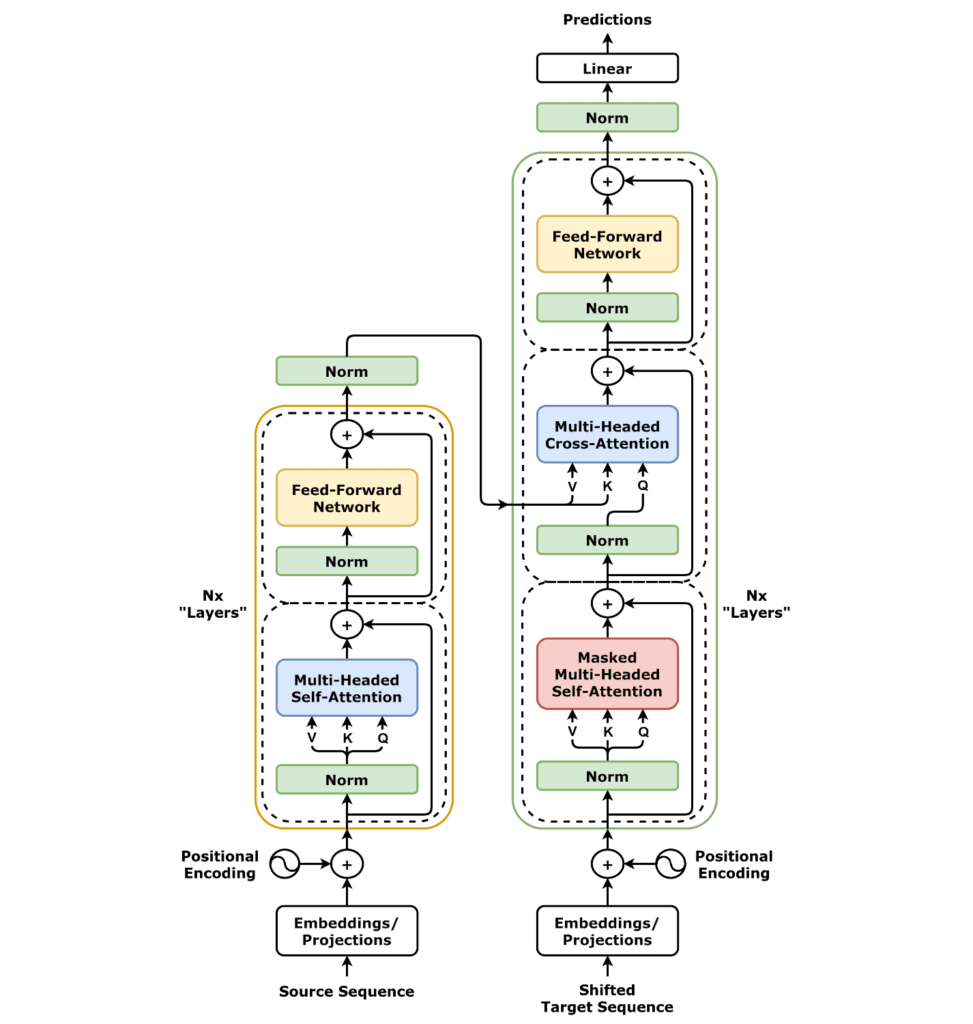

Transformers have been nothing short of revolutionary in the field of natural language processing (NLP). Introduced by Vaswani et al. in 2017, the Transformer architecture brought a paradigm shift with its self-attention mechanism. Unlike traditional sequential models that process data step by step, Transformers can handle entire sequences of data simultaneously. Because every position can attend directly to every other position, they capture long-range dependencies without passing information through each intermediate step, and the computation parallelizes well on modern hardware.

So, what does this mean in practical terms? Consider machine translation. Translating a sentence from one language to another requires understanding the context and nuances throughout the entire sentence. Transformers excel at this by weighing the importance of each word in relation to the others, thanks to self-attention. This has led to significant improvements in translation accuracy and fluency.
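To make the idea of "weighing the importance of each word in relation to others" concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not a full Transformer layer: the learned query, key, and value projections are left out, the same toy vectors are used for all three roles, and the function names and 2-dimensional embeddings are made up for this example.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    queries, keys, values: lists of vectors, one per token.
    Returns one output vector per token plus the attention weights.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: three "tokens" with hypothetical 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token attends to every other token. In this toy input the first token puts more weight on itself and the third token than on the second, because its dot product with those vectors is larger.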

But the impact of Transformers isn’t limited to text. They’ve also made inroads into computer vision with models like Vision Transformers (ViT), which apply the same self-attention principles to image recognition tasks. By treating image patches as sequences, these models have achieved impressive results in image classification and even in generating new images.
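The "image patches as sequences" step can be sketched in a few lines. This is a rough illustration only: the helper name `image_to_patches`, the 4x4 grayscale image, and the patch size are assumptions for the example, and a real Vision Transformer would also project each patch to an embedding and add position information.

```python
def image_to_patches(image, patch_size):
    """Split a 2-D image (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Read the patch row by row and flatten it into one vector.
            patch = [image[top + dy][left + dx]
                     for dy in range(patch_size)
                     for dx in range(patch_size)]
            patches.append(patch)
    return patches

# Hypothetical 4x4 grayscale image whose pixel values are 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)
```

The result is a sequence of four flattened patches, which plays the same role for the model that a sequence of word embeddings plays in text.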

The scalability of Transformers has given rise to large-scale models like BERT and GPT, which have set new benchmarks in various NLP tasks. These models can generate human-like text, summarize documents, answer questions, and more. The ability to train on massive datasets while capturing complex patterns has opened up possibilities we couldn’t have imagined just a few years ago.

Diffusion Models

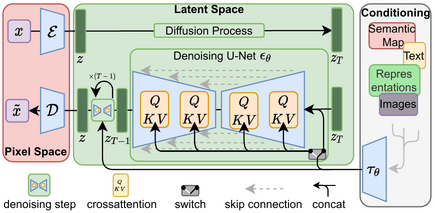

Diffusion Models are emerging as powerful tools in the realm of generative modeling, particularly for image synthesis. At their core, these models take a fascinating approach: they start with pure noise and learn to transform it into coherent data through a gradual denoising process. Think of it like developing a photo from a blank, grainy image into a clear picture, step by step.
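To learn that denoising process, a diffusion model is typically trained on examples that have had noise added to them in controlled amounts. The sketch below shows that noising side only, assuming a DDPM-style formulation with a linear noise schedule. The article does not commit to a specific variant, so the schedule values, function names, and the four-number stand-in for an image are all illustrative assumptions.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (illustrative values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t]: share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t from x_0 in one shot: sqrt(a) * x0 + sqrt(1 - a) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal(betas)
x0 = [0.5, -0.2, 0.9, 0.1]                          # stand-in for a tiny "image"
x_early, _ = add_noise(x0, 10, alpha_bars, rng)     # still close to x0
x_late, _ = add_noise(x0, 999, alpha_bars, rng)     # almost pure noise
```

During training, a network would be asked to predict `noise` from `x_t` and `t`. Generation then runs the other way: start from pure noise and remove a little predicted noise at each step.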

This iterative process allows Diffusion Models to generate high-quality, diverse samples that are remarkably detailed. They’ve been responsible for some of the most impressive image generation results to date, producing visuals that are often hard to distinguish from real photographs. Applications range from creating art and animations to assisting in medical imaging by generating synthetic data for training purposes.

One of the standout features of Diffusion Models is their stability during training. Generative Adversarial Networks (GANs) can be notoriously difficult to train due to issues like mode collapse, whereas Diffusion Models are generally more robust. This makes them attractive for researchers and developers looking to build reliable generative systems.

Beyond images, the principles behind Diffusion Models are being explored in other domains like audio generation and text synthesis. Their flexibility and effectiveness suggest that we’re only scratching the surface of what they can achieve.

Recurrent Neural Networks (RNNs)

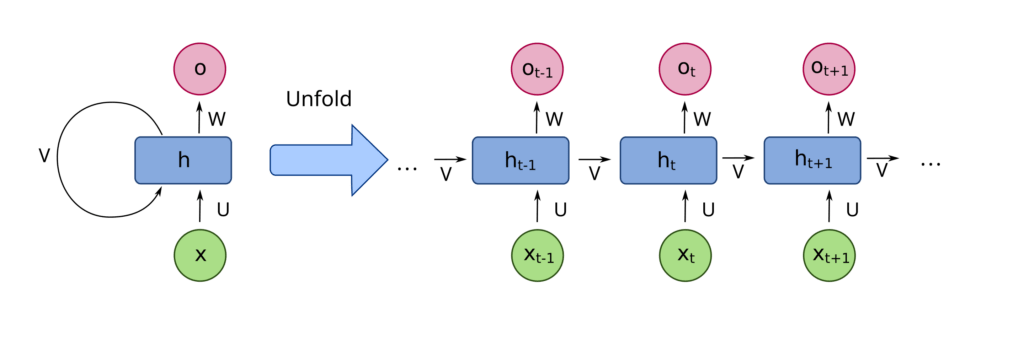

Recurrent Neural Networks have long been the go-to models for sequential data. They’re designed with the idea of “memory” in mind, allowing them to maintain an internal state that captures information about previous inputs in a sequence. This makes RNNs particularly well-suited for tasks where the order and context of data points are crucial, such as time series forecasting, language modeling, and speech recognition.

However, standard RNNs come with their own set of challenges. One major issue is the difficulty in learning long-term dependencies due to problems like vanishing or exploding gradients during training. This means that while they can handle short sequences effectively, they might struggle when the important information is spread out over longer sequences.
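The internal state described above can be illustrated with a vanilla RNN cell written in plain Python. This is a sketch with hand-picked, hypothetical weights (one input feature, two hidden units), not a trained model. It shows the hidden state being carried from step to step, and how the trace of an early input fades as later steps arrive.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, bias):
    """One step of a vanilla RNN: h_t = tanh(W_xh x + W_hh h_prev + b)."""
    h_new = []
    for i in range(len(h_prev)):
        total = bias[i]
        total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(w_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, w_xh, w_hh, bias):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = [0.0] * len(bias)
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_xh, w_hh, bias)
        states.append(h)
    return states

# Hypothetical hand-picked weights: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
bias = [0.0, 0.0]
sequence = [[1.0], [0.0], [0.0]]   # a single "pulse" followed by silence
states = run_rnn(sequence, w_xh, w_hh, bias)
```

After the pulse, the hidden state stays non-zero even though the inputs are zero, which is the "memory". Its magnitude shrinks at every step, though, and that shrinking is a small-scale picture of why plain RNNs have trouble with information that has to survive many steps.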

To overcome this, advanced variants like Long Short-Term Memory networks (LSTMs) and Gated Recurrent Units (GRUs) were developed. These architectures introduce gating mechanisms that control the flow of information, allowing the network to retain relevant information over longer periods and forget what’s not important. This significantly improves their ability to model long-range dependencies.
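A gating mechanism of this kind can be sketched with a single-unit GRU. The version below is a simplified illustration: it uses scalars instead of vectors and matrices, follows one common convention for the update gate (conventions differ between papers and libraries), and the weights in `params` are hypothetical values chosen by hand so that the update gate favors keeping the old state.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU; p is a dict of hypothetical weights."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])   # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])   # reset gate
    candidate = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # z near 1 keeps the old state; z near 0 overwrites it with the candidate.
    return z * h_prev + (1.0 - z) * candidate

# Hypothetical weights. The positive b_z biases the update gate toward "keep",
# while the negative w_z opens the gate when an input arrives.
params = {"w_z": -6.0, "u_z": 0.0, "b_z": 4.0,
          "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
          "w_h": 2.0, "u_h": 0.0, "b_h": 0.0}

h = 0.0
history = []
for x in [1.0] + [0.0] * 20:   # one pulse, then 20 silent steps
    h = gru_step(x, h, params)
    history.append(h)
```

With these weights the state written by the first input is still more than half its original size after 20 silent steps. In a trained network the gates are learned rather than set by hand, so the model decides for itself what to keep and what to forget.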

In practical applications, RNNs and their variants have been widely used in areas like language translation, where understanding the context of entire sentences is crucial, and in speech-to-text systems that need to process audio signals over time. Even though newer models like Transformers have taken center stage in many NLP tasks, RNNs remain valuable tools, especially in scenarios where data is inherently sequential and the model needs to process inputs one at a time.

Performance on Different Tasks

| Task | Transformers | Diffusion Models | RNNs |
|---|---|---|---|
| Language Modeling | Excellent: self-attention captures long-range dependencies and context over entire sequences, leading to high-quality text generation. | Not Typically Used: Diffusion Models were designed for continuous data such as images, and applying them to discrete text is still exploratory. | Good for Short Sequences: RNNs can handle sequential text but struggle with long-term dependencies, making them less effective than Transformers on longer texts. |
| Machine Translation | Excellent: by processing entire sentences simultaneously, Transformers provide accurate translations that reflect context and relationships between words in both languages. | Not Typically Used: they don’t naturally model the discrete, sequential nature of language. | Good for Short Sentences: RNNs can translate short sentences but may lose context in longer ones due to vanishing gradients and a limited ability to capture long-range dependencies. |
| Image Generation | Good: Vision Transformers can generate images by treating image patches as sequences, but they are generally less effective than Diffusion Models in this domain. | State-of-the-Art: Diffusion Models lead in image generation, producing high-fidelity images through iterative denoising and surpassing other generative models in quality and diversity. | Limited Use: RNNs are not typically used for image generation because their sequential nature doesn’t align well with the spatial dimensions of images. |
| Speech Recognition | Very Good: Transformers process entire audio sequences, capturing long-term dependencies and producing accurate transcriptions. | Emerging Applications: Diffusion Models are being explored for audio tasks but are not yet standard for speech recognition. | Excellent with LSTM/GRU: RNNs, particularly LSTM and GRU variants, handle temporal dependencies in audio data effectively. |
| Generative Modeling | Good: Transformers generate text and other sequential data effectively but are less dominant in image generation than Diffusion Models. | State-of-the-Art: Diffusion Models excel at generating high-quality images and are expanding into audio and other continuous data domains. | Limited Use: RNNs are less effective for generative modeling, especially for long sequences, because they struggle to maintain coherence and capture global structure. |

Applications in the Real World

Transformers

- Machine Translation: Transformers have set new standards in translating text from one language to another. By understanding the context and relationships between words in a sentence, they provide translations that are both accurate and natural-sounding.

- Text Summarization: They can distill lengthy documents into concise summaries, capturing the main ideas without losing essential details. This is incredibly useful for quickly digesting news articles, research papers, or reports.

- Question-Answering Systems: Transformers power many AI assistants and chatbots by accurately interpreting questions and retrieving relevant answers. They excel at understanding complex queries and providing precise information from vast datasets.

- Some Computer Vision Tasks: By adapting their self-attention mechanism to images, Transformers have made strides in tasks like image classification and object detection. They analyze visual data to recognize patterns and features, improving accuracy in computer vision applications.

Diffusion Models

- High-Quality Image Generation: Diffusion Models can create incredibly realistic images from scratch. Starting with random noise, they iteratively refine it to produce visuals that are often indistinguishable from real photographs, benefiting fields like art and entertainment.

- Image Editing and Inpainting: They excel at filling in missing parts of an image or altering existing content seamlessly. This is useful for tasks like restoring old photographs, removing unwanted objects, or even changing styles within an image.

- Super-Resolution: Diffusion Models enhance low-resolution images by adding fine details, effectively increasing their clarity and sharpness. This is valuable for improving image quality in photography, security footage, or medical imaging.

- Audio Synthesis: Beyond images, Diffusion Models are being applied to generate realistic audio, including speech and music. They produce high-fidelity sounds that can be used in virtual assistants, game development, and content creation.

Recurrent Neural Networks (RNNs)

- Music Generation: RNNs can compose original music by learning patterns and structures from existing musical pieces. Musicians and producers use them to generate new melodies and harmonies, exploring creative possibilities in composition.

- Time Series Prediction: RNNs are adept at forecasting future values by analyzing sequential data over time. This makes them useful in finance for predicting stock prices, in meteorology for weather forecasting, and in supply chain management for demand planning.

- Speech Recognition: By processing audio signals one step at a time, RNNs can transcribe spoken words into text accurately. They have long powered voice-controlled applications like virtual assistants, transcription services, and voice-activated devices.

- Sentiment Analysis: RNNs analyze sequences of words to determine the underlying sentiment in text, such as identifying whether a customer review is positive or negative. This helps businesses understand customer feedback and monitor public opinion.

Conclusion

Transformers, Diffusion Models, and RNNs each bring unique strengths to machine learning. Transformers excel at understanding long-range dependencies in language, making them ideal for tasks like machine translation and text summarization. Diffusion Models have set new standards in image generation and editing through their innovative denoising approach. RNNs remain valuable for sequential data tasks such as time series prediction and speech recognition, especially when computational resources are limited.

Choosing the right model depends on your specific task, data, and available resources. As we’ve discussed in our previous blogs, understanding each architecture’s strengths and limitations is crucial for informed decision-making in your projects. The field continues to evolve, and staying updated on these advancements will help you leverage the best tools for your machine learning challenges.