Llama 3.2: Revolutionizing AI with Open-Source Multimodal Models

Introduction to Llama 3.2

Meta’s release of Llama 3.2 on September 25, 2024, marks a pivotal moment in the evolution of artificial intelligence. Building upon the success of its predecessors, Llama 3.2 introduces groundbreaking multimodal capabilities, allowing the model to process and understand both text and images with remarkable proficiency. This latest iteration represents more than just an incremental update; it’s a paradigm shift in open-source AI technology. Llama 3.2 is designed to democratize access to advanced AI capabilities, offering a range of models that cater to diverse computing environments – from resource-constrained edge devices to powerful cloud platforms.

What sets Llama 3.2 apart is its commitment to openness and accessibility. As an open-source model, it provides unprecedented transparency and flexibility, allowing developers and researchers to peek under the hood, customize the model for specific use cases, and contribute to its ongoing development. This approach stands in stark contrast to many closed-source models, fostering a collaborative ecosystem that accelerates innovation in the AI field.

Moreover, Llama 3.2 represents Meta’s vision for the future of AI – one where advanced capabilities are not limited to tech giants with vast resources but are available to a broader community of developers, startups, and businesses. This democratization of AI technology has the potential to spark a new wave of innovation across various industries, from healthcare and education to e-commerce and entertainment.

Key Features of Llama 3.2

Vision LLMs (11B and 90B)

Llama 3.2 introduces two powerful vision-capable models that are set to redefine the landscape of multimodal AI:

- 11B Parameter Model:

  - Designed for a wide range of image understanding tasks

  - Balances performance and computational efficiency

  - Suitable for businesses and developers looking to implement advanced visual AI without requiring extensive computational resources

- 90B Parameter Model:

  - Offers state-of-the-art reasoning capabilities for complex visual and textual inputs

  - Ideal for research institutions and enterprises requiring the highest level of AI performance

  - Capable of handling intricate multimodal tasks that combine deep language understanding with sophisticated visual analysis

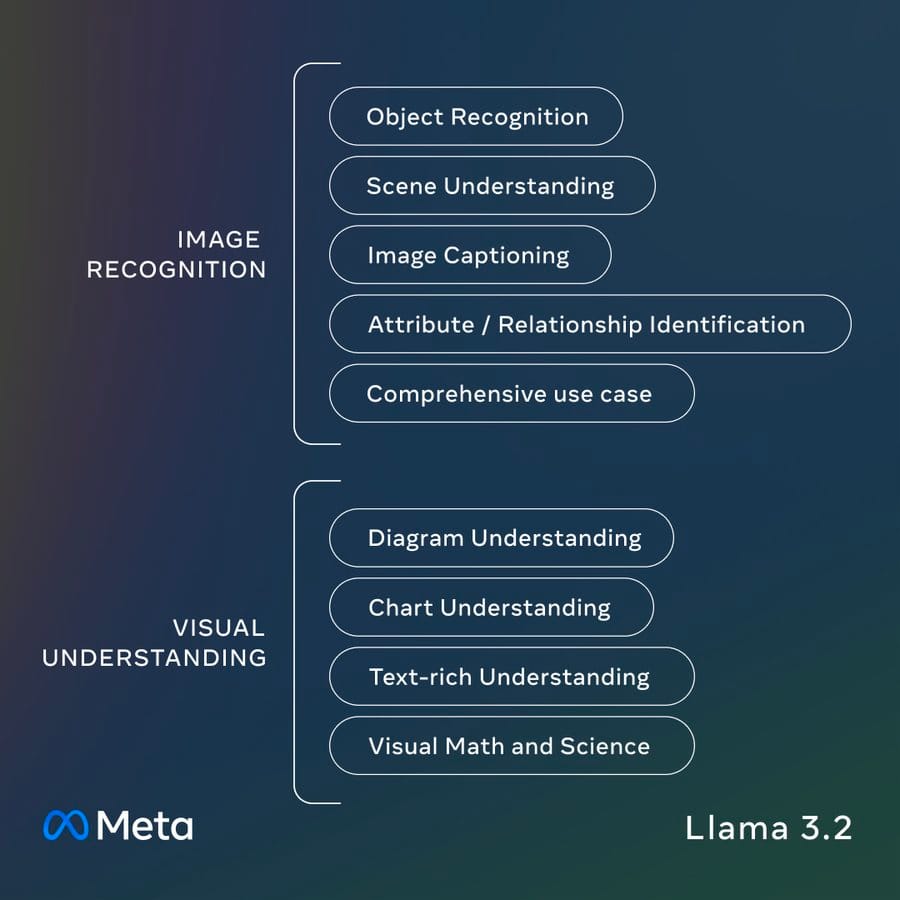

These models support a variety of sophisticated image reasoning use cases:

- Document-level Understanding: Llama 3.2 can analyze complex documents containing both text and visual elements such as charts, graphs, and diagrams. This capability is particularly valuable in fields like financial analysis, scientific research, and business intelligence.

- Image Captioning: The models can generate accurate and contextually relevant captions for images, enhancing accessibility and enabling advanced image search capabilities.

- Visual Grounding Tasks: Llama 3.2 excels at tasks that require linking language descriptions to specific parts of an image. For example, it can identify and locate objects within an image based on natural language queries.

- Scene Understanding: The models can comprehend complex visual scenes, describing the relationships between objects, people, and the environment.

- Visual Question Answering: Users can ask questions about images, and Llama 3.2 can provide accurate answers based on its understanding of the visual content.

These capabilities open up a wide range of applications across industries, from enhancing e-commerce product searches to assisting in medical image analysis.

Lightweight Text Models (1B and 3B)

In addition to the larger vision models, Llama 3.2 introduces two lightweight models optimized for edge computing and mobile applications:

- 1B Parameter Model:

  - Highly efficient for on-device processing

  - Ideal for real-time applications on smartphones and IoT devices

  - Enables privacy-preserving AI by keeping data processing local

- 3B Parameter Model:

  - Offers a balance of performance and efficiency

  - Suitable for more complex on-device tasks without compromising on speed

  - Capable of handling a wider range of language tasks compared to the 1B model

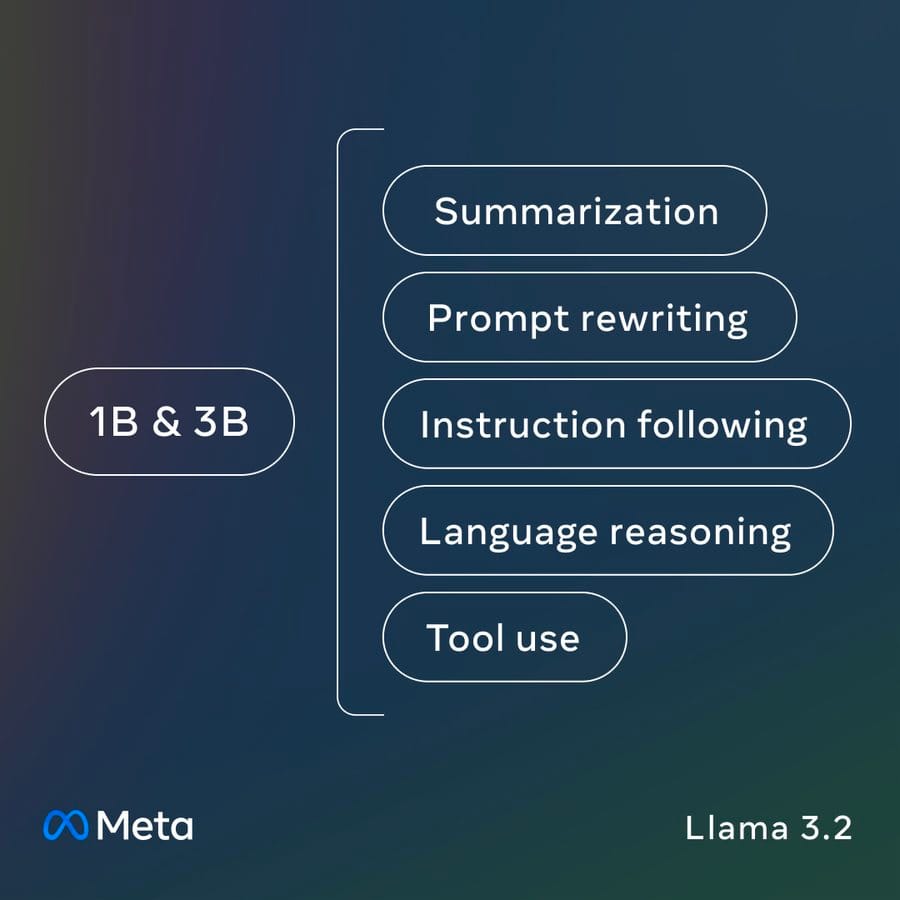

These lightweight models excel in several key areas:

- Multilingual Text Generation: Despite their small size, these models can generate coherent and contextually appropriate text in multiple languages, making them ideal for global applications.

- Tool Calling Abilities: The models can interface with external tools and APIs, enabling them to perform actions beyond mere text generation, such as scheduling appointments or retrieving information from databases.

- On-device Tasks: They’re optimized for common mobile and edge computing tasks, including:

  - Text summarization

  - Instruction following

  - Sentiment analysis

  - Named entity recognition

  - Language translation

The ability to perform these tasks efficiently on-device represents a significant advancement in edge AI, enabling new categories of applications that prioritize privacy, low latency, and offline functionality.
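The tool-calling ability described above can be sketched as a small dispatch step: the model emits a structured call, and the application runs the matching function. This is a minimal sketch, not Meta's implementation. The JSON shape (`name`, `parameters`), the `TOOLS` registry, and the `get_weather` helper are illustrative assumptions.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real application would call a weather API here."""
    return f"Sunny in {city}"


# Hypothetical registry mapping tool names the model may request to callables.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> str:
    """Run the tool named in a JSON tool call, or pass plain text through.

    Assumes the model emits calls shaped like
    {"name": "<tool>", "parameters": {...}}; that shape is an assumption.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        # Not JSON, so treat the output as an ordinary text reply.
        return model_output
    if not isinstance(call, dict):
        return model_output
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        return model_output
    return TOOLS[name](**call.get("parameters", {}))


print(dispatch_tool_call('{"name": "get_weather", "parameters": {"city": "Paris"}}'))
```

In a full application the tool result would normally be fed back to the model so it can compose the final answer; that loop is omitted here for brevity.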

Edge AI and Mobile Compatibility

A standout feature of Llama 3.2 is its optimization for edge and mobile devices, which brings advanced AI capabilities to a wider range of hardware:

- Support for Qualcomm and MediaTek Hardware: This ensures compatibility with a wide range of mobile devices, from high-end smartphones to more affordable models.

- Optimization for Arm Processors: Leveraging the ubiquity of Arm-based chips in mobile devices, Llama 3.2 ensures efficient performance across a broad spectrum of hardware configurations.

- Extended Context Length: All models support a context length of up to 128K tokens, allowing for processing of longer documents and more complex conversations without losing context.

- BFloat16 Numerics: This 16-bit format keeps the 8-bit exponent of 32-bit floating point, and therefore its dynamic range, while shortening the mantissa to 7 bits. Each value takes half the memory and bandwidth of a 32-bit float, enabling faster computation without significant loss in accuracy.
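To make the BFloat16 trade-off concrete, the sketch below converts a 32-bit float to BFloat16 by keeping only its upper 16 bits, with round-to-nearest-even. It uses only the standard library and illustrates the number format itself, not how any particular runtime implements it. The function names are hypothetical, and NaN inputs are not handled.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit BFloat16 pattern for x (round-to-nearest-even)."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Bias so that the 16 discarded bits round to the nearest even value.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16_bits(bits16: int) -> float:
    """Expand a BFloat16 pattern back to a float by zero-filling the low bits."""
    (x,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return x


for value in (1.0, 3.14159, 1e38):
    restored = from_bfloat16_bits(to_bfloat16_bits(value))
    print(f"{value!r} -> {restored!r}")
```

Values such as 1.0 survive exactly, 3.14159 comes back as 3.140625 (only about three significant decimal digits are kept), and very large magnitudes such as 1e38 remain representable because the exponent width matches 32-bit floats.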

These optimizations collectively enable:

- Real-time AI processing on smartphones and tablets

- Enhanced privacy by keeping sensitive data on-device

- Reduced reliance on cloud connectivity for AI tasks

- Lower latency for time-sensitive applications

- Extended battery life through efficient processing

The mobile compatibility of Llama 3.2 paves the way for a new generation of AI-powered mobile applications, from advanced virtual assistants to real-time language translation and image recognition tools.
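A quick back-of-the-envelope calculation shows why the smaller models are the ones that fit on phones. The sketch below estimates memory for the weights alone at 2 bytes per parameter (BFloat16). It uses the nominal parameter counts from the model names, which is an approximation, and ignores activations, the KV cache, and any quantization.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


# Nominal sizes taken from the model names; exact counts differ slightly.
NOMINAL_SIZES = [("1B", 1e9), ("3B", 3e9), ("11B", 11e9), ("90B", 90e9)]

for name, params in NOMINAL_SIZES:
    print(f"{name}: ~{weight_memory_gib(params):.1f} GiB in BFloat16")
```

Under these assumptions the 1B model needs roughly 1.9 GiB for weights, while the 90B model needs well over 100 GiB, which is why the vision models target servers rather than handsets.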

Technical Advancements

Vision Model Architecture

Llama 3.2’s vision capabilities are built on a novel architecture that seamlessly integrates visual understanding with language processing:

- Adapter Weights:

  - Integrate pre-trained image encoders into existing language models

  - Allow for efficient transfer of visual information into a format the language model can process

  - Enable the model to “see” and “understand” images in the context of language

- Cross-attention Layers:

  - Facilitate the alignment of image and language representations

  - Enable the model to attend to relevant parts of an image when processing text, and vice versa

  - Allow for sophisticated reasoning that combines visual and textual information

- Post-training Alignment:

  - Employs supervised fine-tuning, rejection sampling, and direct preference optimization

  - Utilizes synthetic data generation to enhance question-answering capabilities on domain-specific images

  - Incorporates safety mitigation data to ensure responsible AI outputs

This architecture allows Llama 3.2 to perform complex tasks that require a deep understanding of both visual and textual information, such as answering questions about charts, describing the contents of images, and even reasoning about spatial relationships within visual scenes.
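The cross-attention idea can be illustrated with a minimal, single-head sketch in plain Python: each text-token vector acts as a query and attends over image-patch vectors that serve as keys and values. This is a simplification for intuition only. It omits the learned projection matrices, multiple heads, and everything else in the real architecture, and all names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_queries, image_keys, image_values):
    """Single-head scaled dot-product cross-attention.

    Each text query produces a weighted average of the image values,
    weighted by how well the query matches each image key.
    """
    dim = len(image_keys[0])
    value_dim = len(image_values[0])
    outputs = []
    for query in text_queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in image_keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, image_values))
            for j in range(value_dim)
        ])
    return outputs


# One text token attending over two image patches.
query = [[1.0, 0.0]]
keys = [[4.0, 0.0], [0.0, 4.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(cross_attention(query, keys, values))
```

In the usage example the query aligns with the first patch's key, so the output is pulled mostly toward the first patch's value.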

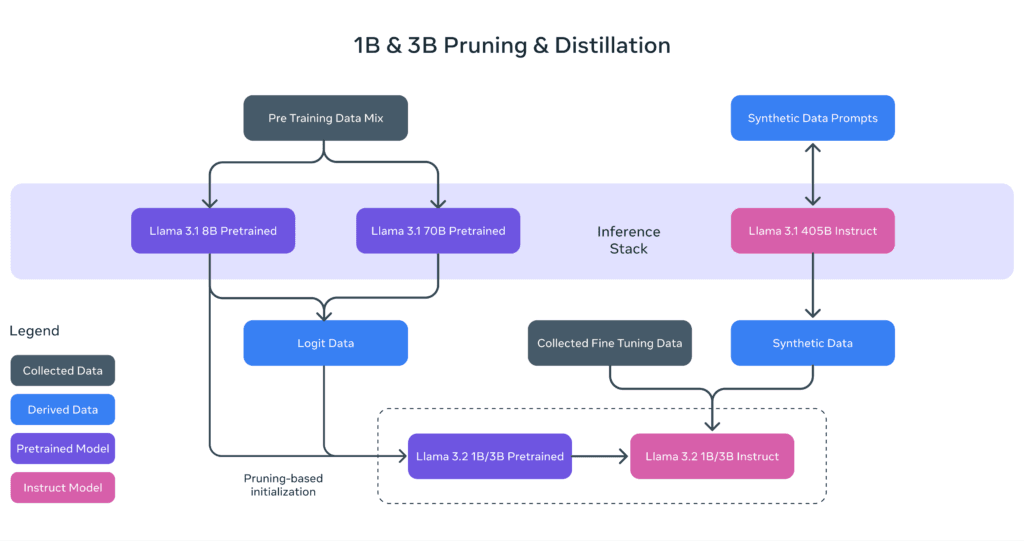

Pruning and Distillation Techniques

To create the efficient lightweight models (1B and 3B), Meta employed advanced pruning and distillation techniques:

- Structured Pruning:

  - Applied to the Llama 3.1 8B model in a single-shot manner

  - Systematically removes less important parts of the network

  - Adjusts the magnitude of weights and gradients to maintain performance

  - Results in smaller, more efficient models that retain much of the original network’s capabilities

- Knowledge Distillation:

  - Utilizes larger Llama 3.1 models (8B and 70B) as “teachers”

  - Incorporates logits from these larger models into the pre-training stage of smaller models

  - Allows smaller models to learn from the more sophisticated reasoning of larger models

  - Enables 1B and 3B models to achieve performance levels closer to their larger counterparts

- Post-training Optimization:

  - Employs supervised fine-tuning (SFT), rejection sampling (RS), and direct preference optimization (DPO)

  - Scales context length support to 128K tokens while maintaining quality

  - Utilizes synthetic data generation with careful processing and filtering

  - Optimizes for high-quality performance across various capabilities like summarization, rewriting, and tool use
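As a rough illustration of structured pruning, the sketch below removes whole neurons (rows of a weight matrix) rather than individual weights. The importance score used here, the L2 norm of each row, is an assumption chosen for simplicity; the criterion Meta actually used is not stated above, and the function name is hypothetical.

```python
import math


def prune_neurons(weight_rows, keep):
    """Keep the `keep` rows with the largest L2 norm, preserving row order.

    Returns the pruned matrix and the indices of the rows that survived,
    so that the next layer's input columns can be pruned to match.
    """
    norms = [math.sqrt(sum(w * w for w in row)) for row in weight_rows]
    ranked = sorted(range(len(weight_rows)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])
    return [weight_rows[i] for i in kept], kept


layer = [
    [0.1, 0.1],   # weak neuron
    [3.0, 4.0],   # strong neuron
    [0.0, 0.2],   # weak neuron
    [1.0, 1.0],   # moderate neuron
]
pruned, kept = prune_neurons(layer, keep=2)
print(kept, pruned)
```

Because entire rows are dropped, the result is a genuinely smaller dense matrix, which is what makes structured pruning attractive for on-device inference compared with scattering zeros through a full-size matrix.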

These techniques allow the lightweight models to punch above their weight, offering impressive capabilities despite their small size. This is crucial for enabling advanced AI features on resource-constrained devices, opening up new possibilities for edge AI applications.
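The distillation step, in which a student model learns from teacher logits, is commonly expressed as a divergence between the two models' output distributions. The sketch below computes the KL divergence from teacher to student for a single token position. The temperature value and function names are illustrative assumptions, not details taken from Meta's training recipe.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled, numerically stable softmax."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) over one token's vocabulary distribution."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(
        p * math.log(p / q) for p, q in zip(teacher, student) if p > 0
    )


teacher_logits = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher_logits))  # student matches teacher
print(distillation_loss([0.5, 1.0, 4.0], teacher_logits))  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which gives the student a richer training signal than a single correct token would.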

Use Cases and Applications

Llama 3.2’s versatility and advanced capabilities open up a myriad of applications across various industries:

- Customer Service: Llama 3.2 can power chatbots with stronger comprehension of customer inquiries, including ones that arrive with a screenshot or product photo. Because the models themselves accept text and image input, voice-activated assistants need a separate speech-to-text front end to combine spoken requests with text and visual inputs. The multilingual models also support language translation, lowering barriers in international customer support. Together, these capabilities allow more natural, efficient, and personalized interactions, which can improve customer satisfaction and reduce the workload on human support agents.

- Healthcare: In healthcare, Llama 3.2 enables comprehensive analysis of medical images in conjunction with patient records, potentially improving diagnostic accuracy. The model assists in interpreting various medical imaging techniques, including X-rays, MRIs, and CT scans. Its ability to process both visual and textual data facilitates drug discovery by analyzing molecular structures alongside research papers. This integration of multimodal data analysis could lead to faster, more accurate diagnoses and treatment plans. The technology has the potential to significantly enhance patient care and medical research outcomes.

- E-commerce: Llama 3.2 enhances e-commerce platforms with visual search capabilities, allowing customers to find products by uploading images. The model provides personalized product recommendations based on user preferences and visual style, improving the shopping experience. Virtual try-on experiences for clothing and accessories become more sophisticated, leveraging the model’s image processing capabilities. These features can increase customer engagement, reduce return rates, and boost sales. The overall result is a more intuitive and personalized online shopping experience.

- Education: In education, Llama 3.2 enables the creation of personalized learning experiences that adapt to individual student needs. Interactive textbooks enhanced with AI-powered explanations and visualizations make complex concepts more accessible. The model facilitates automated grading and feedback systems for essays and problem sets, saving time for educators. This technology can help address diverse learning styles and paces, potentially improving educational outcomes. It also opens up new possibilities for distance learning and self-paced education.

- Augmented Reality (AR): Llama 3.2 significantly enhances AR applications with improved object recognition and tracking capabilities. The model enables real-time translation of text in AR environments, breaking down language barriers in various settings. Intelligent AR assistants powered by Llama 3.2 can provide context-aware information, enhancing user experiences in navigation, tourism, and technical support scenarios. These advancements make AR more practical and useful in everyday life and professional settings. The technology has the potential to transform how we interact with our environment and access information in real-time.

Llama Stack and Ecosystem Support

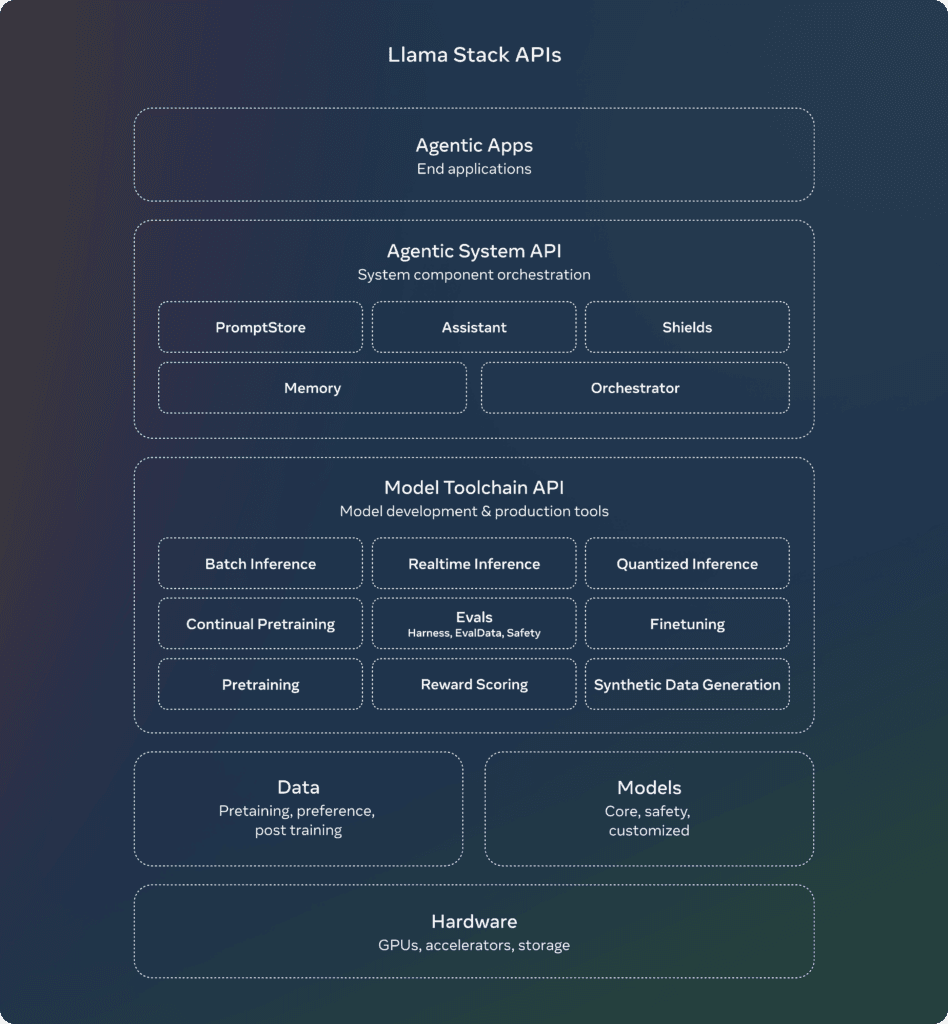

Meta is introducing the Llama Stack to simplify development and deployment of Llama 3.2 models:

- Llama CLI (Command Line Interface):

  - Enables easy building and configuration of Llama Stack distributions

  - Provides a user-friendly interface for developers to interact with Llama models

  - Supports various operations like model selection, fine-tuning, and deployment

- Client Code in Multiple Languages:

  - Python: For data science and backend development

  - Node.js: For web and server-side applications

  - Kotlin: For Android app development

  - Swift: For iOS app development

  - Ensures broad accessibility across different development ecosystems

- Docker Containers:

  - Pre-configured environments for Llama Stack Distribution Server

  - Containers for Agents API Provider

  - Facilitates easy deployment and scaling of Llama-based applications

- Multiple Distribution Options:

  - Single-node distribution via Meta’s internal implementation and Ollama

  - Cloud distributions through partnerships with AWS, Databricks, Fireworks, and Together

  - On-device distribution for iOS implemented via PyTorch ExecuTorch

  - On-premises distribution supported by Dell Technologies

  - Provides flexibility for various deployment scenarios, from individual developers to large enterprises

- Standardized API:

  - Unified interface for inference, tool use, and Retrieval-Augmented Generation (RAG)

  - Simplifies integration of Llama models into existing applications and workflows

- Ecosystem Partnerships: Meta has collaborated with over 25 companies to ensure broad platform support, including:

  - Cloud providers: AWS, Google Cloud, Microsoft Azure

  - Hardware manufacturers: AMD, NVIDIA, Intel

  - Enterprise solutions: IBM, Oracle Cloud, Snowflake

  - Mobile chip designers: Arm, MediaTek, Qualcomm

  These partnerships ensure that Llama 3.2 can be easily deployed and utilized across a wide range of hardware and software environments.

- Open-Source Community Engagement:

  - Encourages contributions and feedback from developers worldwide

  - Fosters a collaborative ecosystem for continuous improvement of Llama models

The Llama Stack and its extensive ecosystem support demonstrate Meta’s commitment to making advanced AI accessible and easy to implement. By providing these tools and partnerships, Meta is enabling developers, researchers, and businesses of all sizes to leverage the power of Llama 3.2 in their applications and services.
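To show what Retrieval-Augmented Generation involves at its simplest, the sketch below retrieves the most relevant snippets for a question and packs them into a prompt. It deliberately does not use the Llama Stack API. The word-overlap scoring, the prompt wording, and the function names are all hypothetical stand-ins for a real retriever and prompt template.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many lowercase words they share with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query, documents, top_k=2):
    """Assemble a prompt that places retrieved context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Llama 3.2 supports 128K context",
    "Bananas are yellow",
    "The 1B model runs on device",
]
print(build_rag_prompt("What context length does Llama 3.2 support", docs, top_k=1))
```

A production system would replace the word-overlap score with embedding similarity over a vector index, but the overall shape (retrieve, then generate with the retrieved text in the prompt) stays the same.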

Comparison with Competitors

Llama 3.2 demonstrates competitive performance against leading models in the AI landscape:

- Vision Tasks:

  - Competitive with Claude 3 Haiku and GPT-4o mini on image recognition and a range of visual understanding benchmarks, according to Meta's evaluations

  - Excels in tasks such as image captioning, visual question answering, and document analysis

- Language Tasks:

  - The 3B model surpasses Gemma 2 2.6B and Phi 3.5-mini on:

    - Instruction following

    - Text summarization

    - Prompt rewriting

    - Tool use capabilities

  - The 1B model is competitive with Gemma in its size class, offering strong performance for its compact size

- Multimodal Capabilities:

  - Offers integrated text and image understanding, setting it apart from many text-only models

  - Provides a unified model for tasks that previously required separate vision and language models

- Efficiency:

  - Lightweight models (1B and 3B) offer competitive performance for on-device applications

  - Optimized for edge computing, potentially outperforming larger models in low-resource environments

- Customizability:

  - As an open-source model, Llama 3.2 offers greater flexibility for fine-tuning and adaptation compared to closed-source competitors

- Accessibility:

  - Available at no cost for research and commercial use, subject to the terms of the Llama 3.2 Community License, unlike many proprietary models

  - Supported across a wide range of hardware and software platforms

- Ethical Considerations:

  - Incorporates built-in safety measures and responsible AI practices

  - Transparent development process allows for community oversight and improvement

While Llama 3.2 shows impressive performance across various benchmarks, it’s important to note that the AI landscape is rapidly evolving. Different models may excel in specific niches or applications. The open nature of Llama 3.2 allows for continuous improvement and adaptation to new challenges, potentially closing gaps with closed-source models over time.

Responsible AI and Safety Measures

Meta has implemented robust safety measures to ensure responsible AI development and usage with Llama 3.2. These include the introduction of Llama Guard 3, available in both 11B Vision and optimized 1B versions, designed to filter input prompts and output responses for enhanced safety in multimodal interactions. The company provides a comprehensive Responsible Use Guide and employs safety-focused training methods, including multiple rounds of alignment through supervised fine-tuning, rejection sampling, and direct preference optimization. Synthetic data generation is carefully processed and filtered to maintain high-quality outputs while adhering to safety standards.
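Of the alignment methods named above, direct preference optimization has a compact loss that is easy to show. The sketch below computes the standard DPO loss for a single preference pair from sequence log-probabilities under the model being trained and under a frozen reference model. The `beta` value and the example numbers are illustrative assumptions; Meta's actual hyperparameters are not given here.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the total log-probability a model assigns to a response.
    The loss falls as the policy favors the chosen response more strongly
    than the reference model does.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) written as log(1 + exp(-logits)).
    return math.log1p(math.exp(-logits))


# Policy identical to the reference: loss equals log(2).
print(dpo_loss(-1.0, -2.0, -1.0, -2.0))
# Policy has shifted probability toward the chosen response: loss is lower.
print(dpo_loss(-0.5, -3.0, -1.0, -2.0))
```

Because the loss depends only on log-probability differences relative to the reference model, DPO needs no separately trained reward model, which is one reason it is popular for preference alignment.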

Availability and Open-Source Benefits

Llama 3.2’s open-source nature offers significant advantages to the AI community. The model is widely accessible, available for download on llama.com and Hugging Face, and supported by major cloud and hardware providers. This accessibility democratizes cutting-edge AI technology, allowing developers and researchers to easily integrate Llama 3.2 into their workflows. The open-source approach enables customization and fine-tuning for specific use cases, fostering innovation across industries. It also allows for community scrutiny, potentially leading to faster improvements and bug fixes. From an educational standpoint, Llama 3.2 provides valuable insights into large language model architecture and training, advancing AI research and education. The cost-efficiency of open-source models makes advanced AI capabilities more accessible to smaller companies and startups, leveling the playing field. Additionally, the collaborative environment encouraged by open-source development can accelerate innovation in AI. Finally, this approach promotes transparency and ethical development of AI technologies, addressing concerns about bias, fairness, and accountability in AI systems.

Future of AI: Llama 3.2’s Impact

Looking to the future, Llama 3.2 is set to have a profound impact on AI development and application. It promises to democratize AI by making advanced capabilities accessible to a broader range of developers and organizations. The integration of multimodal processing in a single model paves the way for more intuitive and versatile AI applications across industries. Advancements in edge AI, driven by the lightweight models, will likely spur innovation in IoT, smart home technology, and mobile applications. The model’s ability to run on-device enables more personalized AI experiences while maintaining user privacy, potentially expanding AI accessibility in emerging markets with limited internet connectivity. Furthermore, the open-source approach of Llama 3.2 promotes transparency and community oversight, potentially leading to more ethical and unbiased AI systems. As these technologies become more accessible and capable, we may see new paradigms of human-AI collaboration emerge, enhancing productivity and problem-solving capabilities across various fields, from scientific research to creative endeavors.