Why DeepSeek Janus-Pro 1B and 7B Outperform OpenAI DALL-E 3 in Image Generation Tests

Introduction to DeepSeek Janus-Pro 1B and 7B

DeepSeek AI has released Janus-Pro 7B under its DeepSeek license. It is a multimodal AI model that, according to DeepSeek’s reported results, beats DALL-E 3 and Stable Diffusion on text-to-image benchmarks such as GenEval and DPG-Bench. It follows DeepSeek’s earlier DeepSeek R1 model, known for strong reasoning. DeepSeek also released a research paper explaining Janus-Pro’s design and abilities. Although a cyberattack occurred during the launch, Janus-Pro gained attention quickly because of its performance and open-source design. This report analyzes Janus-Pro 1B and 7B: their design, training steps, comparison with other models, distinctive features, limits, and likely real-world uses.


Architecture of Janus-Pro 1B and 7B

Janus-Pro is an autoregressive framework that unifies multimodal understanding and generation. It addresses a weakness of earlier unified models, which used the same visual encoder for all tasks, by decoupling visual encoding into separate paths while still processing everything with one transformer. The split reduces the conflict between the visual encoder’s roles in understanding and in generation, and it makes the system more flexible, which improves both efficiency and results and makes the model simpler to extend. The model was built and tested with HAI-LLM, a lightweight and efficient distributed training framework built on PyTorch.

Key Architectural Components

Dual Encoders: Janus-Pro uses two separate encoders, one for multimodal understanding and one for text-to-image generation, which reduces interference and lets each task be optimized on its own. The understanding encoder uses SigLIP to extract semantic features from images, while the generation encoder applies a VQ tokenizer to convert images into discrete tokens.

Centralized Decoding Module: A single autoregressive transformer processes the sequences produced by both encoders, so understanding and generation share one backbone.

Parameter Efficiency: This flexible setup, with 1B or 7B parameter sizes, adapts well to different computing needs and budgets.
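The decoupled design described above can be illustrated with a toy routing sketch. It is not Janus-Pro’s implementation: the `Request` class and the three stand-in functions are hypothetical, and the "encoders" are trivial arithmetic in place of SigLIP and the VQ tokenizer. The sketch only shows the idea that an image takes a different path depending on the task, while both paths feed one shared sequence.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Token = Tuple[str, object]  # (source path, value); a toy stand-in for an embedding


@dataclass
class Request:
    task: str                                # "understand" or "generate"
    text: str
    image: Optional[List[List[int]]] = None  # toy grayscale image, rows of 0-255 ints


def understanding_encoder(image: List[List[int]]) -> List[Token]:
    """Stand-in for the SigLIP path: one continuous feature per image row."""
    return [("und", sum(row) / (255.0 * len(row))) for row in image]


def generation_tokenizer(image: List[List[int]], codebook_size: int = 16) -> List[Token]:
    """Stand-in for the VQ path: map every pixel to a discrete codebook id."""
    return [("gen", pixel * codebook_size // 256) for row in image for pixel in row]


def text_tokenizer(text: str) -> List[Token]:
    return [("txt", word) for word in text.split()]


def build_sequence(req: Request) -> List[Token]:
    """Route the image through the path that matches the task, then join it
    with the text into the single sequence the shared transformer would see."""
    tokens = text_tokenizer(req.text)
    if req.image is None:
        return tokens
    if req.task == "understand":
        return understanding_encoder(req.image) + tokens
    if req.task == "generate":
        return tokens + generation_tokenizer(req.image)
    raise ValueError(f"unknown task: {req.task}")


if __name__ == "__main__":
    toy_image = [[0, 255], [128, 64]]
    print(build_sequence(Request("understand", "what is this", toy_image)))
    print(build_sequence(Request("generate", "a cat", toy_image)))
```

Because the two paths never share weights in this sketch, changing one stand-in encoder leaves the other untouched, which is the flexibility the split design is meant to provide.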

Model Specifications:

Context Window: 4096 tokens
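To get a feel for what a 4096-token window means once images are involved, the following back-of-the-envelope sketch estimates how many tokens remain for text. It assumes a 384×384 image and one token per 16×16 pixel patch; the patch size is an assumption made for illustration and is not stated in this article, so treat the resulting numbers as rough.

```python
CONTEXT_WINDOW = 4096  # tokens, from the specification above
IMAGE_SIDE = 384       # pixels per side; assumed input resolution
PATCH_SIDE = 16        # pixels per token side; an assumption, not from the article


def image_token_count(side: int = IMAGE_SIDE, patch: int = PATCH_SIDE) -> int:
    """Number of tokens for one square image under the assumed patch size."""
    if side % patch != 0:
        raise ValueError("image side must be a multiple of the patch side")
    per_side = side // patch
    return per_side * per_side


def remaining_text_budget(num_images: int, context: int = CONTEXT_WINDOW) -> int:
    """Tokens left for text after reserving space for the given number of images."""
    used = num_images * image_token_count()
    if used > context:
        raise ValueError("images alone exceed the context window")
    return context - used


if __name__ == "__main__":
    print(image_token_count())       # tokens per image under these assumptions
    print(remaining_text_budget(1))  # tokens left for the prompt and the answer
```

Under these assumptions a single image takes 576 tokens and leaves 3520 for text.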

Janus-Pro 1B: Training Process

Janus-Pro uses a three-stage training process:

Pretraining the Adaptors: In this stage, the model trains its image adaptors and image head on data such as ImageNet. This early stage is key to helping Janus-Pro learn how neighboring pixels depend on one another.

Unified Pretraining: In this stage, different data types are combined so that the model learns understanding and generation together instead of being limited to single-purpose data.

Supervised Fine-Tuning: Here, the model is fine-tuned on a data mix with a 5:1:4 ratio of multimodal, text, and text-to-image sources.
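As a small worked example of the 5:1:4 ratio from the fine-tuning stage, the sketch below splits a batch across the three data sources. The function name, the dictionary keys, and the largest-remainder rounding rule are illustrative choices, not details from DeepSeek’s training code.

```python
from typing import Dict

# Ratio from the supervised fine-tuning stage: multimodal : text : text-to-image
SFT_MIX: Dict[str, int] = {"multimodal": 5, "text": 1, "text_to_image": 4}


def allocate(batch_size: int, mix: Dict[str, int] = SFT_MIX) -> Dict[str, int]:
    """Split batch_size across sources in proportion to mix.

    Uses integer arithmetic and hands leftover samples to the sources with
    the largest remainders, so the counts always sum to batch_size.
    """
    total = sum(mix.values())
    counts = {name: batch_size * weight // total for name, weight in mix.items()}
    remainders = {name: batch_size * weight % total for name, weight in mix.items()}
    leftover = batch_size - sum(counts.values())
    by_remainder = sorted(mix, key=lambda name: remainders[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


if __name__ == "__main__":
    print(allocate(1000))  # an even split: 500 / 100 / 400
    print(allocate(7))     # a batch that does not divide evenly
```

For batch sizes that are multiples of 10 the split is exact; for others the rounding rule decides where the extra samples go, which is a design choice a real pipeline might make differently.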

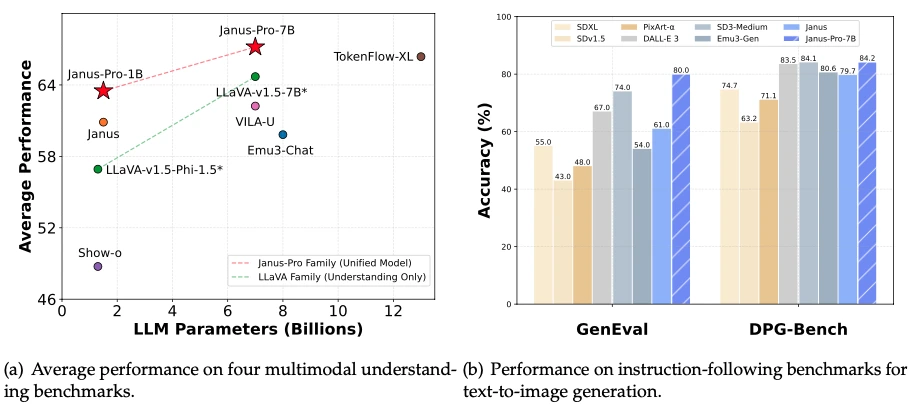

Janus-Pro 1B: Comparison with Other Models

Janus-Pro 7B has scored higher than other image generation models, such as DALL-E 3 and Stable Diffusion, in many key tests.

Key Advantages:

Decoupled Visual Encoding: It allows specialized optimization, reduces conflicts, and boosts output quality. This is a shift from older multimodal systems that rely on shared encoders, which leads to better speed and results.

Optimized Training Strategy: It leads to faster and more effective learning.

Expanded and High-Quality Dataset: It improves stability and output accuracy. The dataset includes 72 million aesthetic samples and 90 million multimodal understanding entries, which gives it breadth.

Scalability: Moving from 1B to 7B parameters improves the model’s ability to handle varied inputs and tasks.

Cost-Effectiveness: Janus-Pro was built at a much lower total cost than its American rivals.

Qualitative Comparisons

Although the table shows Janus-Pro 7B ahead on some tests, other factors matter too. For instance, in a head-to-head comparison with DALL-E 3 run by Analytics Vidhya, Janus-Pro 7B did better at explaining memes concisely and accurately. DALL-E 3, however, excelled at tasks that need deeper context and background knowledge, such as finding an image’s source or giving its history. These findings show that each model has strengths suited to different use cases.

Unique Features and Capabilities

Multimodal Understanding and Generation: Janus-Pro does well at both reading and producing images from text, so it fits tasks like visual Q&A, image captions, and creative content creation in many diverse fields.

Advanced Instruction Following: The model is good at following complex, dense prompts and capturing fine details in the images it creates. This ability makes it stand out and suits it to demanding real-world tasks where accuracy matters.

Open-Source Nature: Janus-Pro is open-source, which draws community support. Researchers and developers can explore, modify, and enhance it for varied uses.

Fine-tuning: Janus-Pro lets users fine-tune the model on custom data, so they can tailor it to specific fields or tasks.

Limitations and Drawbacks

Limited Input Resolution: Image analysis is capped at 384×384 pixels, which might hurt results in detailed tasks like OCR. This limit can be a problem for fields that need high-resolution analysis, such as medical scans or satellite pictures. Thus, it may not fit tasks requiring sharp image details or clarity.

Fine Detail Generation: Though the model’s images are semantically strong, they might miss small details because of low resolution and the vision tokenizer’s reconstruction losses. This can be a downside where exact, finely rendered output is key, such as making lifelike art or producing images for scientific displays. These fields often demand high fidelity and detailed visual accuracy.
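The effect of the 384×384 cap on tasks like OCR can be estimated with simple arithmetic. The sketch below assumes the image is shrunk so that its longer side fits 384 pixels with the aspect ratio preserved; the real preprocessing may crop or pad instead, so this is only an approximation, and the function names are hypothetical.

```python
MAX_SIDE = 384  # input resolution cap in pixels, from the limitation above


def downscale_factor(width: int, height: int, max_side: int = MAX_SIDE) -> float:
    """Scale applied so the longer side fits max_side; never upscales.

    Assumes aspect-preserving resizing, which may differ from the model's
    actual preprocessing.
    """
    return min(1.0, max_side / max(width, height))


def scaled_feature_height(feature_px: float, width: int, height: int) -> float:
    """Height in pixels of a feature (for example a line of text) after resizing."""
    return feature_px * downscale_factor(width, height)


if __name__ == "__main__":
    # A 3072x2304 scan with text that is 24 pixels tall in the original.
    print(downscale_factor(3072, 2304))
    print(scaled_feature_height(24, 3072, 2304))
```

In this example the scan is shrunk to one eighth of its size, so 24-pixel text ends up about 3 pixels tall, which is too small to read reliably.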

Janus-Pro 1B: Potential Applications and Use Cases

Content Creation: Janus-Pro can make high-quality images for many creative uses, like ads, illustrations, and concept art. Its ability to follow prompts and produce semantically accurate images makes it a helpful resource for artists and designers.

Image Analysis and Understanding: Its multimodal skills can help with tasks like image captioning, visual Q&A, and object recognition. These apply in areas such as online shopping, social platforms, and content moderation.

Human-Computer Interaction: Janus-Pro could make human-computer communication smoother by letting people combine pictures and text in a more natural way. For example, it might power AI assistants that read and react to visual cues, or interactive learning tools that mix text and images.

Janus-Pro 1B: Conclusion

Janus-Pro 1B and 7B are big steps forward in multimodal AI, giving a strong, flexible way to understand and create images. Their split design, improved training strategy, and larger dataset drive high scores on many tests. While they have some flaws, the open-source approach and wide range of uses make them helpful for researchers, developers, and creators.

Janus-Pro’s arrival shows a possible change in the AI world. DeepSeek, a newer team, has managed to rival well-known names like OpenAI and Stability AI by building a strong model at lower cost. This might spark more competition and innovation, possibly making advanced AI models more accessible and broadening AI’s real-world use.

Yet, stronger and more open AI models like Janus-Pro bring ethical questions. As they grow smarter, it’s vital to face problems tied to bias, false data, and misuse. Making sure these potent AI tools are built and used carefully is key to reaping their gains and reducing possible harms. Their availability needs rules and responsibility.

As DeepSeek keeps improving Janus-Pro, it may become a top player in the growing multimodal AI field. Its next stages and uses in many areas will likely affect how we engage with AI for years. This progress might change our daily tools, research methods, and even creative pursuits.

FAQs

1. Is Janus-Pro 7B open-source, and can it be customized?

Yes, Janus-Pro is open-source, allowing researchers and developers to modify and fine-tune it for specific tasks or industries.

2. How does Janus-Pro 7B compare to DALL-E 3 and Stable Diffusion?

Janus-Pro 7B outperforms DALL-E 3 and Stable Diffusion in benchmarks, especially in tasks like meme explanations and prompt-following accuracy. However, DALL-E 3 excels in tasks requiring deep contextual knowledge (e.g., image history).

3. What ethical concerns does Janus-Pro 1B raise?

Like all advanced AI models, Janus-Pro risks bias, misinformation, and misuse. DeepSeek emphasizes responsible development and calls for ethical guidelines to mitigate harm.