Introduction to the Switch Transformer Model: Pioneering Scalable and Efficient NLP

The Switch Transformer, introduced by Google Research, represents a significant innovation in large-scale Natural Language Processing (NLP). Its largest configuration has roughly 1.6 trillion parameters, yet the model keeps computational demands in check. Leveraging a mixture-of-experts (MoE) approach, the Switch Transformer activates only a single expert sub-network for each input token. This differs from dense models, which apply every parameter to every input, and from earlier MoE designs, which typically route each token to two or more experts. This selective approach allows it to scale effectively, manage hardware costs, and perform complex NLP tasks efficiently and accurately.

In this post, we’ll delve into the mechanics behind the Switch Transformer, its open-source framework, real-world applications, challenges, and future directions in AI research. Let’s explore how the Switch Transformer is redefining NLP model design.

Mechanics of the Switch Transformer

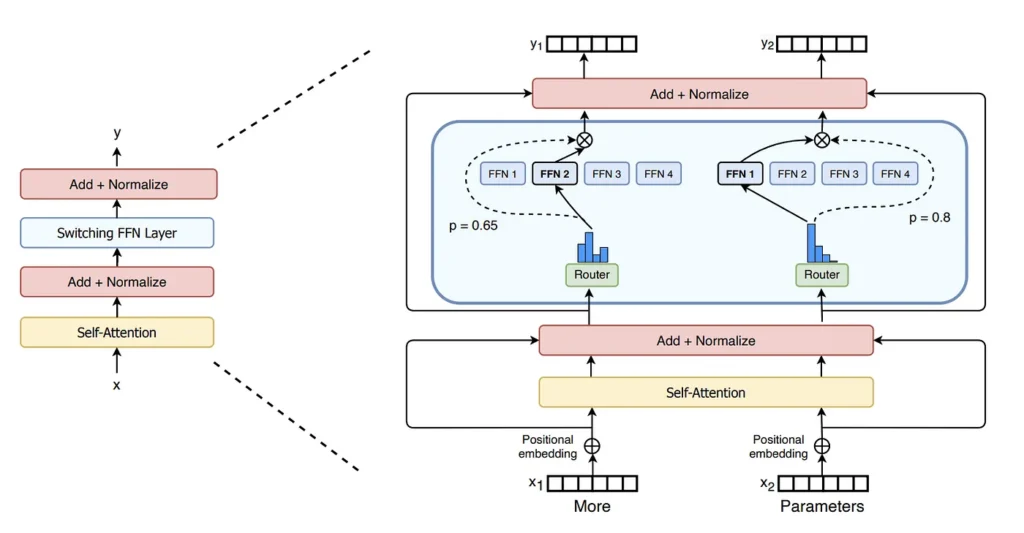

The core innovation in the Switch Transformer lies in its mixture-of-experts (MoE) architecture. In an MoE-based model, instead of activating all parts of the network, only a subset of specialized “experts” processes each input. Here’s a closer look at how these components function:

- Expert Layers: In the Switch Transformer, each expert is a feed-forward network (FFN) that takes the place of the single dense FFN in a standard Transformer layer; the attention sublayers remain shared across all tokens. Together the experts give the model a very large parameter count without the computational burden of running every expert on each input.

- Switch Routing Mechanism: A small learned router scores every expert for each input token and sends the token to the single highest-scoring expert (top-1 routing). Because only one expert runs per token, the compute per token stays roughly constant as experts are added, so the parameter count can grow without a proportional increase in computation. Memory requirements still grow with the number of experts, which is why experts are usually spread across devices.

This mechanism scales efficiently because each token uses only a fixed slice of the model’s parameters, which has been especially beneficial for large-scale applications. The original Switch Transformer work also addresses training instability and sensitivity to initialization scale, both of which initially posed challenges in large-scale model setups.
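To make the routing step concrete, here is a minimal sketch in plain Python, chosen for readability rather than speed. It is not the T5X implementation: the function names `switch_route` and `switch_layer`, the toy router weights, and the two stand-in experts are all illustrative assumptions. It shows the router turning per-expert scores into probabilities, picking the single highest-probability expert, and scaling that expert’s output by the router probability.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switch_route(token, router_weights):
    """Return (expert_index, gate_probability) for one token.

    router_weights holds one row per expert (hypothetical layout);
    each row is dotted with the token to produce that expert's logit.
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    # Top-1 routing: keep only the expert with the highest probability.
    expert = max(range(len(probs)), key=probs.__getitem__)
    return expert, probs[expert]


def switch_layer(tokens, router_weights, experts):
    """Send each token to exactly one expert and scale by its gate value."""
    outputs = []
    for token in tokens:
        expert, gate = switch_route(token, router_weights)
        expert_out = experts[expert](token)  # only this expert runs
        outputs.append([gate * v for v in expert_out])
    return outputs


# Toy setup (assumed values): two experts, two-dimensional tokens.
router_weights = [[1.0, 0.0], [0.0, 1.0]]
experts = [
    lambda t: [2.0 * v for v in t],  # stand-in for expert 0's FFN
    lambda t: [v + 1.0 for v in t],  # stand-in for expert 1's FFN
]
tokens = [[3.0, 0.0], [0.0, 3.0]]
outputs = switch_layer(tokens, router_weights, experts)
```

In this toy setup the first token is routed to expert 0 and the second to expert 1. A real layer would use learned router weights and full feed-forward experts, and would batch tokens per expert instead of looping one token at a time.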

Open-Source Accessibility and Available Frameworks

Google has embraced open-source collaboration by releasing the Switch Transformer on T5X, a JAX-based framework. This open-source approach allows researchers worldwide to experiment, modify, and optimize the Switch Transformer architecture across different model sizes. Here’s how this open-source availability benefits the research community:

- T5X and JAX: T5X provides a robust platform for running and experimenting with the Switch Transformer. Built on JAX, a library designed for high-performance machine learning research, T5X supports training at a range of scales, making it easier to experiment with MoE architectures on both smaller and larger models.

- Mesh-TensorFlow: The original model was trained with Mesh-TensorFlow, which supports data parallelism, model parallelism, and combinations of the two, enabling researchers to work with massive models more efficiently. This framework simplifies the distribution of computation across devices, so that large-scale experimentation becomes feasible even outside Google’s infrastructure.

- Research and Development Encouragement: By open-sourcing the Switch Transformer, Google encourages researchers to explore how MoE-based models perform on a range of NLP benchmarks, such as the Stanford Question Answering Dataset (SQuAD) and the General Language Understanding Evaluation (GLUE) benchmark.

Real-World Applications and Use Cases

The Switch Transformer has demonstrated competitive performance across various language tasks. The authors report pre-training speedups of up to 7x over T5 baselines given the same computational resources, with the gains carrying over to a range of downstream tasks. Here are some prominent use cases:

- Question Answering and Language Translation: In question answering and translation tasks, the Switch Transformer achieves high accuracy, effectively capturing language nuances while minimizing computation.

- Sentiment Analysis: The model can be applied to sentiment analysis to gauge customer sentiment, which is particularly useful for customer service and support scenarios where NLP models interpret human emotions.

- On-Device AI for Resource-Constrained Environments: A sparse model’s full parameter set takes a lot of memory, but its low per-token compute has drawn interest in Switch Transformer architectures for on-device AI in mobile and embedded systems. The original paper also explores distilling Switch models into much smaller dense models, which is one route to bringing complex language models to devices with limited hardware and enabling real-time NLP applications in low-power environments.

These applications highlight the model’s versatility in handling a wide range of NLP tasks, making it a valuable tool for real-world, resource-efficient NLP applications.

Challenges and Future Directions

While the Switch Transformer model delivers substantial improvements in computational efficiency, the MoE architecture introduces unique challenges:

- Load Imbalance: Since only one expert is activated per input token, some experts may become overloaded while others remain underutilized. This uneven distribution wastes hardware and can cause tokens to be dropped once an expert is full. The Switch Transformer counters it with an auxiliary load-balancing loss that encourages uniform routing and with a fixed per-expert capacity.

- Training Stability: Large MoE models often face stability issues during training, especially when operating in reduced-precision formats such as bfloat16. Addressing this has involved computing the router in higher precision while keeping the rest of the model in low precision, and using a smaller initialization scale, to ensure smoother training across diverse tasks.
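The load-imbalance problem can be made concrete with a short sketch in plain Python. It follows the auxiliary loss described in the Switch Transformer paper, alpha * N * sum(f_i * P_i), where N is the number of experts, f_i is the fraction of tokens routed to expert i, and P_i is the mean router probability for expert i. The function names, the example probabilities, the default `capacity_factor`, and the rounding-up in `expert_capacity` are illustrative assumptions, not the released code.

```python
import math


def load_balancing_loss(router_probs, alpha=0.01):
    """Auxiliary loss that is smallest when routing is uniform.

    router_probs: one list of per-expert probabilities per token.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])

    # f[i]: fraction of tokens whose top-1 expert is i.
    counts = [0] * num_experts
    for probs in router_probs:
        counts[max(range(num_experts), key=probs.__getitem__)] += 1
    f = [c / num_tokens for c in counts]

    # p[i]: mean router probability assigned to expert i.
    p = [
        sum(probs[i] for probs in router_probs) / num_tokens
        for i in range(num_experts)
    ]

    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p))


def expert_capacity(tokens_per_batch, num_experts, capacity_factor=1.25):
    """Maximum tokens one expert accepts per batch (rounding up is assumed)."""
    return math.ceil(tokens_per_batch / num_experts * capacity_factor)


# Assumed example router outputs for two tokens and two experts.
balanced = [[0.9, 0.1], [0.1, 0.9]]  # one token per expert
skewed = [[0.9, 0.1], [0.8, 0.2]]    # both tokens go to expert 0

balanced_loss = load_balancing_loss(balanced)
skewed_loss = load_balancing_loss(skewed)
capacity = expert_capacity(tokens_per_batch=1024, num_experts=8)
```

The skewed routing yields a larger loss than the balanced one, so adding this term to the training objective nudges the router toward spreading tokens evenly. A capacity factor above 1.0 leaves headroom for mild imbalance at the cost of extra computation and memory.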

Looking ahead, future development may focus on improving the model’s adaptability across different AI tasks. Enhancements may include enabling multimodal learning and cross-lingual capabilities to extend the model’s utility beyond single-language NLP.

To learn more about the Transformer architecture, check out our guide, Exploring Transformer Architecture: A Comprehensive Guide.

Implications for Large-Scale AI Models and the Future of NLP

The Switch Transformer illustrates a potential shift in the design of large-scale AI models by prioritizing modularity and efficiency. The MoE approach serves as a blueprint for the future of scalable NLP models, setting the stage for flexible architectures that can adapt to specific tasks and resource constraints. This direction represents a significant trend in AI research, where scalable, modular models may eventually support:

- Dynamic Optimization: Future transformer architectures may adopt a modular approach, allowing components to be dynamically optimized or activated based on the task requirements, which could further improve computational efficiency.

- Generalizable AI Systems: Modular, expert-driven systems could enhance the adaptability of AI models, leading to more generalized, multi-functional AI capable of handling diverse tasks within a single architecture.

The MoE architecture employed by the Switch Transformer represents an efficient and scalable design that may inspire future innovations, ultimately contributing to the development of more generalizable AI.

Conclusion

The Switch Transformer marks a pivotal advancement in NLP, demonstrating that large-scale language models can achieve exceptional performance without prohibitive computational demands. With its MoE design and selective activation of expert networks, the model sets a new standard for efficiency in AI research. The Switch Transformer’s open-source release on T5X fosters further innovation, while its impressive real-world applications and future potential underscore the model’s significance in the evolving landscape of NLP.

As we look to the future, the Switch Transformer’s architecture signals a shift toward modular, adaptable, and efficient models, a trend likely to shape the next generation of AI. Whether in customer service, on-device AI, or complex NLP tasks, the Switch Transformer exemplifies how strategic model design can drive AI capabilities forward responsibly and sustainably.