Reinforcement Learning: A Full Overview

Reinforcement learning (RL) is a type of machine learning in which an agent learns to make decisions by interacting with its environment. It resembles the way humans learn in real life: by trial and error. Think of a child learning to ride a bicycle. They wobble, fall, get up, and try again. With every attempt, they get better at balancing, steering, and pedaling until, eventually, they can ride easily.

Likewise, in RL, an agent learns by taking actions, observing their consequences, and adjusting its behavior to achieve a desired goal. What distinguishes RL from other machine learning paradigms is that learning does not depend on labeled data or predefined rules; instead, the agent learns from the reward signals it receives through its own experience.

Key Concepts in Reinforcement Learning

In order to understand how RL works, let’s break down some of the main concepts:

- Agent: The learner and decision-maker in an RL system. It could be a robot learning how to navigate through a maze, or a program learning how to play a game.

- Environment: The world or system that the agent interacts with. It might be a physical environment, such as a room, or it might be a virtual environment, such as a game simulator.

- State: The situation of the environment at a given moment. For instance, during a chess game, it is the current position of every piece on the board.

- Action: A move the agent makes that affects the environment. In the chess example, an action is moving a piece.

- Reward: Feedback from the environment indicating how good or bad an action was. Rewards can be positive for desirable actions and negative for undesirable ones. The reward function defines what the agent is ultimately trying to achieve, so designing an effective one is among the harder problems in RL.

- Policy: The strategy or set of rules that the agent follows to choose actions. It maps states to actions, essentially telling the agent what to do in each situation.

- Value Function: Estimates the long-term value of being in a particular state or taking a specific action. It helps the agent determine which actions are likely to lead to the most reward in the future.
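One common way to make "long-term value" concrete is the discounted return: the sum of future rewards, with later rewards weighted less. The sketch below is only an illustration. The discount factor `gamma`, the helper name `discounted_return`, and the example rewards are assumptions, not something this article specifies.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each discounted by gamma once per time step."""
    total = 0.0
    # Work backwards so each step computes G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: no reward for two steps, then a reward of 1.
episode_rewards = [0.0, 0.0, 1.0]
value_estimate = discounted_return(episode_rewards, gamma=0.9)
```

A value function can be thought of as the agent's estimate of this quantity before the episode has actually played out.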

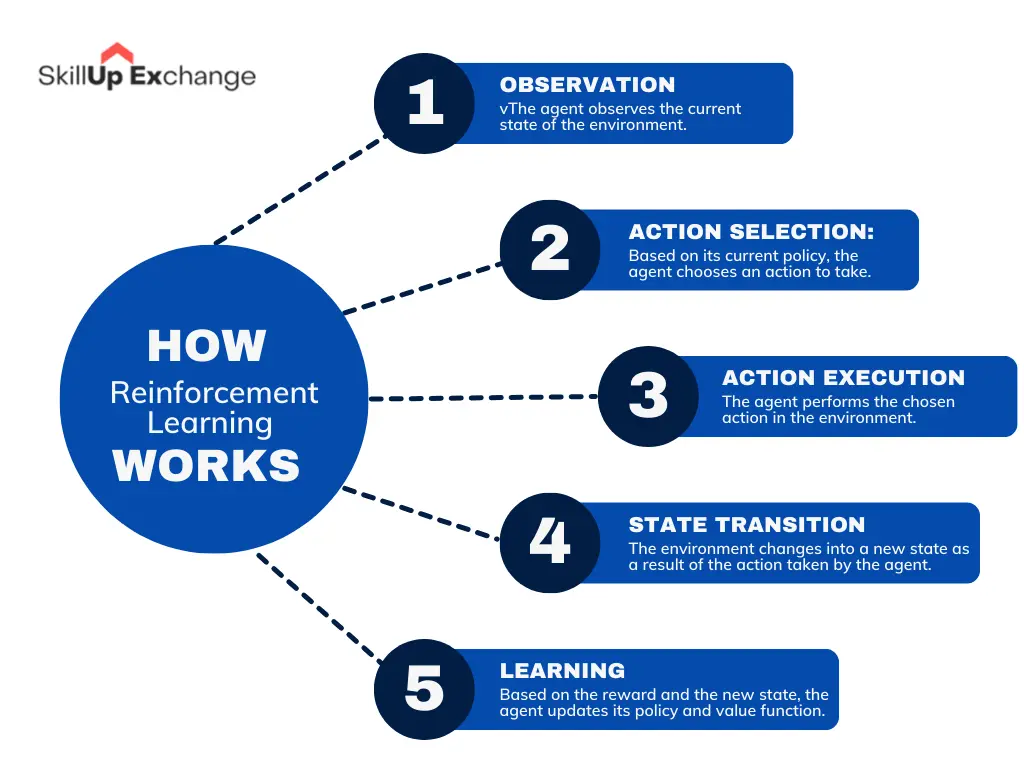

How Reinforcement Learning Works

The central idea of RL is learning by doing. The agent interacts with the environment in a repeating cycle: it takes an action, observes the resulting state and reward, and uses that feedback to improve. The cycle can be summarized as follows:

- Observation: The agent observes the current state of the environment.

- Action Selection: Based on its current policy, the agent chooses an action to take.

- Action Execution: The agent performs the chosen action in the environment.

- State Transition and Reward: The environment moves to a new state as a result of the agent’s action and returns a reward.

- Learning: Based on the reward and the new state, the agent updates its policy and value function.

This loop continues until the agent achieves its goal or until a satisfactory performance level is reached.
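The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `CorridorEnv` is a made-up toy environment, the `reset`/`step` method names and the reward values are hypothetical choices, and the learning update is left as a comment rather than implemented.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: walk from cell 0 to the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the position stays in the corridor.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one observe-act-reward loop and return the total reward."""
    state = env.reset()                          # observation
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                   # action selection
        state, reward, done = env.step(action)   # execution, transition, reward
        total_reward += reward                   # a learning update would go here
        if done:
            break
    return total_reward


rng = random.Random(0)


def random_policy(state):
    """Ignore the state and pick a direction at random."""
    return rng.choice([-1, 1])


episode_reward = run_episode(CorridorEnv(), random_policy)
```

Swapping `random_policy` for a policy that improves over time is what turns this loop into actual learning.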

A central challenge in this kind of learning is the exploration-exploitation dilemma. The agent has to trade off exploring new actions and states in search of better strategies against exploiting its current knowledge to maximize reward. For instance, consider a robot learning to navigate a maze. Early on, it may explore different paths at random, even though some of them lead to dead ends.

This exploration helps the robot learn the structure of the maze. Once it finds a path to the goal, it can exploit that knowledge and follow the same path to reach the goal quickly, although committing too early risks missing a shorter route. Balancing exploration and exploitation is critical to learning efficiently in RL.
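One widely used way to strike this balance is an epsilon-greedy rule: with a small probability the agent picks a random action, and otherwise it picks the action it currently believes is best. The article does not prescribe this strategy, so treat the sketch below as one possible approach; the function name, the `epsilon` parameter, and the example estimates are assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


# Hypothetical value estimates for three actions.
estimates = [0.2, 0.8, 0.5]
greedy_action = epsilon_greedy(estimates, epsilon=0.0)   # never explores
```

In practice `epsilon` is often started high and reduced over time, so the agent explores a lot early on and exploits more as its estimates improve.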

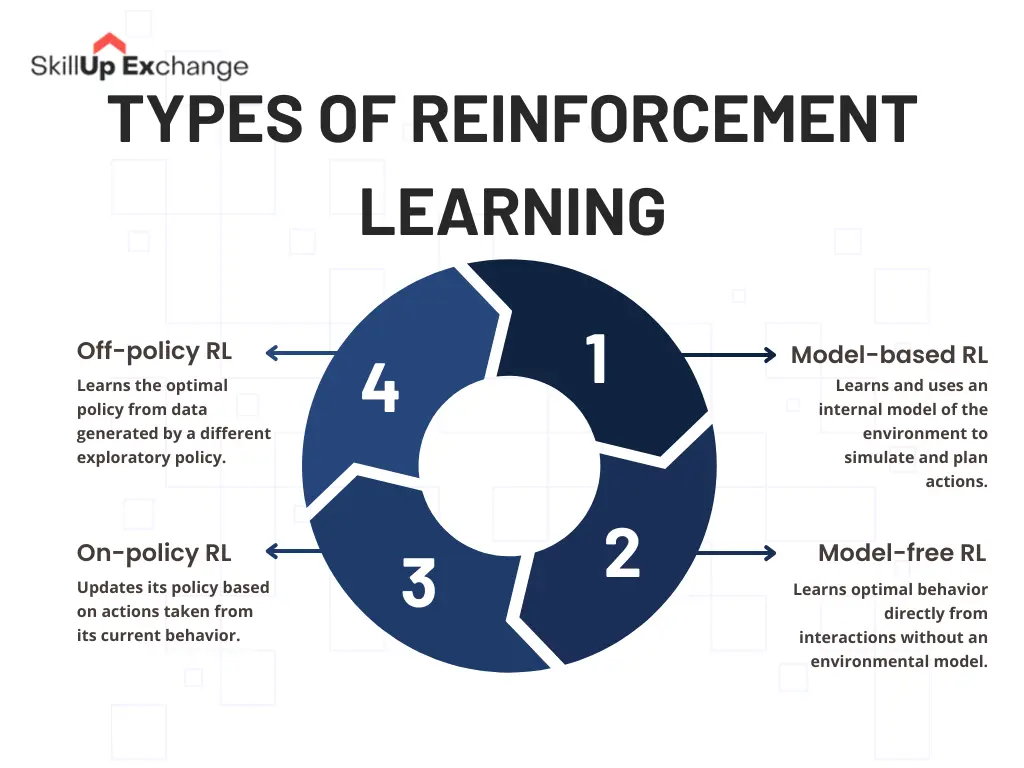

Types of Reinforcement Learning

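RL methods are commonly grouped along two lines: whether the agent builds a model of the environment, and whether it learns from the policy it is currently following. Each approach has its strengths and weaknesses: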

- Model-based RL: The agent learns a model of the environment, which enables it to predict the consequences of its actions and plan accordingly. This approach tends to need less experience to learn, but it depends on the model being accurate. For example, in a self-driving car scenario, a model-based RL agent would learn the rules of the road and the physics of car movement to predict how its actions will affect its position and speed.

- Model-free RL: The agent learns directly from experience without building a model of the environment. This approach is more flexible but usually requires more data to learn effectively. Consider an agent learning to play a video game. A model-free RL agent would learn by playing the game repeatedly and observing the outcomes of its actions without explicitly modeling the game’s internal mechanics.

- On-policy RL: The agent learns from experience gathered while following its current policy. SARSA is a well-known on-policy algorithm. When it updates its value estimates, it uses the next action actually chosen by the current policy, rather than the best of all possible next actions.

- Off-policy RL: The agent learns about one policy while its actions are chosen by a different one, which lets it explore a wider range of behaviors. Q-learning is the best-known off-policy algorithm. It estimates the value of taking a given action in a given state and updates that estimate using the best available next action, regardless of which action the policy being followed actually takes.
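The on-policy versus off-policy distinction is easiest to see by putting the SARSA and Q-learning update rules side by side. The following is a simplified tabular sketch: the learning rate `alpha`, the discount factor `gamma`, the dictionary-based Q-table, and the example transition are all illustrative assumptions, and terminal states are not handled.

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """On-policy: bootstrap from the action the policy actually took next."""
    target = reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (target - q[(state, action)])


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Off-policy: bootstrap from the best-valued next action."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])


# Hypothetical Q-tables in which "right" looks better than "left" in state 1.
q_sarsa = defaultdict(float, {(1, "left"): 0.0, (1, "right"): 1.0})
q_qlearn = defaultdict(float, {(1, "left"): 0.0, (1, "right"): 1.0})

# Same transition for both, but the policy happened to pick "left" next.
sarsa_update(q_sarsa, 0, "right", 0.0, 1, "left")
q_learning_update(q_qlearn, 0, "right", 0.0, 1, ["left", "right"])
```

After this single step the two tables differ: SARSA's estimate reflects the exploratory "left" move that was actually taken, while Q-learning's estimate assumes the best move will be taken from state 1 onward.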

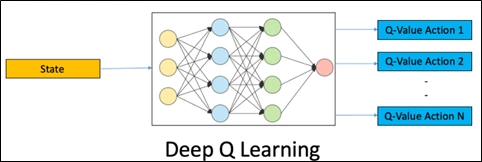

Deep Q-Networks (DQNs)

Deep Q-networks (DQNs) are an RL method that uses a deep neural network to approximate the Q-function. The Q-function estimates the expected future reward for taking a particular action in a given state.
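For readers who prefer notation, the Q-function is usually written along the following lines. The symbols here are assumptions based on common textbook conventions rather than anything defined in this article: a policy \pi, a discount factor \gamma between 0 and 1, and the state, action, and reward at time step t written as s_t, a_t, and r_t.

```latex
Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ \sum_{k=0}^{\infty} \gamma^{k} r_{t+k+1} \;\middle|\; s_t = s,\ a_t = a \right]

Q^{*}(s, a) = \mathbb{E}\left[ r_{t+1} + \gamma \max_{a'} Q^{*}(s_{t+1}, a') \;\middle|\; s_t = s,\ a_t = a \right]
```

The first line is the expected discounted reward when following \pi after taking action a in state s. The second is the recursive form for the optimal Q-function, which is the quantity Q-learning and DQNs try to approximate.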

Traditional Q-learning stores a separate value for every state-action pair in a table, which becomes impractical when the number of states is very large. By replacing the table with a neural network, DQNs can handle high-dimensional state spaces and learn complex relationships between states, actions, and rewards.

To further improve stability and performance, DQNs often utilize two key techniques:

- Experience Replay: DQNs store past experiences in a memory buffer and randomly sample from this buffer to update the network. This breaks the correlation between consecutive experiences and helps stabilize the learning process.

- Target Network: DQNs employ two neural networks. The main network selects actions and is updated at every training step, while a separate target network supplies the Q-value estimates used as learning targets. The target network’s weights are periodically copied from the main network, which keeps the targets from shifting at every step and makes learning more stable.
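Both techniques are simple to sketch without a deep learning library. The code below is a rough illustration, not a full DQN: `ReplayBuffer` and `sync_target` are hypothetical names, the transition tuples are made up, and network weights are represented by plain Python lists rather than real tensors.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)   # oldest entries drop off first

    def add(self, transition):
        self.memory.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform random sampling breaks up runs of consecutive experiences.
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def sync_target(online_weights, target_weights):
    """Copy the main network's weights into the target network in place."""
    target_weights.clear()
    target_weights.update({name: list(w) for name, w in online_weights.items()})


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add((step, 0, 0.0, step + 1, False))   # hypothetical transitions

batch = buffer.sample(2)

# Weights are stood in for by plain lists; a real DQN would use tensors.
online = {"layer1": [0.5, -0.2]}
target = {"layer1": [0.0, 0.0]}
sync_target(online, target)
```

In a training loop, `sample` would be called at every update step, while `sync_target` would be called only every so many steps, so that the targets stay fixed in between.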

Applications of Reinforcement Learning

RL has applications across a wide range of domains:

- Robotics: The training of robots to perform tasks in complex and dynamic environments, such as grasping objects, navigating obstacles, and collaborating with humans.

- Game playing: Development of AI agents that can play games at superhuman levels, such as mastering Atari games, defeating world champions in Go, and creating realistic non-player characters in video games.

- Control systems: Optimizing industrial processes and infrastructure, such as controlling manufacturing robots, improving traffic flow in a city, and managing energy consumption.

- Finance: Developing trading algorithms and portfolio optimization strategies that aim to maximize returns while managing risk in financial markets.

- Healthcare: Personalized treatment plans and assistive technologies, like personalized drug dosage recommendations, prosthetic limbs tailored to individual needs, and robots that assist with surgery or patient care.

- Recommendation systems: Improving recommendation systems by learning user preferences and providing personalized recommendations for products, services, and content.

Challenges and Future Directions

Although RL has great promise, challenges remain to be overcome:

- Sample efficiency: RL algorithms usually require a huge amount of experience to learn effectively, which may be costly and time-consuming to collect.

- Exploration-exploitation trade-off: Finding the right balance between exploring new actions and exploiting known good actions is still a problem.

- Generalization: RL agents often struggle to apply what they have learned to new situations and environments, yet this ability is essential for real-world applications.

Despite all these challenges, RL is an ever-evolving field with continuous research and development. Some of the future directions include the following:

- Developing more efficient algorithms: This involves reducing the amount of data needed for learning by incorporating prior knowledge, using more sophisticated exploration strategies, and applying hierarchical RL methods.

- Improving generalization: Enabling the RL agent to adapt to new environments and tasks through transfer learning, meta-learning, and more robust representations of the environment.

- Combining RL with other areas of machine learning: Integrating RL with fields such as deep learning and natural language processing to create more intelligent and versatile agents.

Conclusion

Reinforcement learning has grown into a powerful framework for building intelligent machines that interact with their environment, learn from it, and adapt. Its ability to learn through trial and error, combined with advances in deep learning, has led to notable results in many fields, from game playing and robotics to finance and healthcare.

Challenges continue to exist within the realms of sample efficiency, exploration-exploitation balance, and generalization; however, continued research and development promise to resolve these challenges and unlock RL’s full potential. As the field matures, we can expect even more innovative applications of RL to shape the future of artificial intelligence and its impact on our lives.

It is also time to think seriously about how these technologies will be deployed. The ethical implications and societal impact of RL need to be considered up front, so that issues such as safety, fairness, and accountability are addressed before RL systems are released into the world.
