Mixture of Experts (MoE): Inside Modern LLM Architectures

In recent years, the Mixture of Experts (MoE) architecture has reshaped large language models (LLMs), improving both computational efficiency and scalability. The idea dates back to early-1990s work by Jacobs, Jordan, Nowlan, and Hinton, and was adapted to large neural networks as the sparsely gated MoE layer by Noam Shazeer and colleagues in 2017. MoE uses specialized “experts” to process different inputs, an approach that has proven valuable for scaling models while keeping computational demands manageable.

MoE matters because it lets large language models, and even computer vision models, grow in capacity without a proportional increase in computational cost, offering a practical path for handling massive datasets with fewer resources. Models such as Google’s Switch Transformer and GLaM have integrated MoE successfully, demonstrating its potential for production-scale LLMs. Whether proprietary systems such as GPT-4 and Claude use MoE has not been publicly confirmed by their developers.

Technical Foundation

Basic Architecture Explanation

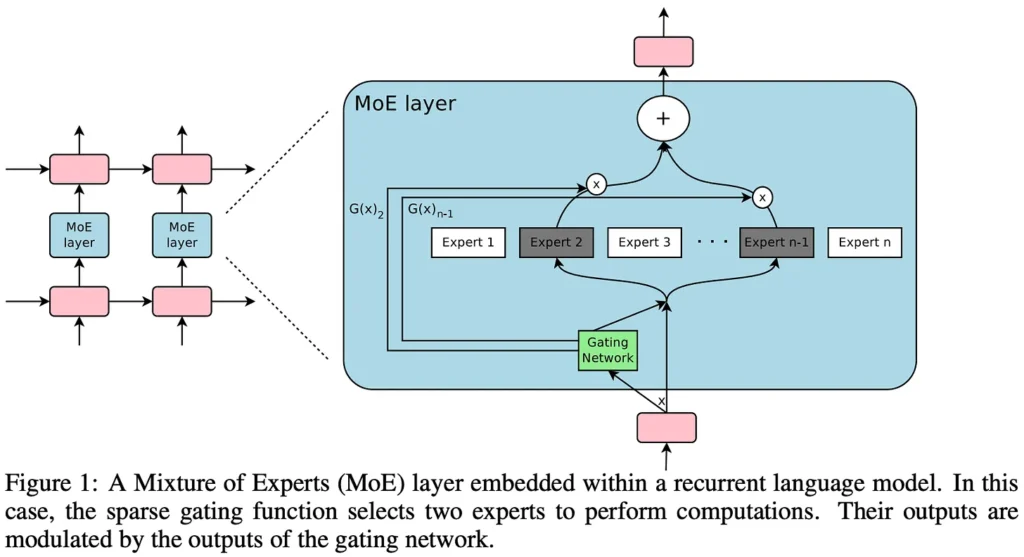

MoE models consist of multiple smaller neural networks, known as experts, and a gating network that routes each input to the most relevant experts. Unlike traditional dense models, where all parts of the model are active at all times, MoE activates only a subset of experts for each input (in transformer MoE layers, typically for each token). This technique, called sparse activation, reduces the computation needed per input.
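The structure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the class name `ToyMoELayer`, the helper `make_expert`, and the choice of a single random linear map per expert are all assumptions made for the example. It shows a gating network scoring the experts, the top `k` being selected, and only those experts being evaluated.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, rng):
    """Hypothetical toy expert: one linear map with random weights."""
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]
    return expert

class ToyMoELayer:
    """Illustrative sparse MoE layer: a gate plus several experts."""

    def __init__(self, dim, num_experts, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        self.experts = [make_expert(dim, rng) for _ in range(num_experts)]
        # Gating network: one weight vector per expert.
        self.gate_w = [[rng.uniform(-1, 1) for _ in range(dim)]
                       for _ in range(num_experts)]

    def forward(self, x):
        # Score every expert for this input.
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.gate_w]
        # Keep only the k highest-scoring experts (sparse activation).
        chosen = sorted(range(len(logits)), key=lambda i: logits[i],
                        reverse=True)[:self.k]
        weights = softmax([logits[i] for i in chosen])
        out = [0.0] * len(x)
        for weight, i in zip(weights, chosen):
            y = self.experts[i](x)  # experts that were not chosen never run
            out = [o + weight * yi for o, yi in zip(out, y)]
        return out, chosen

layer = ToyMoELayer(dim=4, num_experts=8, k=2)
output, chosen = layer.forward([1.0, 0.5, -0.3, 2.0])
print("experts used:", chosen)
```

In a real model the experts and the gate are trained jointly and the routing is applied to batches of tokens; the sketch only shows the control flow for a single input.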

Expert Networks and Gating

Each expert in an MoE network tends to specialize in a subset of inputs as training progresses. The gating network (also called the router), which plays a central role in MoE, scores the experts for each input and decides which of them will process it. These scores are learned jointly with the experts rather than derived from hand-written rules about input type or complexity. This routing function allows MoE models to allocate computational resources dynamically, improving both efficiency and scalability.

Comparison with Traditional Transformers

Traditional transformer models are dense, meaning that every part of the model is engaged for all inputs, regardless of their complexity. In contrast, MoE models are designed for sparse activation: only selected experts are engaged for each input. This lets MoE models handle large-scale tasks with fewer active parameters, so capacity can grow without a proportional increase in computational demand.

Benefits and Challenges

Computational Efficiency

One of the major advantages of MoE is its computational efficiency. By activating only a relevant subset of experts, it reduces the computation performed per input. For instance, the Switch Transformer routes each token to a single expert, so the number of parameters active per token stays small even as the total parameter count grows, which lowers the compute needed for training and inference.

Scalability Advantages

MoE’s architecture enables the expansion of model capacity by adding more experts without requiring a proportional increase in computation per input. This scalability makes it an attractive solution for handling complex data at scale, as more experts can be integrated to support diverse tasks while per-input compute stays roughly stable. The saving applies to compute rather than memory: every expert still has to be stored, so total memory use grows with the number of experts.
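The arithmetic behind this claim is easy to check. The sketch below counts parameters for a single MoE feed-forward layer, assuming each expert is a standard two-matrix feed-forward block and the router is one weight vector per expert. The function names and the sizes (`D_MODEL`, `D_FF`, `K`) are hypothetical values chosen only for illustration, and biases are ignored.

```python
def ffn_params(d_model, d_ff):
    """Parameters in one feed-forward block: two weight matrices, no biases."""
    return 2 * d_model * d_ff

def moe_layer_params(d_model, d_ff, num_experts, k):
    """Return (total, active-per-token) parameter counts for one MoE layer."""
    router = d_model * num_experts   # one gating weight vector per expert
    expert = ffn_params(d_model, d_ff)
    total = num_experts * expert + router
    active = k * expert + router     # only k experts run for a given token
    return total, active

# Hypothetical sizes, for illustration only.
D_MODEL, D_FF, K = 1024, 4096, 2

for n in (2, 8, 64):
    total, active = moe_layer_params(D_MODEL, D_FF, n, K)
    print(f"experts={n:3d}  total={total:>12,}  active={active:>11,}  "
          f"active share={active / total:.1%}")
```

Total parameters grow almost linearly with the number of experts, while the active count per token changes only through the small router term. That gap is what lets capacity increase without a matching increase in per-token compute.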

Training Challenges

Despite these benefits, the MoE architecture presents training challenges. Balancing workloads across experts to prevent bottlenecks is a complex task. Additionally, tuning the gating network so that it does not over-rely on a few experts, while keeping model performance stable across diverse inputs, requires careful optimization. Techniques like noisy gating and load-balancing strategies are used to improve expert distribution, though they add complexity to the training process.
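As one concrete example of a load-balancing strategy, the Switch Transformer paper adds an auxiliary loss proportional to N · Σ f_i · P_i, where N is the number of experts, f_i is the fraction of tokens dispatched to expert i, and P_i is the mean router probability assigned to expert i. The sketch below computes the unscaled value in plain Python; the function name and the toy inputs are assumptions for illustration, and in practice the result is multiplied by a small tunable coefficient before being added to the training loss.

```python
def load_balancing_loss(router_probs, assignments, num_experts):
    """Unscaled auxiliary loss: num_experts * sum_i f_i * P_i.

    router_probs: one probability list per token (each sums to 1).
    assignments:  the expert index each token was dispatched to.
    """
    num_tokens = len(assignments)
    # f_i: fraction of tokens dispatched to expert i.
    f = [0.0] * num_experts
    for e in assignments:
        f[e] += 1.0 / num_tokens
    # P_i: mean router probability for expert i across tokens.
    p = [sum(row[i] for row in router_probs) / num_tokens
         for i in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, p))

# Toy data: 4 tokens, 4 experts.
balanced = load_balancing_loss([[0.25] * 4] * 4, [0, 1, 2, 3], 4)
collapsed = load_balancing_loss([[1.0, 0.0, 0.0, 0.0]] * 4, [0, 0, 0, 0], 4)
print(balanced, collapsed)
```

With perfectly uniform routing the value is 1, and it rises toward the number of experts as routing collapses onto a single expert, so minimizing it pushes the router toward an even spread.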

Implementation Complexities

Implementing MoE models requires advanced strategies for managing the gating mechanism, balancing load distribution, and ensuring each expert functions optimally within the network. Many practitioners use top-k gating (selecting the k most relevant experts for each input) and adaptive load-balancing methods to fine-tune expert utilization. However, these optimizations demand rigorous tuning and are often more challenging than standard dense transformer implementations.
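Top-k gating is often combined with noisy gating. The sketch below follows the noisy top-k formulation from Shazeer et al. (2017): Gaussian noise, scaled by a softplus of a learned noise logit, is added to each gate logit before the top k are kept and renormalized with a softmax. The function names and example logits are hypothetical, and the logits are passed in directly rather than computed from learned weight matrices.

```python
import math
import random

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def noisy_top_k_gate(clean_logits, noise_logits, k, rng, training=True):
    """Return gate weights that are nonzero for only k experts."""
    noisy = []
    for clean, noise_logit in zip(clean_logits, noise_logits):
        # Noise is applied only during training; inference is deterministic.
        noise = rng.gauss(0.0, 1.0) * softplus(noise_logit) if training else 0.0
        noisy.append(clean + noise)
    # Keep the k largest noisy logits.
    top = sorted(range(len(noisy)), key=lambda i: noisy[i], reverse=True)[:k]
    # Softmax over the kept logits only; all other gates stay at zero.
    m = max(noisy[i] for i in top)
    exps = {i: math.exp(noisy[i] - m) for i in top}
    total = sum(exps.values())
    gates = [0.0] * len(noisy)
    for i in top:
        gates[i] = exps[i] / total
    return gates

rng = random.Random(0)
logits = [2.0, 1.0, 0.1, -1.0]   # hypothetical gate logits for 4 experts
noise = [0.0, 0.0, 0.0, 0.0]     # hypothetical learned noise logits
print(noisy_top_k_gate(logits, noise, k=2, rng=rng, training=True))
print(noisy_top_k_gate(logits, noise, k=2, rng=rng, training=False))
```

The noise lets lower-ranked experts occasionally win a slot during training, which gives them gradient signal and helps keep the router from settling on a fixed few experts too early.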

Real-world Applications

Case Studies of MoE in Production

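Notable applications of MoE include Google’s Switch Transformer and Mistral AI’s Mixtral. These models have demonstrated high efficiency in NLP tasks, reaching quality comparable to dense transformers while using far less compute per token. The Switch Transformer paper, for example, reports up to a 7x pre-training speedup over a dense T5 baseline given the same computational resources. Mixtral 8x7B is a general-purpose model that routes each token to 2 of 8 experts per layer, so only about 13B of its roughly 47B parameters are active for any given token.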

Performance Metrics

Performance metrics for MoE models typically emphasize throughput, latency, and cost-efficiency. At a comparable quality level, MoE models often outperform dense transformers on throughput and compute cost, especially in scenarios involving real-time processing or large datasets, although the gains depend on hardware and on how well the expert load is balanced. These models are increasingly favored in cloud environments where resource efficiency directly impacts operating costs.

Cost-Benefit Analysis

The cost-benefit analysis of using MoE models often highlights savings in cloud costs due to reduced computational demand. In environments like Amazon Web Services (AWS) or Google Cloud, where operational expenses scale with usage, MoE models can help companies reach a target performance level at lower cost. However, the initial investment in implementing and tuning MoE architectures can be higher than for traditional dense models because of their complexity.

Future Implications

Emerging Trends

Future MoE advancements are likely to focus on refining gating networks and further optimizing load balancing for improved efficiency. Another trend is improved conditional computation, which would make MoE more versatile by allowing experts to specialize more effectively for diverse tasks, including those outside NLP, such as computer vision and autonomous systems.

Research Directions

Current research in MoE explores methods to refine the specialization of experts and optimize conditional computation for more nuanced applications. For instance, researchers are investigating ways to improve fine-tuning processes so that these models can adapt to specific industry requirements more efficiently. This will be especially important as MoE architectures are applied beyond natural language processing to fields like computer vision and real-time analytics.

Industry Adoption Predictions

As MoE’s cost efficiency and scalability become more evident, industry adoption is expected to grow. Industries requiring high computational throughput but with limited budgets—such as retail, finance, and healthcare—are likely to lead the way in MoE adoption, particularly as pre-trained MoE models become more accessible and adaptable to various domains.

Practical Implementation

Best Practices

Implementing MoE models successfully requires careful planning and tuning. Some recommended practices include:

- Load-balancing Tuning: Prevents overuse of particular experts, which can degrade performance.

- Gating Network Optimization: Ensures the gating mechanism routes inputs effectively without causing bottlenecks.

- Avoiding Over-Specialization: Over-specialized experts can reduce the model’s flexibility. A balanced approach is essential to maintain performance across varied input types.

Common Pitfalls to Avoid

A few common challenges include:

- Load Distribution Issues: Uneven load distribution can lead to expert bottlenecks, impacting model performance.

- Complexity in Gating Mechanisms: The gating network can become overly complex, requiring more computational resources than necessary.

- Overfitting Experts: Specialization in experts can lead to overfitting if the gating network doesn’t balance input allocation properly.
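Several of these pitfalls show up first as skewed expert usage, so it helps to track how tokens are distributed across experts during training. The sketch below is one simple way to do that in plain Python; the function names, the `min_share` threshold, and the sample routing data are all hypothetical choices made for the example.

```python
from collections import Counter

def expert_utilization(assignments, num_experts):
    """Fraction of tokens routed to each expert."""
    counts = Counter(assignments)
    total = len(assignments)
    return [counts.get(i, 0) / total for i in range(num_experts)]

def imbalance_ratio(utilization):
    """Busiest expert's share divided by the mean share (1.0 = perfectly even)."""
    mean = sum(utilization) / len(utilization)
    return max(utilization) / mean

def underused_experts(utilization, min_share):
    """Indices of experts receiving less than min_share of the tokens."""
    return [i for i, u in enumerate(utilization) if u < min_share]

# Hypothetical routing log: expert 0 receives most of the traffic.
skewed = [0] * 70 + [1] * 20 + [2] * 10
util = expert_utilization(skewed, num_experts=4)
print("utilization:", util)
print("imbalance ratio:", round(imbalance_ratio(util), 2))
print("underused experts:", underused_experts(util, min_share=0.05))
```

A ratio well above 1, or experts that receive almost no tokens, is a signal to revisit the load-balancing settings before the imbalance hardens into the bottlenecks described above.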

Conclusion

The Mixture of Experts architecture represents a significant advance in large language model design, balancing computational efficiency with scalability in a way that makes it well-suited for modern applications. While its implementation presents challenges, MoE’s potential to optimize costs and improve performance has already shown promise in production environments. As the technology advances, it is likely to play a significant role in AI and machine learning applications across industries, from NLP to real-time analytics.