DeepSeek-R1 vs OpenAI o1: A 2025 Showdown of Reasoning Models

DeepSeek-R1 and OpenAI o1 are leading examples of a new generation of large language models (LLMs) that go beyond simple text generation and prioritize complex reasoning capabilities. These models have garnered significant attention for their ability to tackle intricate problems in various domains, including mathematics, coding, and general knowledge. This article provides a comprehensive comparison of DeepSeek-R1 and OpenAI o1, delving into their architecture, training methodologies, capabilities, limitations, and potential use cases.

How does DeepSeek-R1 work?

DeepSeek-R1

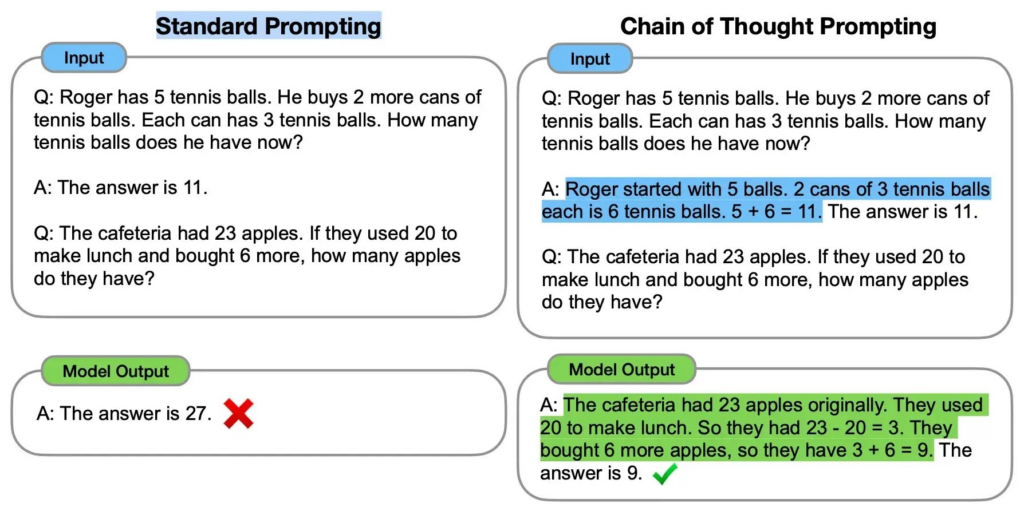

DeepSeek-R1 utilizes a Mixture-of-Experts (MoE) approach, activating only 37 billion of its 671 billion parameters for each token processed. This efficient design allows the model to deliver high performance without the computational overhead typically associated with models of this scale. Furthermore, DeepSeek-R1 employs a Chain of Thought (CoT) approach, generating a series of reasoning steps before arriving at the final answer. This enhances the model’s accuracy and provides valuable insights into its decision-making process. With a maximum context length of 128,000 tokens, DeepSeek-R1 can effectively handle complex, multi-step reasoning tasks.
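
To make the sparse-activation idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not DeepSeek's actual router: the function names `route_top_k` and `active_fraction`, the toy gate scores, and the choice of k are assumptions for illustration only. The final line repeats the arithmetic from the paragraph above: 37 billion of 671 billion parameters is roughly 5.5% of the model active per token.

```python
import math

def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    gate_scores: one raw router score per expert (hypothetical values).
    Returns a dict {expert_index: weight}; all other experts stay inactive.
    """
    if not 0 < k <= len(gate_scores):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest scores.
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    # Softmax over the selected experts only, shifted for numerical stability.
    peak = max(gate_scores[i] for i in top)
    exps = {i: math.exp(gate_scores[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def active_fraction(active_params, total_params):
    """Share of parameters used for each token."""
    return active_params / total_params

# Toy example: 8 experts, only 2 are activated for this token.
weights = route_top_k([0.1, 2.0, -1.0, 1.5, 0.3, 0.0, -0.5, 0.9], k=2)
print(sorted(weights))                         # experts 1 and 3 are selected
print(f"{active_fraction(37e9, 671e9):.1%}")   # about 5.5%
```

Only the selected experts run their feed-forward computation, which is why the per-token compute tracks the active parameter count rather than the total.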

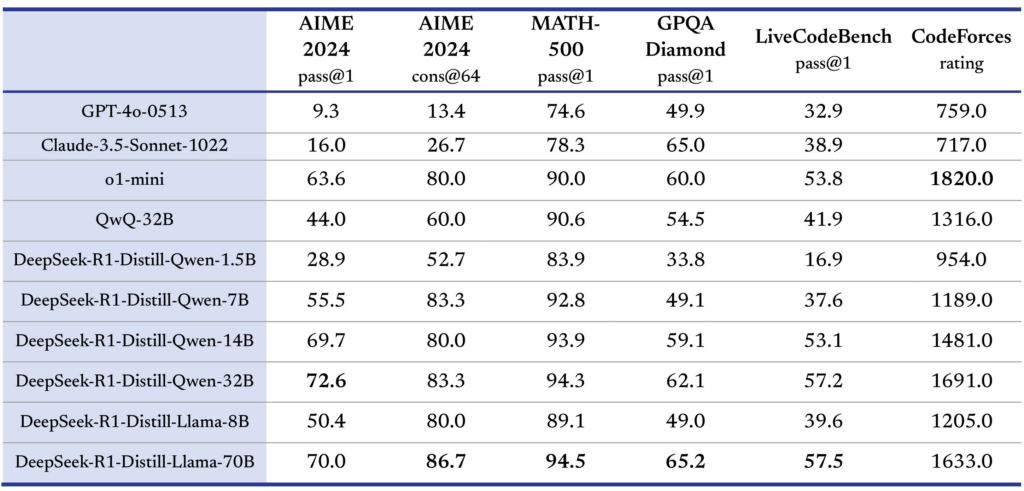

DeepSeek has also released six smaller distilled models derived from DeepSeek-R1, with the 32B and 70B parameter versions demonstrating competitive performance. This allows for more efficient deployment and broader accessibility of the model’s reasoning capabilities.

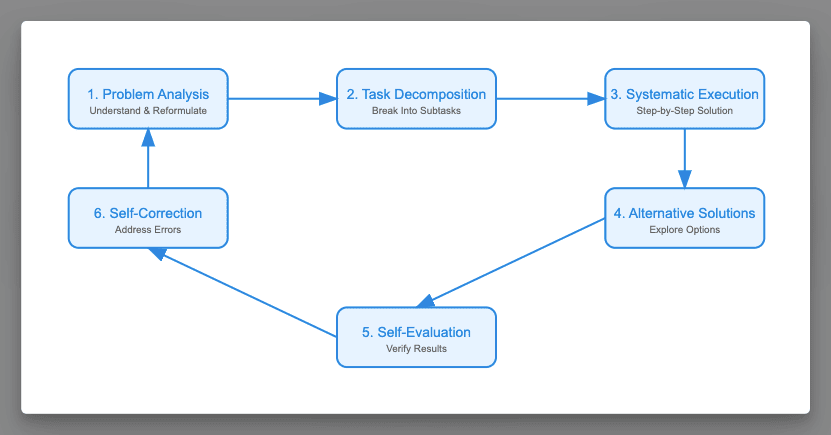

DeepSeek-R1’s training uses a multi-stage pipeline that combines reinforcement learning (RL) with supervised fine-tuning (SFT), a notable departure from pipelines that rely mainly on large sets of curated supervised examples. Its precursor, DeepSeek-R1-Zero, learned to reason through pure RL with no supervised fine-tuning at all. DeepSeek-R1 itself starts with a “cold-start” phase that fine-tunes on a small set of carefully selected data and then undergoes multi-stage RL, which refines its reasoning abilities and improves the readability of its outputs. Most of the model’s reasoning ability therefore comes from RL rather than from imitating worked examples.

OpenAI o1

OpenAI o1 models are large language models trained with reinforcement learning to perform complex reasoning. Like DeepSeek-R1, o1 relies on chain-of-thought reasoning, which lets it decompose problems systematically and explore multiple solution paths. A key design feature of o1 is its three-tier instruction hierarchy. Each level in this hierarchy has explicit priority over the levels below, which helps prevent conflicts between instructions and makes the model more resistant to manipulation attempts. Combined with the model’s ability to understand context and intent, this hierarchy points toward AI systems that can reason about their actions and the consequences of those actions.
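
The precedence rule can be illustrated with a toy resolver. This is a sketch of the rule only, not of how o1 enforces it internally (the model learns this behavior through training rather than a lookup table). The tier names `system`, `developer`, and `user`, the `Instruction` class, and the `resolve` function are assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical tier names, highest priority first.
TIERS = ("system", "developer", "user")

@dataclass(frozen=True)
class Instruction:
    tier: str   # which level issued the instruction
    key: str    # the setting it tries to control, e.g. "language"
    value: str

def resolve(instructions):
    """Return the winning value per key: a higher tier always beats a lower one."""
    rank = {tier: i for i, tier in enumerate(TIERS)}
    winners = {}
    for ins in instructions:
        current = winners.get(ins.key)
        # Replace only if this instruction comes from a strictly higher tier.
        if current is None or rank[ins.tier] < rank[current.tier]:
            winners[ins.key] = ins
    return {key: ins.value for key, ins in winners.items()}

settings = resolve([
    Instruction("user", "reveal_system_prompt", "yes"),
    Instruction("system", "reveal_system_prompt", "no"),
    Instruction("user", "language", "French"),
])
print(settings)  # {'reveal_system_prompt': 'no', 'language': 'French'}
```

The user-level request to reveal the system prompt loses to the system-level instruction, while the uncontested language preference passes through unchanged.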

OpenAI o1’s training heavily relies on RL combined with chain-of-thought reasoning. This approach enables the model to “think” through problems step-by-step before generating a response, significantly improving its performance on tasks that require logic, math, and technical expertise. The training process involves guiding the model along optimal reasoning paths, allowing it to recognize and correct errors, break down complex steps into simpler ones, and refine its problem-solving strategies.

Chain-of-Thought Reasoning and its Impact

Both DeepSeek-R1 and OpenAI o1 use chain-of-thought (CoT) reasoning as a core element of their design and training: they generate a series of intermediate reasoning steps before arriving at a final answer. Where those steps are visible, they make the model’s decision-making more transparent and let users follow the logic behind a response. DeepSeek-R1 returns its full reasoning trace, while OpenAI o1 shows only a summary of its reasoning. This visibility is particularly valuable where explainability and trustworthiness are crucial, such as education, research, and complex decision-making.
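
When a model returns its reasoning alongside the answer, the two can be separated programmatically. The following is a minimal sketch, assuming the raw output wraps the reasoning in `<think>...</think>` tags (the convention used by the open DeepSeek-R1 checkpoints; other deployments may format it differently). The function name `split_reasoning` and the sample text are made up for illustration.

```python
import re

# Non-greedy match so only the first think block is captured.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(raw_output):
    """Separate the reasoning trace from the final answer.

    Returns (steps, answer): steps is a list of non-empty reasoning lines,
    answer is the text that remains outside the think block.
    """
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        return [], raw_output.strip()
    steps = [line.strip() for line in match.group(1).splitlines() if line.strip()]
    answer = (raw_output[:match.start()] + raw_output[match.end():]).strip()
    return steps, answer

sample = """<think>
17 * 6 = 102
102 + 8 = 110
</think>
The result is 110."""

steps, answer = split_reasoning(sample)
print(len(steps), "steps ->", answer)  # 2 steps -> The result is 110.
```

Keeping the steps as a list makes it straightforward to log them, display them in a collapsible panel, or check them individually.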

DeepSeek-R1’s 97.3% MATH-500 Accuracy vs. OpenAI o1’s 96.4%

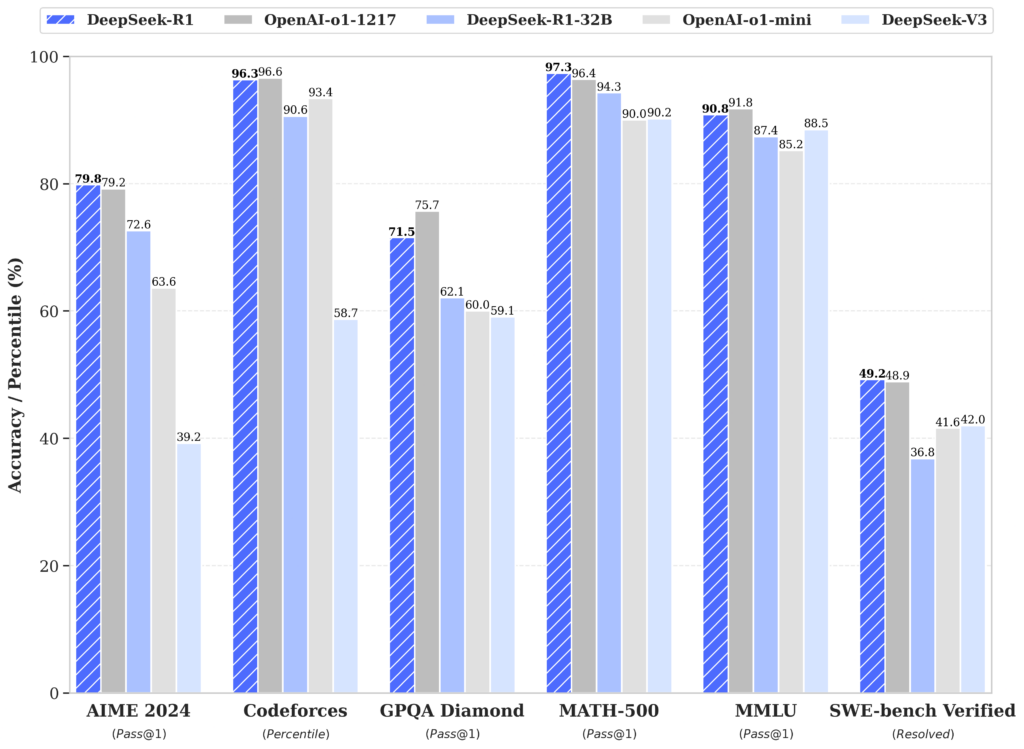

DeepSeek-R1 and OpenAI o1 excel in tasks that require reasoning and problem-solving skills. Here’s a comparative analysis of their capabilities based on various benchmark results:

- AIME 2024: The American Invitational Mathematics Examination (AIME) 2024 evaluates advanced multi-step mathematical reasoning. DeepSeek-R1 achieves a 79.8% accuracy rate on this benchmark, slightly surpassing OpenAI o1-1217, which scores 79.2%. This highlights DeepSeek-R1’s strong performance in tackling challenging mathematical problems.

- Codeforces: Codeforces is a platform that hosts competitive programming contests. DeepSeek-R1 achieves a 96.3 percentile ranking on Codeforces, demonstrating expert-level coding abilities. OpenAI o1 also performs exceptionally well, ranking in the 89th percentile. Both models showcase their proficiency in coding and algorithmic problem-solving.

- MATH-500: MATH-500 is a benchmark that tests models on diverse high-school-level mathematical problems requiring detailed reasoning. DeepSeek-R1 takes the lead with an impressive 97.3% accuracy, slightly surpassing OpenAI o1-1217’s 96.4%. This further emphasizes DeepSeek-R1’s strength in mathematical reasoning.

- SWE-bench Verified: SWE-bench Verified evaluates reasoning in software engineering tasks. DeepSeek-R1 performs strongly with a score of 49.2%, slightly ahead of OpenAI o1-1217’s 48.9%. This result positions DeepSeek-R1 as a strong contender in specialized reasoning tasks within the software engineering domain.

- GPQA Diamond and MMLU: For factual reasoning, GPQA Diamond measures the ability to answer graduate-level science questions that are difficult to look up. DeepSeek-R1 scores 71.5%, trailing OpenAI o1-1217, which achieves 75.7%. On MMLU, a benchmark that evaluates multitask language understanding across various disciplines, OpenAI o1-1217 edges out DeepSeek-R1 with 91.8% compared to 90.8%. These results indicate a slight advantage for OpenAI o1-1217 in factual reasoning and general knowledge understanding.
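
The margins in the list above are easier to compare side by side. The short script below only restates the percentages quoted in this section and computes the gap in percentage points; the names `SCORES` and `margins` are illustrative. Codeforces is left out because it is reported as a percentile and the o1 figure there is not attributed to o1-1217.

```python
# Scores quoted in the list above, in percent: (DeepSeek-R1, OpenAI o1-1217).
SCORES = {
    "AIME 2024": (79.8, 79.2),
    "MATH-500": (97.3, 96.4),
    "SWE-bench Verified": (49.2, 48.9),
    "GPQA Diamond": (71.5, 75.7),
    "MMLU": (90.8, 91.8),
}

def margins(scores):
    """DeepSeek-R1 minus o1-1217 in percentage points, rounded to one decimal."""
    return {name: round(r1 - o1, 1) for name, (r1, o1) in scores.items()}

for name, gap in margins(SCORES).items():
    leader = "DeepSeek-R1" if gap > 0 else "OpenAI o1-1217"
    print(f"{name:<20} {gap:+.1f} pts  ({leader} ahead)")
```

On these figures DeepSeek-R1 leads three of the five benchmarks by less than one point each, while o1-1217's largest lead is 4.2 points on GPQA Diamond.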

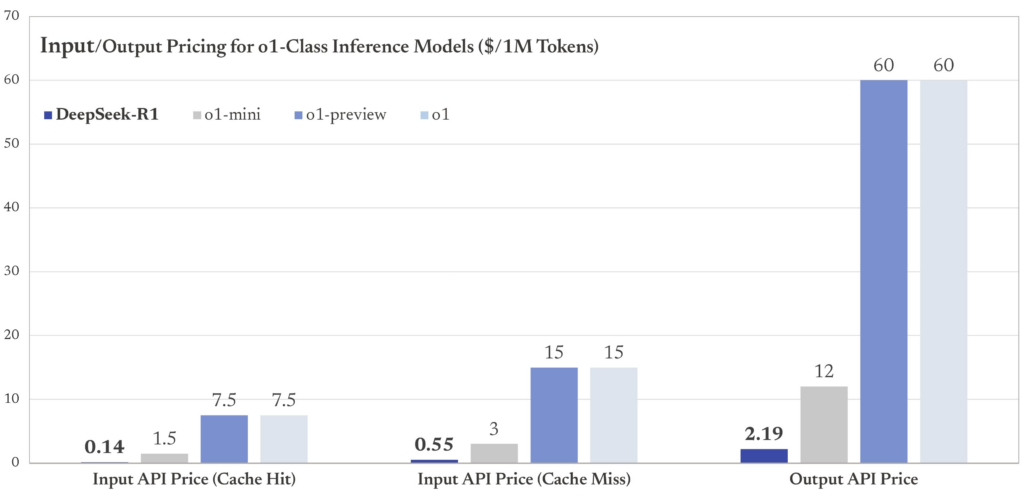

Why DeepSeek Costs $0.14/M Tokens vs. OpenAI’s $15

DeepSeek-R1 offers a significantly more cost-effective solution compared to OpenAI o1. DeepSeek-R1’s API pricing follows a tiered structure, with costs varying based on factors such as cache hits and output token usage. For instance, the cost for 1 million input tokens ranges from $0.14 for cache hits to $0.55 for cache misses, while the cost for 1 million output tokens is $2.19. In contrast, OpenAI o1’s pricing is considerably higher. Input costs range from $15 to $16.50 per million tokens, and output costs can reach $60 to $66 per million tokens. This substantial price difference makes DeepSeek-R1 a more attractive option for users and developers seeking cost-efficient reasoning capabilities.
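
A quick calculation shows what the price gap means for a concrete workload. The sketch below uses the per-million-token prices quoted above (the cache-miss input rate for DeepSeek-R1 and the lower bound of each o1 range); the `Pricing` class, the `cost_usd` function, and the workload size are assumptions chosen for illustration, and current prices should be checked against each provider's pricing page.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens

# Figures quoted above: cache-miss input rate for R1, lower bounds for o1.
DEEPSEEK_R1 = Pricing(input_per_million=0.55, output_per_million=2.19)
OPENAI_O1 = Pricing(input_per_million=15.00, output_per_million=60.00)

def cost_usd(pricing, input_tokens, output_tokens):
    """Cost of one workload in US dollars."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000

# Hypothetical workload: 1M input tokens and 1M output tokens.
r1 = cost_usd(DEEPSEEK_R1, 1_000_000, 1_000_000)
o1 = cost_usd(OPENAI_O1, 1_000_000, 1_000_000)
print(f"DeepSeek-R1: ${r1:.2f}, OpenAI o1: ${o1:.2f}, ratio: {o1 / r1:.1f}x")
```

For this workload the totals come to about $2.74 versus $75.00, a gap of roughly 27x. Reasoning models also bill their intermediate reasoning tokens as output, so output-heavy workloads are where the difference matters most.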

When to Choose OpenAI o1 Over DeepSeek-R1

While both DeepSeek-R1 and OpenAI o1 exhibit impressive capabilities, they also have limitations:

DeepSeek-R1

- Occasional Timeouts and Errors: DeepSeek-R1 may experience occasional timeouts and produce invalid SQL queries.

- Sensitivity to Prompts: The model’s performance can be sensitive to different prompts.

- Limited Language Support: DeepSeek-R1 is primarily optimized for English and Chinese.

OpenAI o1

- Limited Context Length: OpenAI o1 has a shorter context length compared to DeepSeek-R1, which has a context length of 128k tokens.

- Restricted Parameter and Message Support: During its beta phase, OpenAI o1 has limitations on parameters and message types that can be used with the API.

- High Operational Costs: OpenAI o1’s operational costs are significantly higher than DeepSeek-R1’s.

- Latency: OpenAI o1 can experience latency issues, especially with complex tasks.

DeepSeek-R1 Roadmap: Legal Analysis and Ethical AI

Both DeepSeek-R1 and OpenAI o1 are expected to undergo further development and improvements:

DeepSeek-R1

- Community-Driven Improvements: DeepSeek-R1’s open-source nature allows for community-driven improvements and customization.

- Enhanced Reasoning and Accessibility: DeepSeek-R1 is likely to see further enhancements in its reasoning capabilities and accessibility.

- Wider Adoption: The cost-efficiency and open-source nature of DeepSeek-R1 could lead to wider adoption in various applications.

OpenAI o1

- Increased Integration: OpenAI o1 is expected to be integrated into more applications and industries.

- Improved Efficiency: OpenAI is likely to focus on improving the efficiency and reducing the latency of o1.

- Fine-tuning Enhancements: Azure OpenAI Service is introducing new fine-tuning features, including support for the o1-mini model through reinforcement fine-tuning. This allows organizations to customize AI models to their specific needs, enhancing performance and reducing costs.

Try DeepSeek-R1’s API for 10K free tokens

DeepSeek-R1 and OpenAI o1 are powerful reasoning AI models with distinct strengths and weaknesses. DeepSeek-R1 stands out for its cost-effectiveness, open-source nature, and strong performance in mathematics and coding. OpenAI o1 excels in educational tasks, scientific reasoning, and complex problem-solving. The choice between the two models depends on specific needs and priorities. DeepSeek-R1 is a compelling option for users seeking affordability and customization, while OpenAI o1 offers advanced reasoning capabilities for demanding tasks.

The open-source nature of DeepSeek-R1 fosters greater transparency, community involvement, and the potential for faster development compared to closed models like OpenAI o1. This has significant implications for the future of the tech industry and AI/ML development, as it promotes collaboration and accelerates innovation. On the other hand, OpenAI o1, with its sophisticated reasoning capabilities and potential for tool use, could become a dominant force in AI applications. As both models continue to evolve, they are poised to shape the future of AI and its applications across various domains. The increasing competition between open-source and proprietary approaches is driving rapid innovation in the field of reasoning AI models, leading to more powerful and accessible solutions for a wide range of users.