Meet Phi-4 Models: Microsoft’s New AI Models That Fit on Your Laptop

Imagine an AI that not only chats but sees, hears, and thinks—doing all this while running effortlessly on your personal laptop or even your phone. Sounds futuristic, right? Microsoft’s latest Phi-4 models, Phi-4-Multimodal (5.6B parameters) and Phi-4-Mini (3.8B parameters), have turned this futuristic vision into reality. After months of anticipation, they’re finally here and making waves across the AI community for all the right reasons. Whether you’re a developer looking for a powerful yet lightweight AI assistant or a researcher seeking state-of-the-art (SOTA) performance, these models deliver in spades. Let’s explore why Phi-4 is currently the hottest topic in AI.

Why Phi-4 Matters: The AI Arms Race Simplified

AI models have rapidly grown in size and complexity, but bigger isn’t always better—especially when efficiency and accessibility matter. Microsoft recognized this and designed Phi-4 models to hit the sweet spot: powerful enough for sophisticated tasks, yet compact enough to deploy on personal devices without massive computing resources.

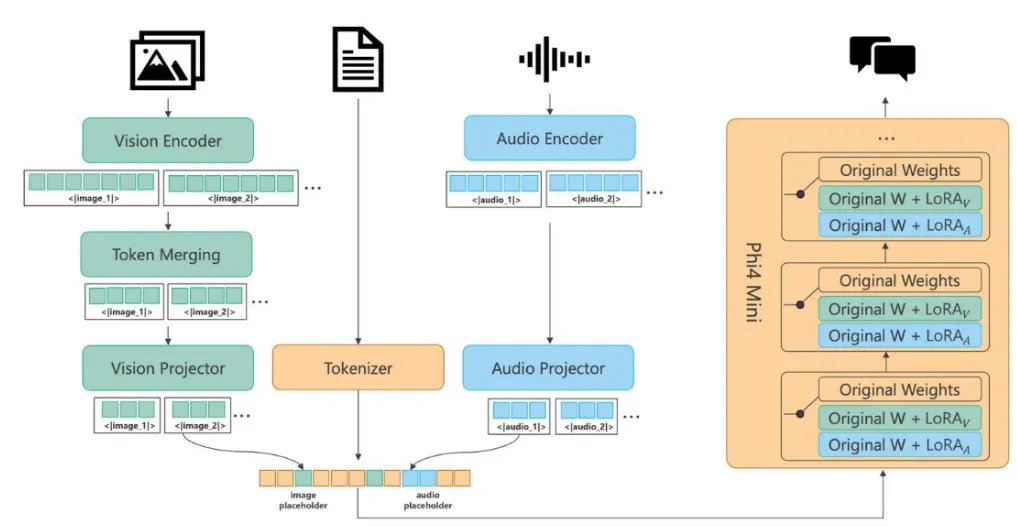

Phi-4-Multimodal stands out as a versatile all-rounder, effortlessly handling text, images, and audio. It’s powered by Microsoft’s innovative “Mixture of LoRAs” architecture, which allows users to adapt the model quickly to new tasks by simply plugging in adapters—no tedious retraining required.
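To make the adapter idea concrete, here is a toy pure-Python sketch of one frozen layer with swappable low-rank adapters. It is illustrative only: the `LoRALayer` class, the tiny matrices, and the modality names are assumptions made for this example, not Microsoft's implementation, which applies its adapters inside a full Transformer.

```python
# Toy sketch of a "mixture of LoRAs": one frozen base weight matrix plus
# small per-modality low-rank updates. All names and numbers are hypothetical.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALayer:
    def __init__(self, base_weight):
        self.base_weight = base_weight  # frozen; never modified
        self.adapters = {}              # modality name -> (A, B)

    def add_adapter(self, name, a, b):
        """Register a low-rank pair: A is (rank x in_dim), B is (out_dim x rank)."""
        self.adapters[name] = (a, b)

    def forward(self, x, modality=None):
        """Compute W x, plus B (A x) when an adapter is selected."""
        out = matvec(self.base_weight, x)
        if modality is not None:
            a, b = self.adapters[modality]
            delta = matvec(b, matvec(a, x))
            out = [o + d for o, d in zip(out, delta)]
        return out


# 2x2 identity base weight with rank-1 adapters for two modalities.
layer = LoRALayer([[1.0, 0.0], [0.0, 1.0]])
layer.add_adapter("vision", a=[[1.0, 1.0]], b=[[0.5], [0.0]])
layer.add_adapter("speech", a=[[1.0, -1.0]], b=[[0.0], [2.0]])

x = [2.0, 1.0]
text_out = layer.forward(x)                       # base model only
vision_out = layer.forward(x, modality="vision")  # base + vision adapter
speech_out = layer.forward(x, modality="speech")  # base + speech adapter
```

The point of the design is that the base weights stay untouched: adding a new capability means registering another small `(A, B)` pair rather than retraining the whole layer.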

On the other hand, Phi-4-Mini is an impressive mathematical and coding prodigy packed into just 3.8 billion parameters. Astonishingly, this compact model outperforms others twice its size on complex logical tasks, and it can even run on lightweight hardware like a Raspberry Pi.

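One standout feature: Phi-4-Mini’s function calling capability. Think of it as giving your AI a way to request external data. Ask it about today’s weather, and instead of guessing, the model emits a structured call to a weather function that your application has registered; the application runs the call and passes the result back for the model to turn into an answer. The model never fetches anything itself, so it is less a built-in Google search than a well-informed assistant that knows which tool to ask for.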
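In practice, function calling means the model produces a structured request and your application executes it. Here is a minimal sketch of the application side. The `get_weather` tool, the `TOOLS` registry, and the JSON shape of the call are hypothetical stand-ins; the real tool-call format is defined by the model's chat template, so treat this as an illustration of the pattern rather than Phi-4-Mini's API.

```python
import json

# Hypothetical tool the application exposes; a real one would call a weather API.
def get_weather(city):
    return {"city": city, "forecast": "sunny", "temp_c": 21}

# Registry mapping tool names the model may request to local Python functions.
TOOLS = {"get_weather": get_weather}

def dispatch_tool_call(model_output):
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])

# Stand-in for what a model might emit; the exact format is an assumption.
fake_model_output = '{"name": "get_weather", "arguments": {"city": "Seattle"}}'
result = dispatch_tool_call(fake_model_output)
print(result)
```

The result would then be sent back to the model as a follow-up message so it can phrase the final answer.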

Under the Hood: Inside Phi-4’s Innovative Architecture

Let’s get technical for a moment. Microsoft didn’t just iterate—they reinvented.

Phi-4-Multimodal: The Ultimate Multitasker

Vision: Equipped with a SigLIP-400M encoder and a dynamic multi-crop strategy, Phi-4 effortlessly interprets images ranging from internet memes to detailed medical scans.

Speech: Boasting 24 conformer blocks with an impressive 80ms token rate, Phi-4 achieves top-tier speech recognition results, accurately understanding various accents.

Training: Phi-4 underwent extensive, layered training—a four-stage vision regimen followed by meticulous two-stage audio fine-tuning. It’s like giving the model an education from MIT, Oxford, and Juilliard, simultaneously.
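As a quick illustration of what the 80ms token rate means for context usage, the sketch below estimates how many audio tokens a clip produces. It assumes one token per 80 ms of audio and ignores any special or padding tokens; the function name is made up for this example.

```python
import math

TOKEN_MS = 80  # token rate quoted above: one audio token per 80 ms

def audio_tokens(duration_seconds):
    """Estimate audio tokens for a clip, rounding a partial frame up."""
    return math.ceil(duration_seconds * 1000 / TOKEN_MS)

one_minute = audio_tokens(60)
ten_seconds = audio_tokens(10)
```

Under that assumption, a one-minute clip costs 750 tokens and a ten-second clip costs 125, which is handy when budgeting context for long recordings.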

Phi-4-Mini: Small Size, Big Brains

Architecture: Built with 32 Transformer layers that use grouped-query attention (GQA), Phi-4-Mini excels at mathematical reasoning and code generation, often outperforming dedicated larger models.

Language Capabilities: Supports an extensive 200K-token vocabulary covering over 100 languages. Need a Mandarin haiku instantly? Phi-4-Mini has got you covered.
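Grouped-query attention lets several query heads share a single key/value head, which shrinks the key/value cache kept in memory during generation. The sketch below shows the head mapping. The head counts (24 query heads, 8 key/value heads) are assumptions chosen for illustration; check the model's configuration file for the actual values.

```python
NUM_QUERY_HEADS = 24  # assumed for illustration
NUM_KV_HEADS = 8      # assumed for illustration

def kv_head_for(query_head):
    """Return the index of the shared key/value head used by a query head."""
    group_size = NUM_QUERY_HEADS // NUM_KV_HEADS
    return query_head // group_size

# Which key/value head each query head reads from.
mapping = [kv_head_for(q) for q in range(NUM_QUERY_HEADS)]

# Cache size relative to standard attention (one KV head per query head).
kv_cache_ratio = NUM_KV_HEADS / NUM_QUERY_HEADS
```

With these numbers, every three consecutive query heads share one key/value head, so the cache is a third of what standard multi-head attention would need.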

Benchmarks: Phi-4 Dominates Across Domains

Performance isn’t just theoretical. Phi-4 models are already setting new standards:

Vision: Phi-4-Multimodal beats popular models like Phi-3.5-Vision and Qwen2.5-VL in tasks like OCR and chart analysis. It even matches heavyweight contenders like Gemini 2.0 in image-to-text conversion.

Speech Recognition: Topping the Hugging Face OpenASR Leaderboard, Phi-4-Multimodal sets a new standard in audio comprehension across datasets like FLEURS and CommonVoice.

Math and Coding: Phi-4-Mini shines in benchmarks such as GSM8K and HumanEval, easily outperforming larger models in mathematical and coding tasks, making it an ideal choice for applications demanding precision and speed.

In short, Phi-4 isn’t just efficient—it’s incredibly powerful, proving that bigger isn’t necessarily better.

Deployment Made Simple: From Azure to Personal Devices

Microsoft didn’t just design Phi-4 for performance; they made sure it’s accessible:

Azure AI Foundry: Deploy Phi-4 effortlessly on Azure with just a few clicks. Microsoft provides an intuitive official client to help you quickly spin up a Phi-4 endpoint.

Edge Devices: Using Microsoft Olive and ONNX, you can quantize Phi-4-Mini for efficient deployment on smartphones or Raspberry Pi. Imagine running advanced AI locally without reliance on the cloud.

Local Testing: With vLLM, Phi-4 can comfortably run inference tasks locally—even on something as lightweight as a MacBook Air. It’s a game changer for real-time applications such as translation and coding assistance.
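To see why quantization matters for edge deployment, here is a back-of-the-envelope estimate of weight storage at different precisions. It counts weights only and ignores activations, the key/value cache, and runtime overhead, so real memory use will be higher; the function name is made up for this example.

```python
PARAMS = 3.8e9  # Phi-4-Mini parameter count from the article

def weights_gb(bits_per_param):
    """Approximate weight storage in GB (10^9 bytes), ignoring overhead."""
    return PARAMS * bits_per_param / 8 / 1e9

fp16_gb = weights_gb(16)  # half precision
int8_gb = weights_gb(8)   # 8-bit quantization
int4_gb = weights_gb(4)   # 4-bit quantization
```

That works out to roughly 7.6 GB at 16-bit, 3.8 GB at 8-bit, and 1.9 GB at 4-bit, which is the difference between needing a workstation and fitting in a phone's memory.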

Hands-on: Your Quickstart Guide to Phi-4

Excited to experiment with Phi-4? Microsoft’s GitHub repository offers straightforward examples:

Azure AI Foundry Example:

from azure.ai.inference import ChatCompletionsClient

from azure.core.credentials import AzureKeyCredential

client = ChatCompletionsClient(endpoint="YOUR_ENDPOINT", credential=AzureKeyCredential("YOUR_KEY"))

response = client.complete({"messages": [{"role": "user", "content": "Explain quantum physics in 3 sentences."}]})

print(response.choices[0].message.content)

(Ensure you’ve installed the azure-ai-inference package with pip install azure-ai-inference, and replaced the placeholders with your own endpoint and API key.)

Local Testing Example:

pip install vllm

vllm serve "microsoft/Phi-4-mini-instruct"
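Once the server is running, you can query it over its OpenAI-compatible HTTP API. The sketch below uses only the Python standard library and assumes the server is listening on vLLM's default address, http://localhost:8000; the helper names `build_chat_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Default address of `vllm serve`; adjust if you pass --host or --port.
BASE_URL = "http://localhost:8000/v1"
MODEL = "microsoft/Phi-4-mini-instruct"

def build_chat_request(prompt, max_tokens=128):
    """Build an HTTP request for the OpenAI-compatible chat completions route."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server up, calling `ask("Write a haiku about small models.")` should return the model's reply as a string.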

These snippets should help you quickly harness Phi-4’s power, whether you’re developing locally or deploying in the cloud.

Phi-4 vs. The Competition: Where Does it Stand?

Phi-4 isn’t just another AI model—it’s redefining the competitive landscape:

Gemini 2.0 Flash: While Gemini excels in math, Phi-4 offers comparable performance but adds multimodal capabilities.

GPT-4o: While GPT-4o is renowned for its visual abilities, Phi-4 matches these skills but runs effortlessly locally.

Whisper: Phi-4 exceeds Whisper’s speech recognition capabilities and further adds impressive coding support.

Simply put, Phi-4 doesn’t just compete—it integrates the best features of other top-tier models into an efficient, compact package.

Real-World Impact: Phi-4 at Work

The practical implications of Phi-4 are impressive and diverse:

Healthcare: Rapidly analyzing medical images like X-rays and instantly generating detailed diagnostic reports.

E-commerce: Efficiently translating and interpreting customer queries, enhancing global customer engagement.

Education: Quickly grading homework and offering multilingual feedback, vastly improving educational access and efficiency.

In one remarkable case, a development team leveraged Phi-4-Mini to create a stock trading chatbot, cutting trade execution time by 30% compared to previous AI implementations.

Future-Proofing Your AI Strategy

Phi-4 isn’t merely about meeting current AI needs—it’s designed with future expansion and ethical standards in mind:

Quantum Integration: Microsoft’s forward-looking architecture leaves room for future quantum computing integration.

Ethical AI: With built-in safeguards to reduce risks like hallucination, Phi-4 stands as a responsible AI solution that aligns with growing ethical standards in the industry.