7 Hugging Face Features You Should Know

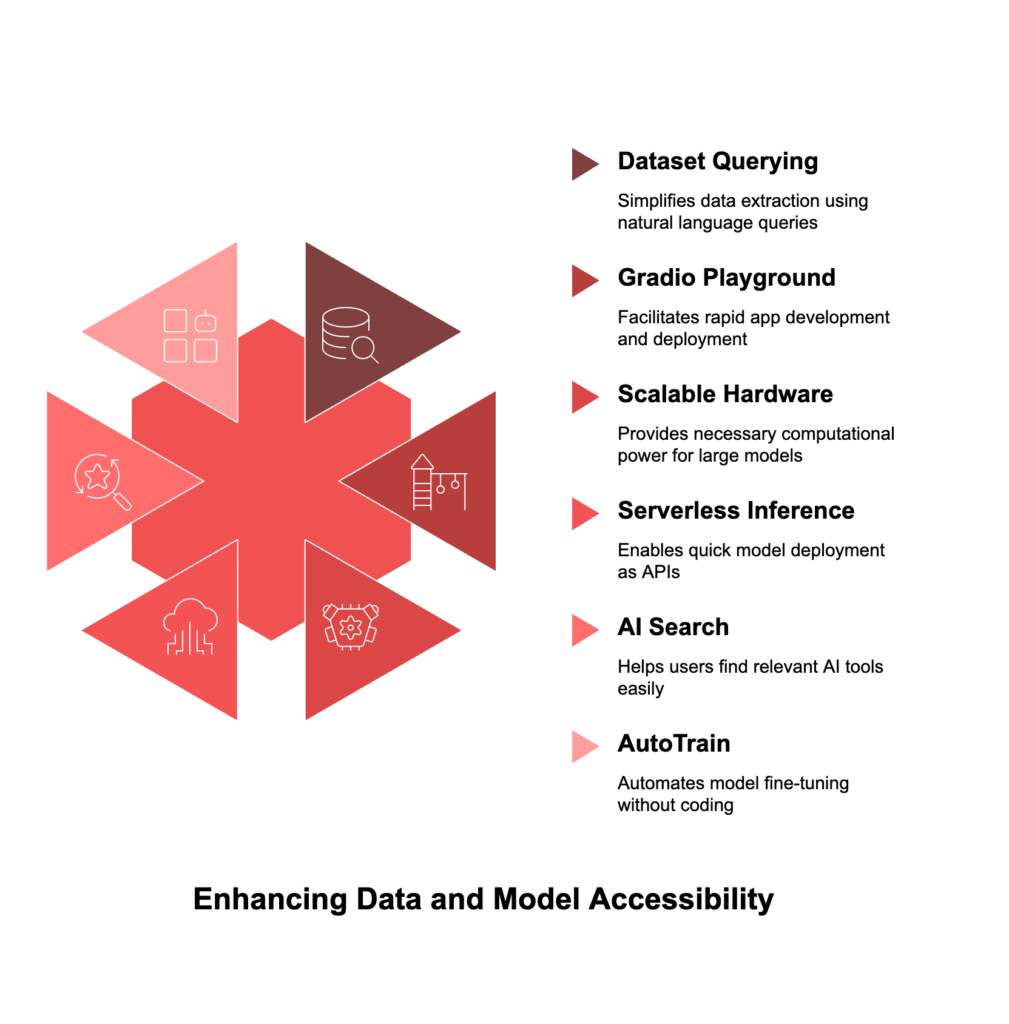

Hugging Face isn’t just a hub for AI models; it’s a full-stack ecosystem for building, deploying, and scaling AI projects. Its vast library of open-source models and datasets is well known, but the platform’s lesser-known tools can speed up your workflow just as much. Here are seven features worth knowing:

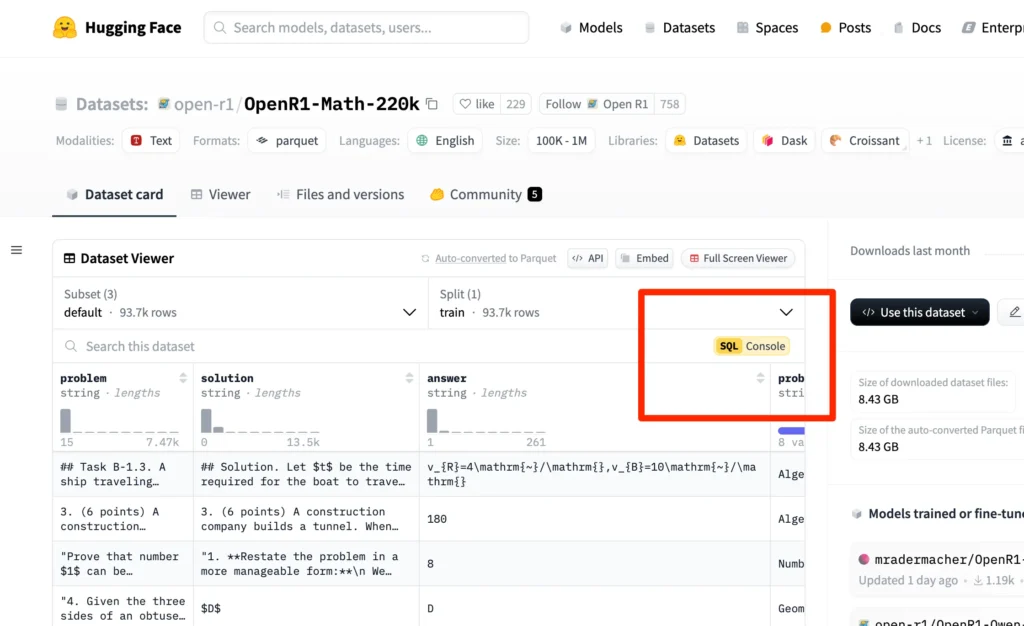

1 Dataset Querying with DuckDB

Hugging Face’s dataset viewer includes a DuckDB-powered SQL console for exploring datasets in the browser. You can describe what you want in natural language and have the SQL generated for you. For example, ask, “How many examples are in the ‘wikitext’ dataset?” and get an answer without writing a single line of SQL. It’s like having a data scientist in your pocket.

How It Works:

DuckDB integrates directly with Hugging Face datasets, allowing users to bypass manual SQL scripting. The AI generates optimized queries based on your question, making data exploration accessible even to non-technical users. This feature is particularly useful for large datasets like Wikipedia or IMDB reviews, where manual analysis would be time-consuming.

Example Use Case:

A marketing team wants to analyze customer sentiment trends. By asking, “Show me the most common keywords in the ‘twitter-sentiment’ dataset,” they get a list of trending topics without writing complex code.

Technical Deep Dive:

The query generator uses the dataset’s metadata, such as column names and types, to map natural-language questions to SQL, which DuckDB then runs. For instance, if you ask, “What’s the average rating in the ‘yelp-reviews’ dataset?” the question is translated into:

`SELECT AVG(rating) FROM yelp_reviews;`

This abstraction saves hours of manual query writing and debugging.
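To see what a query like this computes, here is a minimal sketch that runs it against a tiny in-memory table. It uses Python’s built-in `sqlite3` module as a stand-in for DuckDB so it runs with no extra installs. The rows are made up, and the `review` column is an assumption added for illustration; only the table name and the `rating` column come from the example query.

```python
import sqlite3

# In-memory database with a toy table; the rows are invented for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE yelp_reviews (review TEXT, rating INTEGER)")
conn.executemany(
    "INSERT INTO yelp_reviews VALUES (?, ?)",
    [("Great tacos", 5), ("Slow service", 3), ("Solid coffee", 4)],
)

# The same aggregate as the generated query in the text.
(average_rating,) = conn.execute(
    "SELECT AVG(rating) FROM yelp_reviews"
).fetchone()
print(f"Average rating: {average_rating:.2f}")

conn.close()
```

The `SELECT` statement itself is standard SQL, so DuckDB accepts it as written; only the setup around it would differ.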

Why It Matters:

For organizations with limited data science resources, DuckDB democratizes data access. Small businesses can now analyze sentiment, track product performance, or identify market trends without hiring SQL experts.

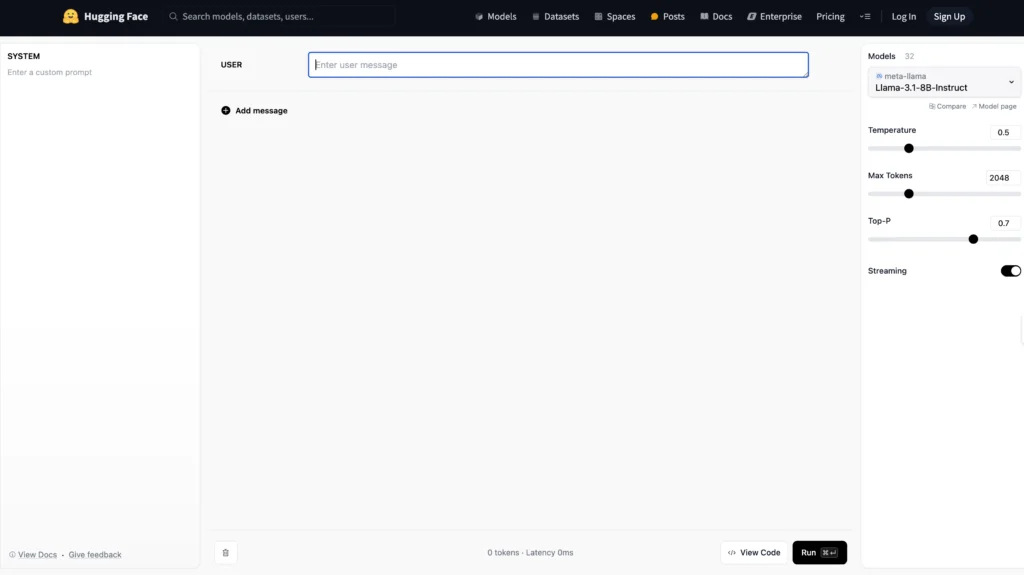

2 The Playground: Build & Deploy with Zero Setup

The Gradio Playground is a web-based editor where you can draft, test, and deploy apps instantly. Stuck? Let the AI auto-generate code for you. Click “deploy,” and your app goes live—publicly or privately. Perfect for rapid prototyping.

Key Features:

- AI-Powered Code Generation: Describe your app’s functionality, and the Playground generates a starter template.

- One-Click Deployment: Share your app via a public URL or keep it private for enterprise use.

- Collaboration Tools: Invite team members to co-edit and test in real time.

Example:

A developer builds a text-to-image app in the Playground, deploys it, and shares the link with stakeholders for feedback—all within an hour.

User Testimonial:

“Gradio Playground cut our prototyping time by 80%,” says Maria, a data scientist at a fintech startup. “We iterated on a fraud detection model’s UI in days, not weeks.”

Advanced Capabilities:

The Playground supports custom themes, API integrations, and A/B testing. For instance, a healthcare app can integrate with FHIR APIs to pull patient data, all within the Playground’s interface.

3 Scalable Hardware for Demanding Models

Run resource-heavy models seamlessly:

- ZeroGPU: Shared GPU access (~$10/month) for lightweight apps. Ideal for small teams or hobbyists.

- Dedicated Hardware: Upgrade to faster GPUs/CPUs for tasks like 3D generation or large LLM inference.

Why It Matters:

Large models like BLOOM or Stable Diffusion require significant computational power. Hugging Face’s hardware options eliminate bottlenecks, allowing developers to focus on innovation rather than infrastructure.

Enterprise Case Study:

A media company used ZeroGPU to prototype a video captioning app. After securing funding, they scaled to dedicated hardware, reducing inference time from 10 seconds to 0.5 seconds per clip.
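For a sense of scale, the figures in this case study work out as follows. This is plain arithmetic on the two latencies quoted above, assuming clips are processed one at a time; the variable names are illustrative.

```python
# Latencies quoted in the case study, in seconds per clip.
shared_latency_s = 10.0
dedicated_latency_s = 0.5

# How many times faster a single clip is processed.
speedup = shared_latency_s / dedicated_latency_s

# Sequential throughput: seconds in an hour divided by seconds per clip.
clips_per_hour_shared = 3600 / shared_latency_s
clips_per_hour_dedicated = 3600 / dedicated_latency_s

print(f"Speedup: {speedup:.0f}x")
print(
    f"Clips per hour: {clips_per_hour_shared:.0f} "
    f"-> {clips_per_hour_dedicated:.0f}"
)
```

In other words, the move to dedicated hardware is a 20x speedup, taking sequential throughput from 360 to 7,200 clips per hour.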

Pricing Breakdown:

- ZeroGPU: ~$10/month (shared GPU, limited availability).

- Dedicated GPU: ~$50–$200/month (based on usage).

- Custom Clusters: Enterprise plans for multi-node training.

4 Gradio & Spaces: Turn Models into Apps in Minutes

Gradio lets you build interactive web interfaces for ML models with just a few lines of Python code. Deploy these as “Spaces” on Hugging Face, and your prototype becomes a shareable, embeddable app—no backend setup required.
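As a rough idea of what “a few lines of Python” looks like, here is a minimal sketch. The `greet` function is a hypothetical stand-in for a real model’s prediction function, and `build_demo` is a helper name chosen for this example; `gr.Interface` is Gradio’s standard wrapper for turning a function into a web UI.

```python
def greet(name: str) -> str:
    """Toy stand-in for a model's predict function."""
    return f"Hello, {name}!"


def build_demo():
    # Imported lazily so greet() can be used without Gradio installed.
    import gradio as gr

    # "text" is Gradio's shortcut for a single-line text component.
    return gr.Interface(fn=greet, inputs="text", outputs="text")


# To run locally (requires `pip install gradio`):
# build_demo().launch()
```

Swapping `greet` for a function that calls your model is usually the only change needed to turn this into a real demo.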

Example Spaces:

- Lumina Image 2.0: Generate art from text prompts.

- MusicGen: Create music based on descriptive inputs.

- Image-to-Story: Upload an image, and an LLM writes a story around it.

Impact:

Spaces democratize AI access. Non-developers can use pre-built apps, while coders focus on iterating, not infrastructure.

Customization Options:

Spaces support themes, custom domains, and API integrations. For example, a real estate company built a virtual staging app using Lumina Image 2.0 and embedded it on their website.

5 Serverless Inference Endpoints

Deploy models as APIs in minutes. Start with a free tier, then scale to dedicated endpoints for high-traffic apps. Ideal for startups and enterprises alike.

How It Works:

- Upload your trained model to the Hugging Face Hub.

- Generate an API endpoint with a single command.

- Integrate the endpoint into your app for real-time inference.
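The last step, calling the endpoint from your app, can be sketched with nothing but the standard library. The URL and token below are placeholders, the helper names are made up for this example, and the sketch assumes the endpoint accepts the `{"inputs": ...}` JSON body and bearer-token header commonly used by Hugging Face inference APIs; check your endpoint’s own page for the exact format.

```python
import json
import urllib.request


def build_inference_request(
    endpoint_url: str, token: str, text: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a text input."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(endpoint_url: str, token: str, text: str):
    """Send the request and decode the JSON response."""
    request = build_inference_request(endpoint_url, token, text)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder values: replace with your real endpoint URL and access token.
request = build_inference_request(
    "https://example.invalid/my-endpoint", "hf_xxx", "I love this product!"
)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any network call is made.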

Cost Efficiency:

The free tier supports low-traffic testing, while paid tiers offer SLAs and priority scaling—perfect for apps with fluctuating demand.

Security Features:

Endpoints support OAuth2, API keys, and HTTPS encryption. Enterprises can enforce role-based access control (RBAC) to restrict usage.

Use Case:

A logistics company deployed a demand forecasting model as an API. Their warehouse management system queries the endpoint daily, reducing overstocking by 25%.

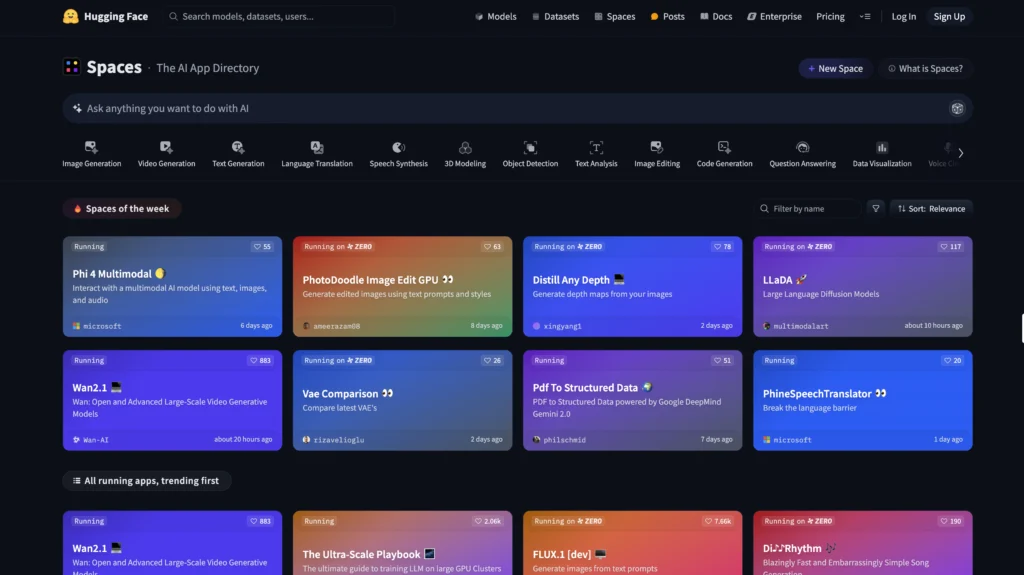

6 Finding a Space with AI

With thousands of Spaces, finding the right tool can be overwhelming. Hugging Face’s AI search solves this—ask naturally, like “find music generation tools,” and it surfaces relevant projects. Think of it as GitHub meets Google, but for AI apps.

Advanced Filtering:

Narrow results by:

- Task Type: Text-to-image, speech recognition, etc.

- Popularity: Sort by stars or recent activity.

- Hardware Requirements: Filter by GPU compatibility.

Example:

A developer searching for “real-time translation apps” discovers a Space that integrates with Zoom, built entirely in Gradio.

AI Search Algorithm:

The search engine uses semantic similarity to match queries with Spaces. For instance, “music generation” might return results tagged with “audio synthesis” or “MIDI processing.”
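To make “semantic similarity” concrete, here is a small sketch of ranking by cosine similarity, a common way to compare embeddings. It is not Hugging Face’s actual search implementation: the three-dimensional vectors are invented for illustration, whereas a real system would get them from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings for three Space tags (illustrative values only).
tag_embeddings = {
    "audio synthesis": [0.9, 0.1, 0.0],
    "MIDI processing": [0.8, 0.3, 0.1],
    "image captioning": [0.0, 0.2, 0.9],
}

# Made-up embedding for the query "music generation".
query_embedding = [1.0, 0.2, 0.0]

# Rank tags from most to least similar to the query.
ranked = sorted(
    tag_embeddings,
    key=lambda tag: cosine_similarity(query_embedding, tag_embeddings[tag]),
    reverse=True,
)
print(ranked)
```

With these toy vectors, the two audio-related tags rank above “image captioning” even though neither shares a word with the query, which is the point of matching on meaning rather than keywords.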

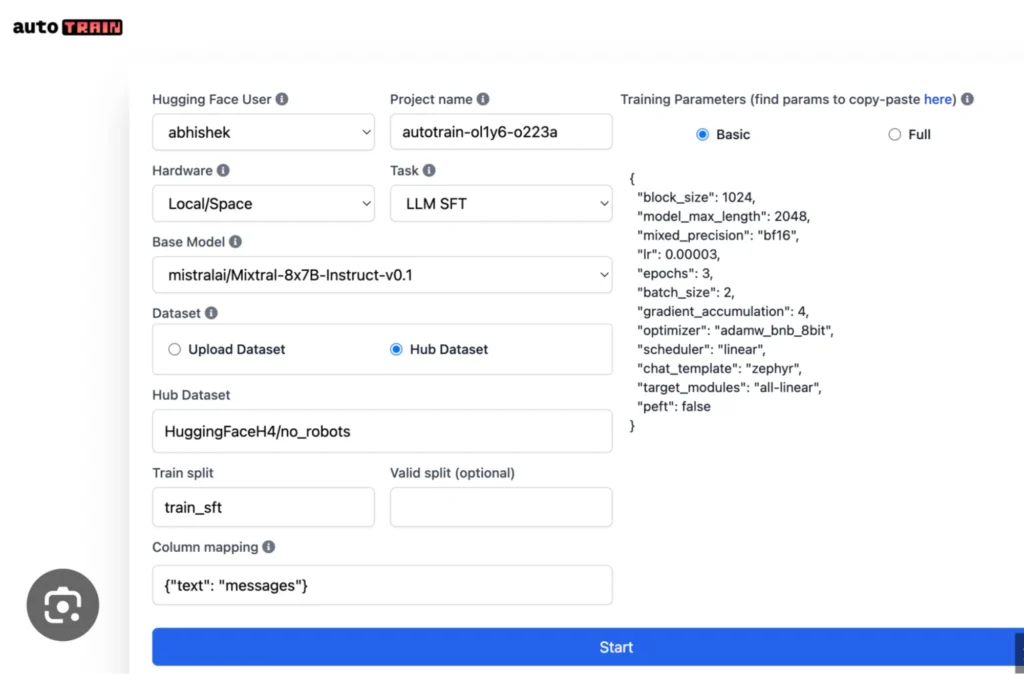

7 AutoTrain: Fine-Tune Models Without Coding

Need a custom model? AutoTrain handles everything from data preprocessing to hyperparameter tuning. Choose your task (text classification, image recognition, etc.), upload data, and let it find the best model. Perfect for teams without ML engineers.

Supported Tasks:

- LLM Fine-Tuning: Adapt a pretrained language model to domain-specific jargon.

- Tabular Data Regression: Predict sales trends from historical data.

- Question Answering: Train chatbots to answer FAQs accurately.

Enterprise Use Case:

A healthcare company used AutoTrain to fine-tune a model for medical record analysis, reducing manual annotation time by 70%.

Workflow:

- Upload data (CSV, JSON, etc.).

- Select a task and model architecture.

- AutoTrain runs experiments and deploys the best-performing model.

Pricing:

AutoTrain charges per training minute, starting at $0.10/min. Large jobs (e.g., LLM fine-tuning) may cost $50–$200.
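A quick back-of-the-envelope check shows how these figures relate. The sketch below assumes a flat per-minute rate at the starting price quoted above; actual billing may vary with hardware, and the function names are illustrative.

```python
RATE_PER_MINUTE = 0.10  # dollars per training minute, as quoted in the text


def training_cost(minutes: float, rate: float = RATE_PER_MINUTE) -> float:
    """Estimated cost in dollars for a job of the given length."""
    return minutes * rate


def minutes_for_budget(budget: float, rate: float = RATE_PER_MINUTE) -> float:
    """Training minutes a given budget buys at a flat rate."""
    return budget / rate


# A hypothetical 90-minute job.
print(f"90-minute job: ${training_cost(90):.2f}")

# The $50 to $200 range quoted for large jobs, converted to training time.
for budget in (50, 200):
    hours = minutes_for_budget(budget) / 60
    print(f"${budget} buys about {hours:.1f} hours of training")
```

At the starting rate, the $50 to $200 range corresponds to roughly 8 to 33 hours of training time.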

Wrap-Up

Hugging Face bridges the gap between AI research and real-world applications. From prototyping in the Playground to fine-tuning models via AutoTrain, it’s a one-stop shop for developers. And with Spaces, anyone can access cutting-edge AI tools—no coding required.
