Implementing RAG Systems with Unstructured Data: A Comprehensive Guide

In today’s digital landscape, organizations face a growing challenge: extracting meaningful insights from vast repositories of unstructured data. While Large Language Models (LLMs) have changed how we process information, they become far more useful when combined with Retrieval-Augmented Generation (RAG), which grounds their responses in an organization’s own data. This guide explores how modern RAG implementations are evolving beyond simple text documents to handle the complex, multimodal nature of real-world data.

The Evolution of RAG Systems: Beyond Text

Traditional RAG systems were conceived with a straightforward purpose: enhance LLM responses by retrieving relevant information from text-based knowledge bases. However, the real world rarely presents information in such a neat, organized format. Modern organizations store their institutional knowledge across various media types, from PDF reports and spreadsheets to images, videos, and audio recordings. This diversity of data formats has pushed RAG systems to evolve, developing capabilities to process and understand multiple modalities simultaneously. A modern RAG system can interpret images with embedded text, transcribe and analyze customer service calls, extract insights from video content, and even work with technical drawings and schematics, a substantial step beyond its text-only predecessors.

Technical Architecture and Core Components

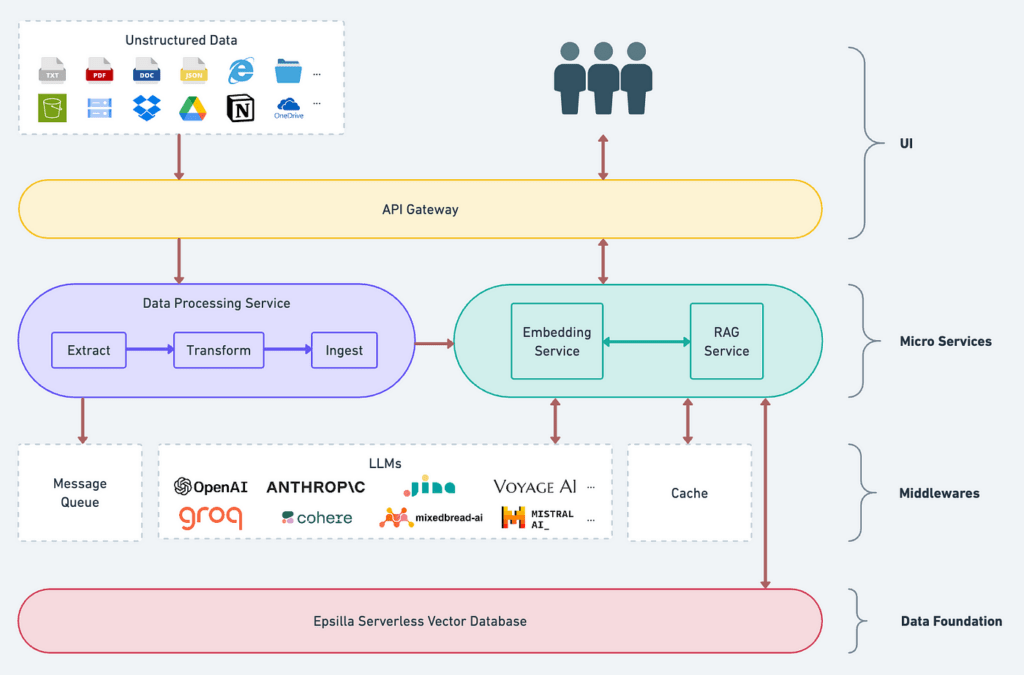

Core System Architecture

Modern RAG systems are built upon a sophisticated architecture that transforms raw, unstructured data into meaningful, searchable information. This architecture represents a significant advancement over traditional text-based systems, enabling organizations to process and understand diverse data types efficiently.

Data Ingestion Pipeline

The data ingestion pipeline acts as the system’s gateway, equipped with specialized processors for different data formats. Whether handling PDFs, images, or audio files, each processor performs its specific task while maintaining quality control. During this stage, the system extracts crucial metadata and establishes relationships between different content pieces, creating a foundation for effective information retrieval. The pipeline’s output then feeds the indexing stage, where libraries like FAISS (Facebook AI Similarity Search) provide highly efficient similarity search over large-scale vector data.
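
As a rough illustration of the "specialized processor per format" idea, the sketch below routes files to a processor by extension and attaches basic metadata. The `IngestedDocument` schema, the `PROCESSORS` registry, and the placeholder processor are all hypothetical names invented for this example; a real pipeline would plug a PDF parser, OCR engine, or speech-to-text model in where the placeholder sits.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import Callable, Dict, Tuple


@dataclass
class IngestedDocument:
    """Normalized output of the ingestion stage (hypothetical schema)."""
    source: str
    modality: str
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def process_text(path: Path) -> str:
    # Plain text needs no conversion.
    return path.read_text(encoding="utf-8")


def process_placeholder(path: Path) -> str:
    # Stand-in for processors this sketch does not implement
    # (PDF parsing, OCR, audio transcription).
    return f"[unprocessed {path.suffix} content]"


# Map file extensions to (modality, processor) pairs.
PROCESSORS: Dict[str, Tuple[str, Callable[[Path], str]]] = {
    ".txt": ("text", process_text),
    ".md": ("text", process_text),
    ".pdf": ("document", process_placeholder),
    ".png": ("image", process_placeholder),
    ".wav": ("audio", process_placeholder),
}


def ingest(path: Path) -> IngestedDocument:
    """Route a file to its processor and attach basic metadata."""
    suffix = path.suffix.lower()
    if suffix not in PROCESSORS:
        raise ValueError(f"No processor registered for {suffix!r}")
    modality, processor = PROCESSORS[suffix]
    metadata = {
        "filename": path.name,
        "extension": suffix,
        "size_bytes": str(path.stat().st_size),
    }
    return IngestedDocument(str(path), modality, processor(path), metadata)
```

Keeping the registry as plain data means a new format can be supported by adding one entry, without touching `ingest` itself.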

Vector Storage and Indexing

The processed data is then converted into vector embeddings – mathematical representations that capture the content’s meaning. These embeddings are stored in specialized databases that enable quick similarity searches. This approach allows the system to create comparable representations of different content types, from text documents to images, enabling unified search capabilities across all media formats. Modern vector databases like ChromaDB have simplified this process, offering robust solutions for storing and querying vector embeddings with minimal setup overhead.
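
To make the embed-store-search loop concrete, here is a minimal in-memory sketch using only the Python standard library. It is not how FAISS or ChromaDB work internally, and `toy_embed` is a hashed bag-of-words stand-in that captures word overlap rather than meaning; a real system would call an embedding model there. `InMemoryVectorStore` and `DIM` are likewise names assumed for illustration.

```python
import hashlib
import math
from typing import List, Tuple

DIM = 64  # toy dimensionality; real embedding models use far more


def toy_embed(text: str) -> List[float]:
    """Hashed bag-of-words vector, L2-normalized. NOT a semantic embedding."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        digest = hashlib.md5(token.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Brute-force cosine-similarity search over stored vectors."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, doc_id: str, text: str) -> None:
        self._items.append((doc_id, toy_embed(text)))

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = toy_embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, vec)))
            for doc_id, vec in self._items
        ]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```

Brute-force search like this is linear in the number of stored vectors; dedicated vector indexes exist precisely to avoid that cost at scale.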

Context Assembly Engine

At the heart of the system, the context assembly engine makes intelligent decisions about combining and presenting information. It breaks down large documents while preserving their meaning, understands relationships between different content pieces, and ensures retrieved information maintains its relevance and coherence. This component is crucial for delivering accurate and contextually appropriate responses to queries.
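
One common way to break down large documents while keeping some surrounding context is fixed-size chunking with overlap. The sketch below splits on whitespace and is only an assumption about how such an engine might chunk; the function name `chunk_words` and its default sizes are illustrative, and production systems often split on sentence or section boundaries instead.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word windows that share `overlap` words with the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, to avoid a redundant tail chunk.
        if start + chunk_size >= len(words):
            break
    return chunks
```

The overlap means a sentence that straddles a boundary appears intact in at least one chunk, at the cost of storing some words twice.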

Processing and Retrieval

When handling queries, the system leverages its vector storage to identify relevant information through semantic understanding rather than simple keyword matching. It then assembles this information into coherent responses, combining content from multiple sources while maintaining proper context. The entire process is managed by a robust integration layer that ensures smooth communication between components and enables seamless integration with existing organizational tools.
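
The assembly step described above can be sketched as follows: take retrieved chunks, order them by score, keep as many as fit a size budget, and label each with its source before building the prompt. `RetrievedChunk`, `assemble_context`, `build_prompt`, the character budget, and the prompt wording are all hypothetical choices for this example; real systems usually budget in tokens rather than characters.

```python
from typing import List, NamedTuple


class RetrievedChunk(NamedTuple):
    source: str
    text: str
    score: float


def assemble_context(chunks: List[RetrievedChunk], max_chars: int = 1000) -> str:
    """Join the highest-scoring chunks that fit within a character budget."""
    parts: List[str] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        entry = f"[{chunk.source}] {chunk.text}"
        if used + len(entry) > max_chars:
            continue  # skip chunks that would exceed the budget
        parts.append(entry)
        used += len(entry)
    return "\n".join(parts)


def build_prompt(question: str, chunks: List[RetrievedChunk],
                 max_chars: int = 1000) -> str:
    """Combine labeled context and the user question into one prompt string."""
    context = assemble_context(chunks, max_chars)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

Prefixing each chunk with its source keeps provenance visible to the model, which makes it easier to ask for cited answers.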

Through this streamlined architecture, modern RAG systems can process and understand content in ways that traditional systems cannot, opening new possibilities for knowledge management and information discovery.

Challenges in Implementing RAG Systems for Unstructured Data

Data Quality Issues

The foundation of any effective RAG system lies in the quality of its input data. Organizations frequently encounter documents in inconsistent formats, ranging from PDFs and Word files to scanned images and handwritten notes. This inconsistency is compounded by varying quality levels across different media types – from poor audio recordings to blurry scanned documents. Missing metadata and context further complicate the situation, making it difficult to establish relationships between different pieces of information and maintain the original meaning of the content.

Processing Overhead

Converting unstructured data into a format suitable for RAG systems demands significant computational resources. Tasks like audio transcription, image processing, and text extraction require substantial CPU and GPU power. This processing overhead scales with the volume of data, so it can quickly become a bottleneck as collections grow. Organizations must carefully balance the need for quick processing with available computational resources, often leading to difficult decisions about processing priorities and resource allocation.

Storage Management

As organizations process and store more unstructured data, storage costs can quickly escalate. Vector embeddings, which are essential for efficient information retrieval, require significant storage space and specialized database systems. The challenge extends beyond mere storage capacity – organizations must also maintain high-speed access to this data while ensuring proper backup and recovery mechanisms are in place. This balancing act between storage costs, accessibility, and data security presents a constant challenge for system administrators.
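
A back-of-the-envelope estimate helps size the raw embedding storage. Assuming each vector is stored as 32-bit floats, the raw size is simply vectors × dimensions × 4 bytes. The figures used below (one million vectors, 768 dimensions) are illustrative assumptions, not measurements, and the estimate excludes index structures, metadata, and replication.

```python
def embedding_storage_bytes(num_vectors: int, dimensions: int,
                            bytes_per_value: int = 4) -> int:
    """Raw size of the vectors alone (float32 by default)."""
    return num_vectors * dimensions * bytes_per_value


def to_gib(num_bytes: int) -> float:
    """Convert bytes to gibibytes (2**30 bytes)."""
    return num_bytes / 1024 ** 3


# Illustrative numbers: 1,000,000 vectors of 768 dimensions each.
raw_bytes = embedding_storage_bytes(1_000_000, 768)
print(raw_bytes)                    # 3072000000
print(round(to_gib(raw_bytes), 2))  # 2.86
```

Halving the bytes per value (for example, 16-bit floats) halves this figure, which is one reason quantization is a common lever for storage cost.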

Integration Complexity

Connecting multiple data sources while maintaining system performance proves to be a significant challenge. Organizations typically need to integrate various internal systems, each with its own data format and access protocols. This integration must be seamless enough to allow real-time data processing while being robust enough to handle system failures and data inconsistencies. The complexity increases when dealing with legacy systems or when real-time synchronization is required across different platforms.
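
One small piece of handling system failures is retrying a flaky source with exponential backoff. The sketch below is a generic pattern, not tied to any particular connector: `fetch_with_retry` is a hypothetical helper, it assumes failures surface as `ConnectionError`, and the injectable `sleep` parameter exists only so the delay can be replaced in tests.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_retry(fetch: Callable[[], T], attempts: int = 3,
                     base_delay: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fetch`, retrying on ConnectionError with doubling delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

Retries only help with transient failures; a source that is down for hours still needs queuing or a fallback path.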

Performance Optimization

Maintaining optimal system performance becomes increasingly challenging as the volume and variety of data grow. Organizations must constantly tune their systems to ensure quick response times while maintaining accuracy in information retrieval. This involves optimizing various components like embedding models, search algorithms, and caching strategies. The challenge lies in finding the right balance between speed and accuracy while keeping resource usage within acceptable limits.
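
Caching is often the cheapest of these optimizations. As a minimal sketch, the code below memoizes query embeddings with `functools.lru_cache` so repeated queries skip the model call. `expensive_embed` is a dummy stand-in for a real embedding call, and the `CALLS` counter exists only to show how often it actually runs.

```python
from functools import lru_cache
from typing import Tuple

CALLS = {"embed": 0}  # counts how often the expensive path runs


def expensive_embed(text: str) -> Tuple[float, ...]:
    # Stand-in for a slow embedding-model call; returns a dummy vector.
    CALLS["embed"] += 1
    return tuple(float(ord(c)) for c in text[:8])


@lru_cache(maxsize=1024)
def cached_embed(normalized_query: str) -> Tuple[float, ...]:
    return expensive_embed(normalized_query)


def embed_query(query: str) -> Tuple[float, ...]:
    # Normalize case and whitespace so trivially different queries
    # share one cache entry.
    return cached_embed(" ".join(query.lower().split()))
```

The cache returns tuples rather than lists because cached values are shared between callers and should not be mutated.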

Maintenance and Updates

Keeping a RAG system current requires regular updates and maintenance. As new types of data emerge and existing formats evolve, the system must adapt accordingly. This includes updating embedding models, modifying processing pipelines, and adjusting integration points with other systems. Regular monitoring and maintenance are essential to ensure the system continues to perform effectively, but this ongoing effort requires significant time and resource investment.

Scaling and Performance Optimization

Building a RAG system that can grow with an organization’s needs requires careful attention to scalability and performance. A modular architecture allows for separate processors handling different data types while maintaining flexibility in embedding models and storage backends. Performance optimization becomes crucial as data volumes grow, necessitating intelligent caching strategies, batch processing for resource-intensive operations, and asynchronous processing pipelines. Regular monitoring and optimization ensure the system continues to perform efficiently as it scales, while proper data governance and security measures protect sensitive information and maintain compliance with regulatory requirements.
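
Batching and asynchronous processing can be combined in a few lines with `asyncio`. In this sketch, `embed_batch` is a placeholder that returns text lengths instead of calling a model, and `process_all`, the batch size, and the concurrency limit are assumed names and values chosen for illustration.

```python
import asyncio
from typing import Iterator, List


def batched(items: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `batch_size` items."""
    for i in range(0, len(items), batch_size):
        yield items[i:i + batch_size]


async def embed_batch(batch: List[str]) -> List[int]:
    # Stand-in for awaiting a model or API call on a whole batch.
    await asyncio.sleep(0)
    return [len(text) for text in batch]


async def process_all(items: List[str], batch_size: int = 2,
                      max_concurrency: int = 2) -> List[int]:
    """Process batches concurrently, capped by a semaphore."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(batch: List[str]) -> List[int]:
        async with semaphore:
            return await embed_batch(batch)

    # gather returns results in the order the batches were submitted.
    results = await asyncio.gather(
        *(worker(batch) for batch in batched(items, batch_size))
    )
    return [value for batch_result in results for value in batch_result]
```

A call such as `asyncio.run(process_all(["a", "bb", "ccc"]))` runs the whole pipeline; the semaphore keeps concurrency bounded so a large backlog does not overwhelm the downstream service.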

Real-World Impact and Future Directions

The practical impact of implementing RAG systems for unstructured data is substantial. Organizations have reported significant improvements in information retrieval efficiency, with some seeing up to 40% reduction in time spent searching for relevant information. Technical teams have experienced enhanced decision-making capabilities through better access to historical data and insights. Looking forward, the field continues to evolve with emerging trends in multimodal understanding, zero-shot learning, and edge processing. Research efforts are focusing on improving cross-modal retrieval, developing more efficient embedding techniques, and optimizing context selection methods. These advances promise to make RAG systems even more powerful tools for unlocking the value hidden in unstructured data.

The journey of implementing RAG for unstructured data is complex but rewarding. Success requires a balanced approach that considers technical capabilities, organizational needs, and practical constraints. By understanding these aspects and following proper implementation strategies, organizations can build systems that truly harness the power of their unstructured data, turning information chaos into valuable insights that drive better decision-making and improved outcomes.