Langfuse: Transforming LLM Development Through Advanced Observability and Control

The landscape of artificial intelligence has been transformed by Large Language Models (LLMs), which have become essential components of modern applications. However, this transformation brings unique challenges that traditional development tools struggle to address. Enter Langfuse, an innovative open-source platform that’s revolutionizing how developers manage and optimize their LLM applications.

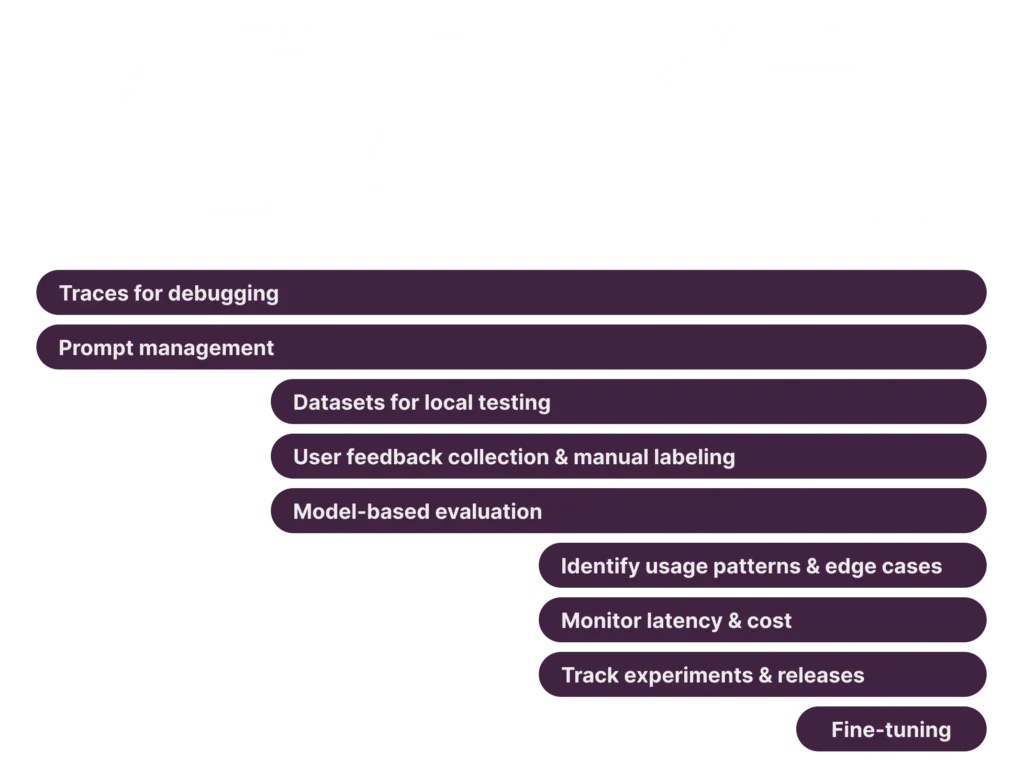

Langfuse represents a paradigm shift in LLM application development, offering a comprehensive solution that addresses the complex needs of modern AI-powered applications. By combining sophisticated monitoring tools, advanced debugging capabilities, and intelligent prompt management, Langfuse enables developers to build more reliable and efficient applications while maintaining full visibility into their operations.


Core Platform Features

The foundation of Langfuse’s success lies in its thoughtfully designed core features that address the fundamental challenges of LLM application development. At its heart, the platform provides robust development tools that give developers unprecedented control over their applications.

The platform’s observability features serve as its cornerstone, offering developers a clear window into their applications’ behavior. Through sophisticated SDKs available for both Python and JavaScript/TypeScript, developers can seamlessly instrument their applications to capture every crucial interaction with their LLMs. This instrumentation goes beyond simple logging, providing context-rich information that proves invaluable during development and debugging.

The Langfuse UI transforms complex application data into actionable insights through its intuitive interface. Developers can navigate through user sessions, examine logs, and analyze performance metrics with ease. This visual approach to application monitoring makes it possible to identify patterns and issues that might otherwise remain hidden in traditional logging systems.

The platform’s monitoring capabilities extend beyond basic metrics tracking. Its analytics suite covers the following application performance metrics:

- Real-time response latency monitoring

- Resource utilization patterns

- Cost optimization opportunities

- Quality consistency tracking
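To make the latency and cost metrics above concrete, here is a minimal sketch of how such figures could be derived from raw call records. It uses only the Python standard library and is not Langfuse's own implementation; the `CallRecord` type, the `summarize` helper, and the per-token prices are all hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens (illustrative only).
PRICE_PER_1K_INPUT = 0.50
PRICE_PER_1K_OUTPUT = 1.50


@dataclass
class CallRecord:
    """One LLM call: how long it took and how many tokens it used."""
    latency_ms: float
    input_tokens: int
    output_tokens: int


def call_cost(record: CallRecord) -> float:
    """Cost of a single call under the assumed per-token prices."""
    return (record.input_tokens / 1000 * PRICE_PER_1K_INPUT
            + record.output_tokens / 1000 * PRICE_PER_1K_OUTPUT)


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (pct in (0, 100])."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: List[CallRecord]) -> Dict[str, float]:
    """Aggregate latency and cost figures for a batch of calls."""
    latencies = [r.latency_ms for r in records]
    return {
        "mean_latency_ms": mean(latencies),
        "p95_latency_ms": percentile(latencies, 95),
        "total_cost_usd": sum(call_cost(r) for r in records),
    }
```

Tracking a high percentile alongside the mean is a common design choice, because a few slow requests can dominate user experience without moving the average much.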

The testing framework represents another crucial aspect of the platform, enabling thorough experimentation and validation before deployment. Developers can use robust benchmarking tools to ensure their applications meet performance standards and quality expectations, while dataset-based testing allows for comprehensive validation of model outputs.
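Dataset-based testing can be pictured as running every item of a fixed dataset through the application and scoring the output against an expected value. The sketch below is a standard-library illustration of that loop, not the Langfuse datasets API; `run_dataset`, `exact_match`, and the item keys `input` and `expected` are hypothetical names.

```python
from typing import Callable, Dict, List


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output equals the expected text, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_dataset(
    dataset: List[Dict[str, str]],
    app: Callable[[str], str],
    scorer: Callable[[str, str], float] = exact_match,
) -> Dict[str, object]:
    """Run each dataset item through `app` and score the result."""
    results = []
    for item in dataset:
        output = app(item["input"])
        results.append({
            "input": item["input"],
            "output": output,
            "score": scorer(output, item["expected"]),
        })
    average = sum(r["score"] for r in results) / len(results) if results else 0.0
    return {"results": results, "average_score": average}
```

In practice `app` would wrap the real LLM pipeline, and the scorer could be replaced with a softer measure such as a similarity threshold or a model-based grader.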

Advanced Tracing and Debugging

Langfuse’s tracing system stands as one of its most sophisticated features, offering unprecedented visibility into LLM application execution. The system creates a comprehensive view of how data flows through an application, enabling developers to understand complex interactions and identify optimization opportunities.

Consider a typical LLM application that processes user queries through multiple stages: input preprocessing, context retrieval, LLM interaction, and response formatting. Langfuse’s tracing system captures each step of this process, creating a detailed map of the application’s behavior. This level of visibility proves invaluable when debugging complex issues or optimizing application performance.
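The four stages described above can be sketched as nested, timed spans under a single trace. This is a conceptual, standard-library illustration of the idea rather than the Langfuse SDK; the `Tracer` and `Span` classes, the stage names, and the stubbed retrieval and model steps are all assumptions made for the example.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Span:
    """One timed step in a request; children are nested steps."""
    name: str
    start: float
    end: Optional[float] = None
    children: List["Span"] = field(default_factory=list)

    @property
    def duration_ms(self) -> float:
        end = self.end if self.end is not None else time.perf_counter()
        return (end - self.start) * 1000.0


class Tracer:
    """Collects spans into a tree rooted at one trace."""

    def __init__(self, name: str) -> None:
        self.root = Span(name, time.perf_counter())
        self._stack = [self.root]

    @contextmanager
    def span(self, name: str):
        current = Span(name, time.perf_counter())
        self._stack[-1].children.append(current)  # attach to the enclosing span
        self._stack.append(current)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self._stack.pop()

    def finish(self) -> Span:
        self.root.end = time.perf_counter()
        return self.root


def handle_query(tracer: Tracer, query: str) -> str:
    """Run the four pipeline stages, recording one span per stage."""
    with tracer.span("preprocess"):
        cleaned = query.strip().lower()
    with tracer.span("retrieve_context"):
        context = ["placeholder document"]  # stand-in for a real retriever
    with tracer.span("llm_call"):
        answer = f"echo: {cleaned} ({len(context)} docs)"  # stand-in for a model call
    with tracer.span("format_response"):
        return answer.capitalize()


def print_tree(span: Span, depth: int = 0) -> None:
    """Print the span tree with per-step durations."""
    print(f"{'  ' * depth}{span.name}: {span.duration_ms:.2f} ms")
    for child in span.children:
        print_tree(child, depth + 1)
```

Calling `handle_query(tracer, "...")` followed by `print_tree(tracer.finish())` prints one line per stage, which is the kind of per-step breakdown a tracing UI presents graphically.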

The interactive debugging environment transforms how developers approach problem-solving in LLM applications. Rather than relying on traditional debugging methods, developers can observe their applications in real-time, inspecting variables and evaluating expressions as the application processes requests. This immediate feedback loop significantly reduces the time required to identify and resolve issues.

Performance analytics in Langfuse go beyond simple metrics tracking to provide actionable insights. The platform aggregates data from multiple sources to create a comprehensive view of application performance, enabling teams to:

Make Data-Driven Decisions: Through detailed performance dashboards and custom metric tracking, teams can identify trends and patterns that inform optimization strategies.

Optimize Resource Utilization: By analyzing resource usage patterns, teams can make informed decisions about scaling and resource allocation.

Monitor Quality Metrics: Continuous tracking of output quality helps maintain high standards and identify areas for improvement.

Prompt Management and Optimization

Prompt management in Langfuse represents a sophisticated approach to one of the most crucial aspects of LLM applications. The platform provides a comprehensive environment for creating, testing, and deploying prompts, enabling teams to maintain consistent quality while promoting collaboration.

The version control system for prompts operates similarly to modern code version control, allowing teams to track changes, experiment with variations, and roll back when necessary. This systematic approach to prompt management ensures that teams can innovate while maintaining stability in their applications.

When it comes to testing and deploying prompts, Langfuse offers a methodical approach that combines rigorous validation with flexible deployment options. Teams can conduct A/B testing to compare prompt variations, measure performance metrics, and make data-driven decisions about which prompts to deploy.
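A minimal sketch of prompt A/B testing has two parts: assigning each user to a variant consistently, and comparing scores per variant afterwards. The code below uses only the standard library and does not reflect any Langfuse API; `assign_variant`, `mean_scores`, and the example variant labels are hypothetical.

```python
import hashlib
from statistics import mean
from typing import Dict, List, Sequence


def assign_variant(user_id: str, variants: Sequence[str]) -> str:
    """Deterministically map a user to one variant by hashing the user ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


def mean_scores(scores_by_variant: Dict[str, List[float]]) -> Dict[str, float]:
    """Average the collected quality scores for each variant that has data."""
    return {v: mean(s) for v, s in scores_by_variant.items() if s}


def best_variant(scores_by_variant: Dict[str, List[float]]) -> str:
    """Return the variant with the highest mean score."""
    averages = mean_scores(scores_by_variant)
    return max(averages, key=averages.get)
```

Hashing the user ID keeps a user on the same prompt variant across sessions without storing any assignment state. A comparison of means is only a starting point; a real decision would also consider sample size and statistical significance.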

Implementation and Integration Guide

Implementing Langfuse requires careful planning and consideration of organizational needs. The platform offers flexible deployment options to accommodate different requirements:

Self-hosted Installation: Organizations can maintain complete control over their data and infrastructure through self-hosted deployments using Docker containers or cloud platforms like AWS, GCP, and Azure.

Managed Cloud Service: For teams looking for a more hands-off approach, the managed cloud service provides automatic updates and infrastructure management, along with a generous free tier for smaller projects.

The integration process begins with selecting the appropriate SDK for your technology stack. Langfuse provides comprehensive support through:

- A rich Python SDK that offers full platform functionality

- JavaScript/TypeScript SDK for web applications

- RESTful API for custom integrations

- WebSocket support for real-time applications
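For a custom integration against the REST API, the first step is usually loading credentials and building an authentication header. The sketch below assumes the API accepts HTTP Basic authentication with the public key as the username and the secret key as the password, and that credentials are supplied through the environment variables `LANGFUSE_PUBLIC_KEY`, `LANGFUSE_SECRET_KEY`, and `LANGFUSE_HOST`. Verify these details, and the default host used here, against the current Langfuse documentation before relying on them. The helper names are hypothetical.

```python
import base64
import os
from typing import Dict, Mapping

# Assumed default for the managed cloud service; self-hosted setups use their own URL.
DEFAULT_HOST = "https://cloud.langfuse.com"


def load_config(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read credentials from the environment, failing early if any are missing."""
    missing = [k for k in ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY") if not env.get(k)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return {
        "public_key": env["LANGFUSE_PUBLIC_KEY"],
        "secret_key": env["LANGFUSE_SECRET_KEY"],
        "host": env.get("LANGFUSE_HOST", DEFAULT_HOST).rstrip("/"),
    }


def basic_auth_header(public_key: str, secret_key: str) -> Dict[str, str]:
    """Build an HTTP Basic Authorization header from the key pair."""
    token = base64.b64encode(f"{public_key}:{secret_key}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}
```

Keeping keys in environment variables rather than in source code lets the same application run unchanged against a self-hosted instance or the managed service.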

Real-world Applications and Success Stories

The true value of Langfuse becomes evident when examining its real-world applications. Organizations across various sectors have leveraged the platform to build and maintain sophisticated LLM applications that serve diverse needs.

In the realm of conversational AI, companies have used Langfuse to develop and optimize customer service chatbots that handle thousands of interactions daily. The platform’s comprehensive monitoring and debugging capabilities have enabled these organizations to maintain high service quality while continuously improving their systems based on real-world usage patterns.

Content generation applications represent another area where Langfuse has proved invaluable. Teams developing automated content creation systems have utilized the platform’s prompt management and testing capabilities to ensure consistent output quality while maintaining efficient resource utilization.

The success metrics reported by organizations using Langfuse tell a compelling story of improvement across multiple dimensions. Many teams report significant reductions in debugging time, improved application reliability, and more efficient resource utilization. These improvements translate directly into better user experiences and more cost-effective operations.

Conclusion: Transforming LLM Development with Langfuse

Langfuse represents a significant leap forward in LLM application development, standing alongside other innovative tools in the modern AI ecosystem. While platforms like Microsoft AutoGen excel in multi-agent workflows and Microsoft Semantic Kernel focuses on AI orchestration, Langfuse specializes in providing comprehensive observability and control for LLM applications.

The platform’s integrated approach combines:

- Sophisticated tracing for complete visibility

- Real-time debugging tools for rapid development

- Comprehensive analytics for data-driven decisions

- Robust prompt management for consistent quality

- Flexible deployment options for varying needs

For teams looking to build production-grade LLM applications, Langfuse provides the essential tools needed to succeed in this rapidly evolving landscape. Its open-source nature and active community ensure continuous evolution alongside the changing demands of AI development.